Human Computer Integration Lab

Computer Science Department, University of Chicago

Essays (long-form writings from our lab)

Essay #4: UIST in the era of AI: reflections by Yudai Tanaka

Oct 5th, 2025

The mood of a conference often reflects what the community is collectively thinking about at that moment. At this year’s UIST (2025), I felt our community trying to define what UIST is in the era of AI. In the past two years, we seemed a bit overwhelmed by the wave of generative and LLM tools. But this year felt different: I started to see how UIST might evolve while maintaining its unique identity. A few key moments at the conference made me feel this way, and this is what this short reflection is about! (p.s.: If you were looking for an essay showing our UIST contributions from 2025 and our UIST video showing all our demos in one take, check it here.)

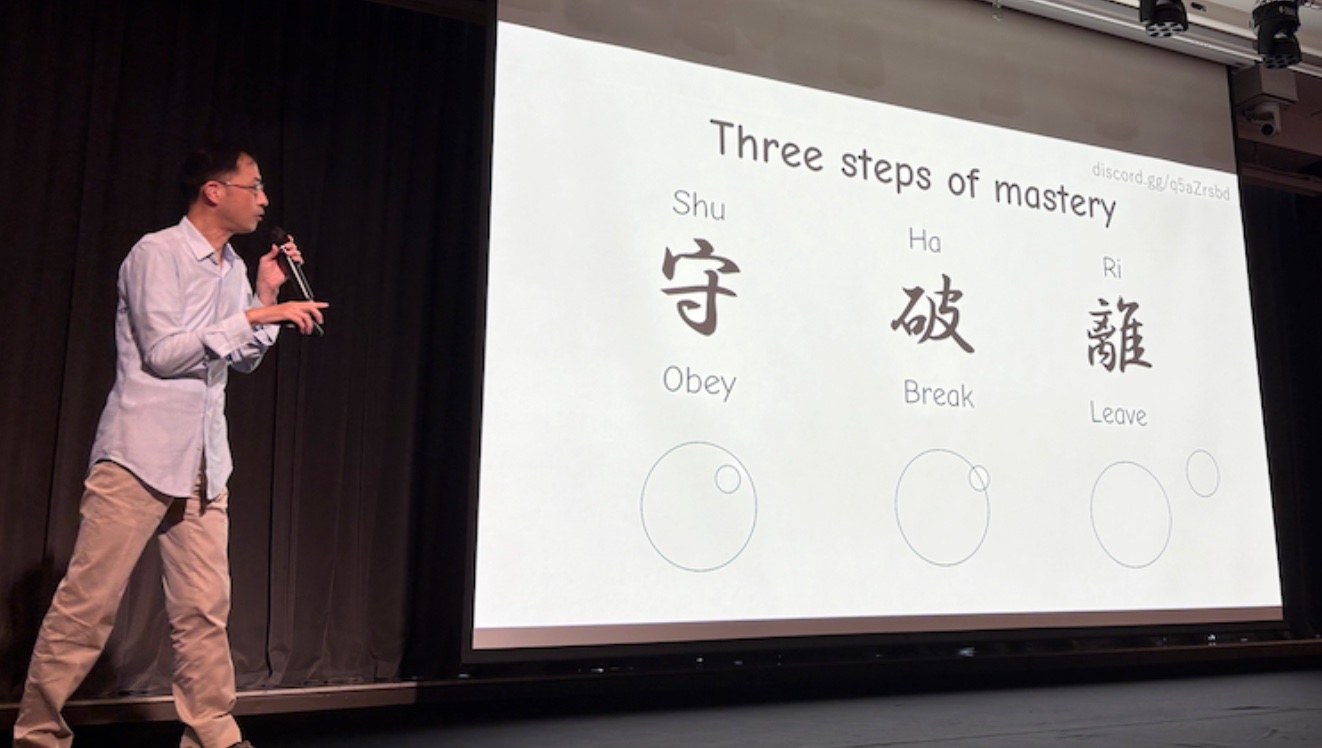

In his UIST vision talk, Prof. Takeo Igarashi questioned the common view that the utility of generative AI is about making things easier and faster (you can read the abstract here). He warned that this mindset risks filling the world with “slops”: shallow, mass-produced content. Instead, he asked how AI could help us engage more deeply with our creative process and amplify our intent rather than overwrite it. He ended with a metaphor drawn from Asian craftsmanship (the three steps of mastery: obey (守), break (破), leave (離)). It was a reminder that creativity isn’t just about speed or efficiency, but about growth and reflection. Coincidentally, the final day of UIST lined up with the release of Sora 2, a moment that almost felt like an antithesis to Takeo’s message. Watching the flood of auto-generated memes on social media, I couldn’t help but think about how quickly the “world of slops” he warned about was materializing.

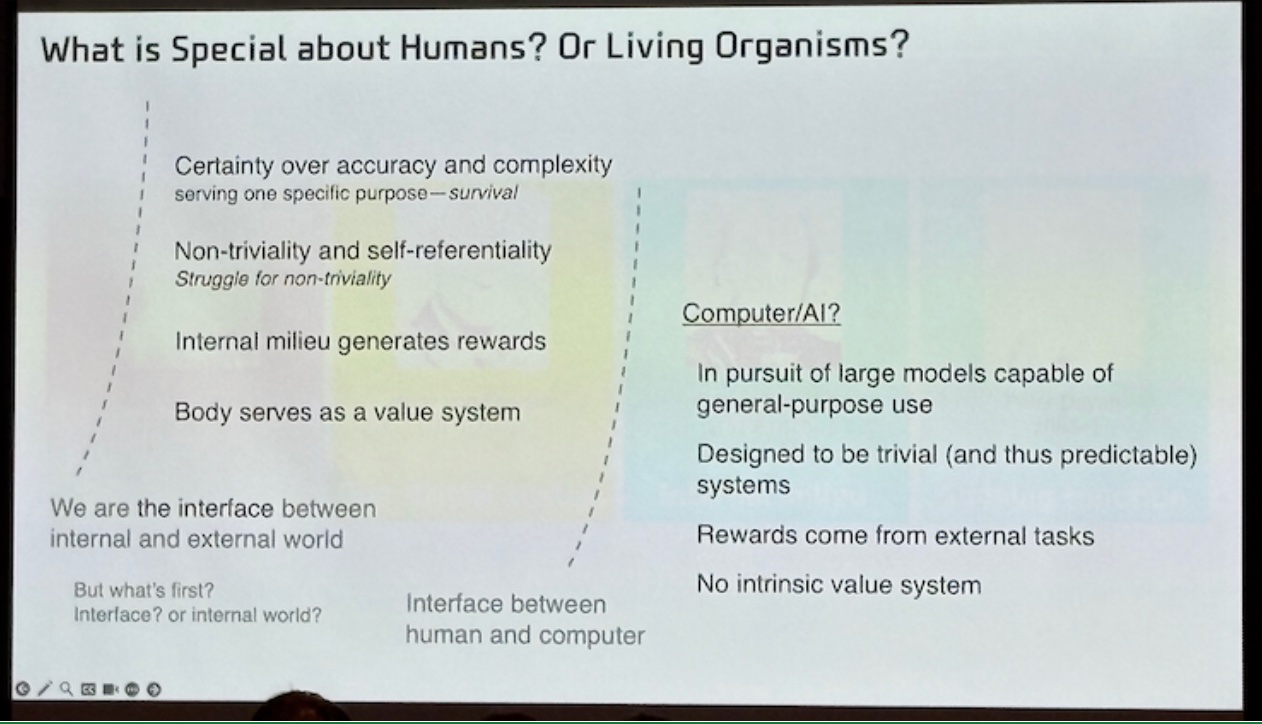

The closing keynote, by Prof. Choong-Wan Woo, was also about the relationship between humans and AI, but from a

completely different perspective. Coming from a background in system dynamics and neuroscience, Woo talked about

what makes humans different from AI (and vice versa): our internal purpose, embodied value systems, and

self-referentiality.

In other words, life is not just about computation—it’s about having intrinsic motivations

and an internal model of ourselves. The talk claimed that these are things current AI systems fundamentally lack

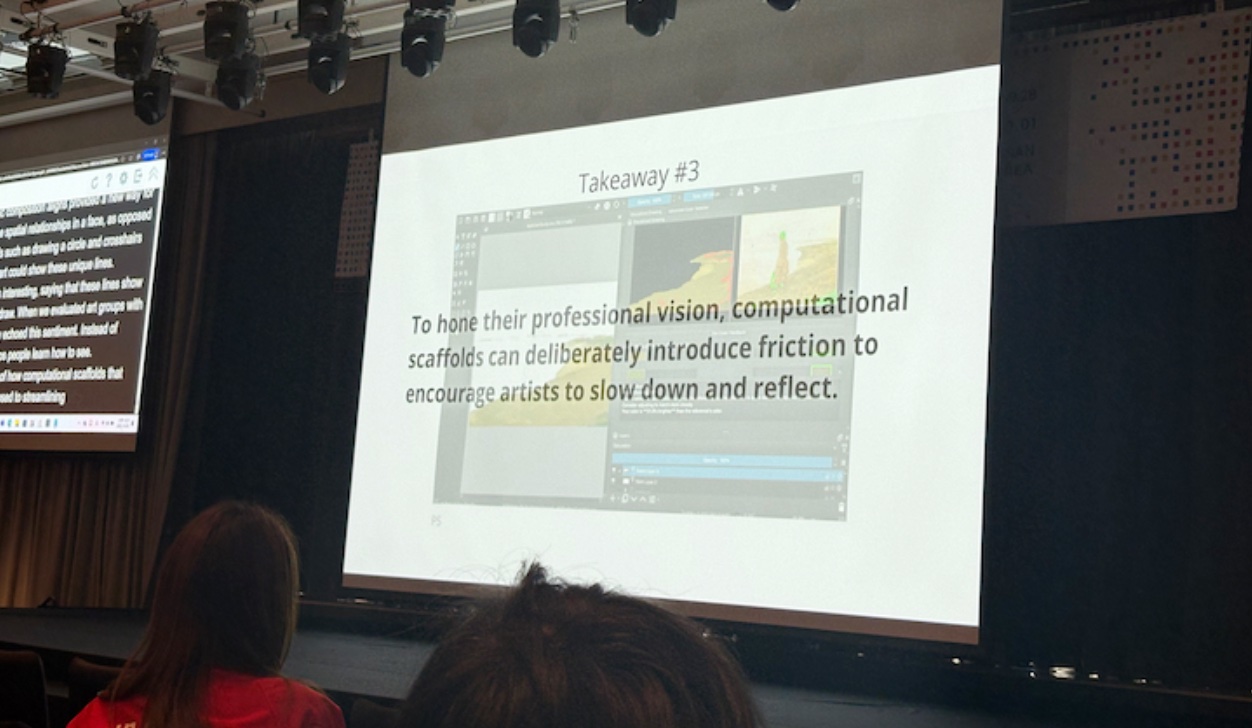

(though not everyone might agree), but it resonated with Igarashi’s point (and many others in the technical program,

e.g., Jiaju Ma et al.’s best paper on computational scaffolding for drawing) on not just “help me create,” but

“help me reflect more deeply, and grow.”

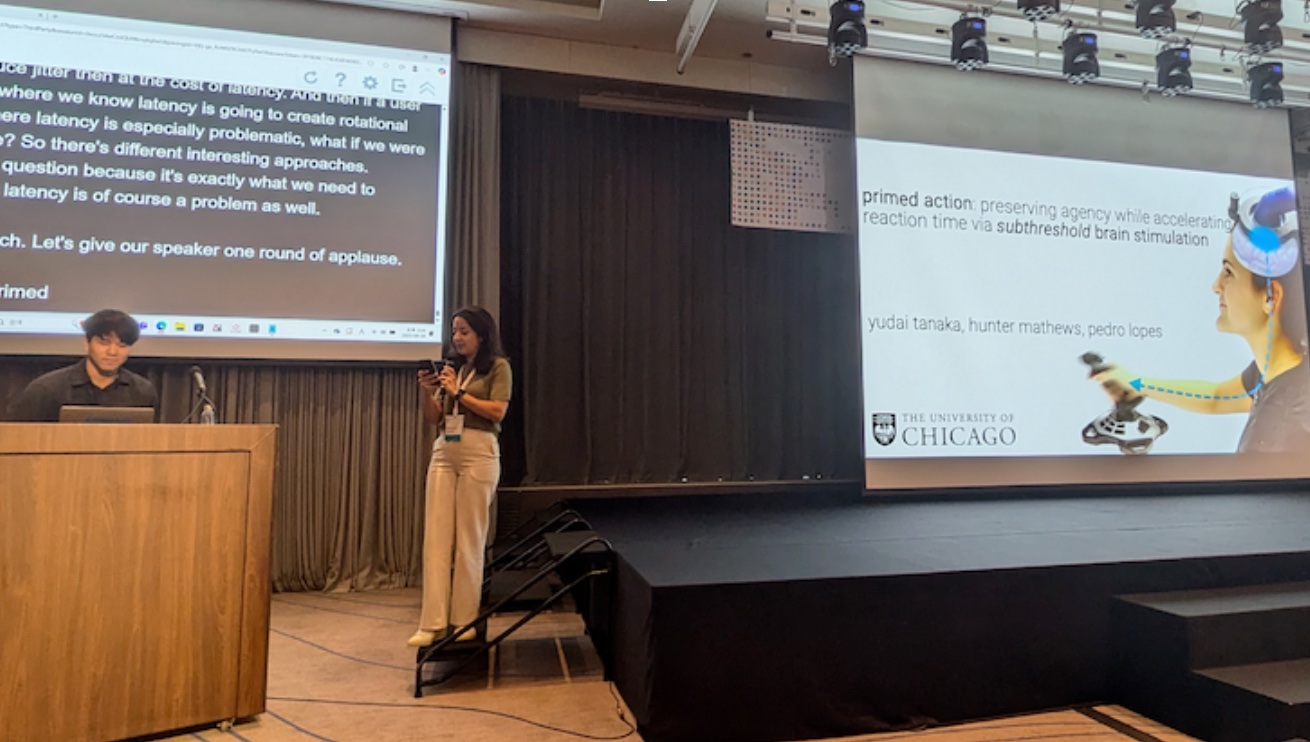

That idea really resonated with what I’ve been exploring myself—for example, in my recent UIST paper on how to design embodied assistance that stays aligned with the user’s intention and voluntary action (Primed Action). And as someone who works more on the device side, I also see this as a broader design principle for interfaces that physically integrate with the body—echoing Pedro’s vision talk as well.

This conference showed how UIST is trying to define its unique direction as a research community in the era of AI. But another equally important quality—the bedrock for everything—is how much people in this community care about sharing their knowledge and inspiring others. I witnessed many great moments where showcases and demos of both hardware and software sparked spontaneous, exciting discussions. I wish I had photo-documented all of them, but here are just a few highlights that I captured with my camera: Vimal Mollyn's talk on EclipseTouch, Kensuke Katori's talk on vestibular stimulation, as well as the aforementioned keynotes from Prof. Woo and Prof. Igarashi.

Finally, what makes UIST special isn’t just the research, but the people who treat knowledge as something to be shared, discussed, and reflected upon rather than produced in quantity. This spirit of openness and curiosity is what keeps the community alive—and what motivates me to keep contributing to it.