Human Computer Integration Lab

Computer Science Department, University of Chicago

We engineer interactive devices that integrate directly with the user's body; we believe these devices are the natural successor to wearable interfaces. One example of our human-computer integration is our work on interactive devices based on electrical muscle stimulation (EMS). These devices electrically actuate the user's body, enabling touch and force feedback in VR or allowing everyday objects to teach their users how they should be operated, and our wearables achieve this without the weight and bulk of conventional robotic exoskeletons. These devices gain their advantages not by adding more technology to the body, but by borrowing parts of the body as input/output hardware. The result is devices that are not only exceptionally small but that also implement a novel interaction model, in which devices integrate directly with the body. We have been able to generalize this concept to new modalities, including novel ways to interface with a user's sense of temperature, smell, touch, and even rich skin sensations.


We think bodily-integrated interactive devices are beneficial because they enable new modes of reasoning with computers, going beyond purely symbolic thinking (reasoning by typing and reading language on a screen). While this physical integration between human and computer is beneficial in many ways (e.g., faster reaction times, realistic simulations in VR/AR, faster skill acquisition), it also raises new challenges, such as improving the precision of muscle stimulation and the question of agency: do we feel in control when our body is integrated with an interface? Together with neuroscientists, we explore these questions by studying how our brain encodes the feeling of agency, in order to improve the design of this new type of integrated interface.

We are also exploring how novel computer interfaces can provide assistance and promote attitude change in critical societal challenges, namely reducing electronic waste, for example by changing our relationship with technology or by pushing forward e-waste reuse via computationally driven recycling.

The Human Computer Integration research lab is led by Prof. Pedro Lopes at the Computer Science Department of the University of Chicago.

Team

Pedro Lopes (CV)

Assoc. Prof.

Yun Ho

PhD student

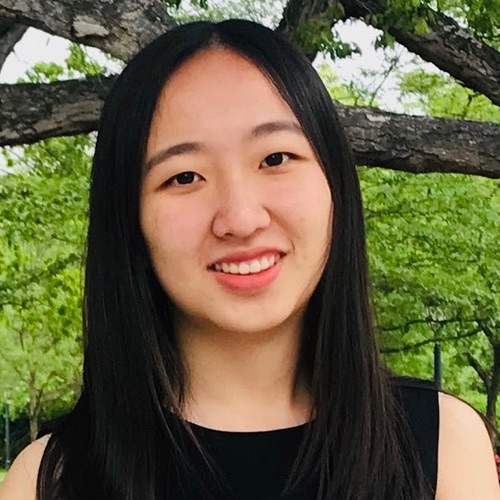

Jasmine Lu

PhD student

Romain Nith

PhD Student

Yudai Tanaka

PhD Student

Wen Li

PhD collab (TianLab)

Lonnie Chien

PreDoc

Mithil Guru

Grad Student at Large

Leon Zhao

PreDoc

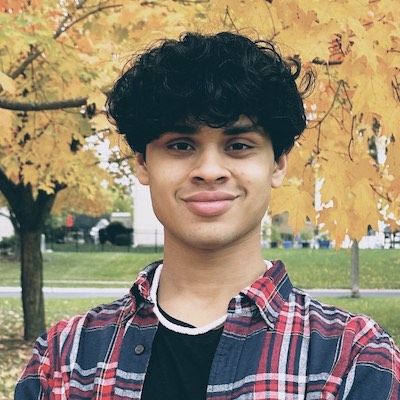

Andre De La Cruz

Ugrad

Kai Lee

Ugrad

Janice Hixton

TA

If you were searching for someone you did not find, perhaps they are one of our alumni. We are an LGBTQ+ ally lab. Want to join our lab? Check out our apply page.

News

Publications

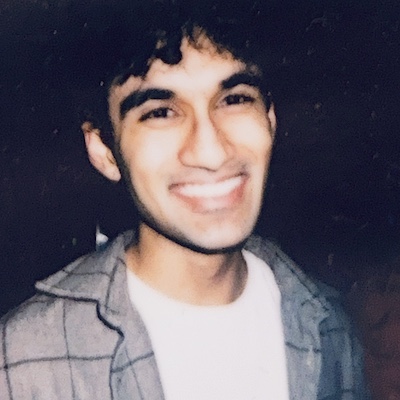

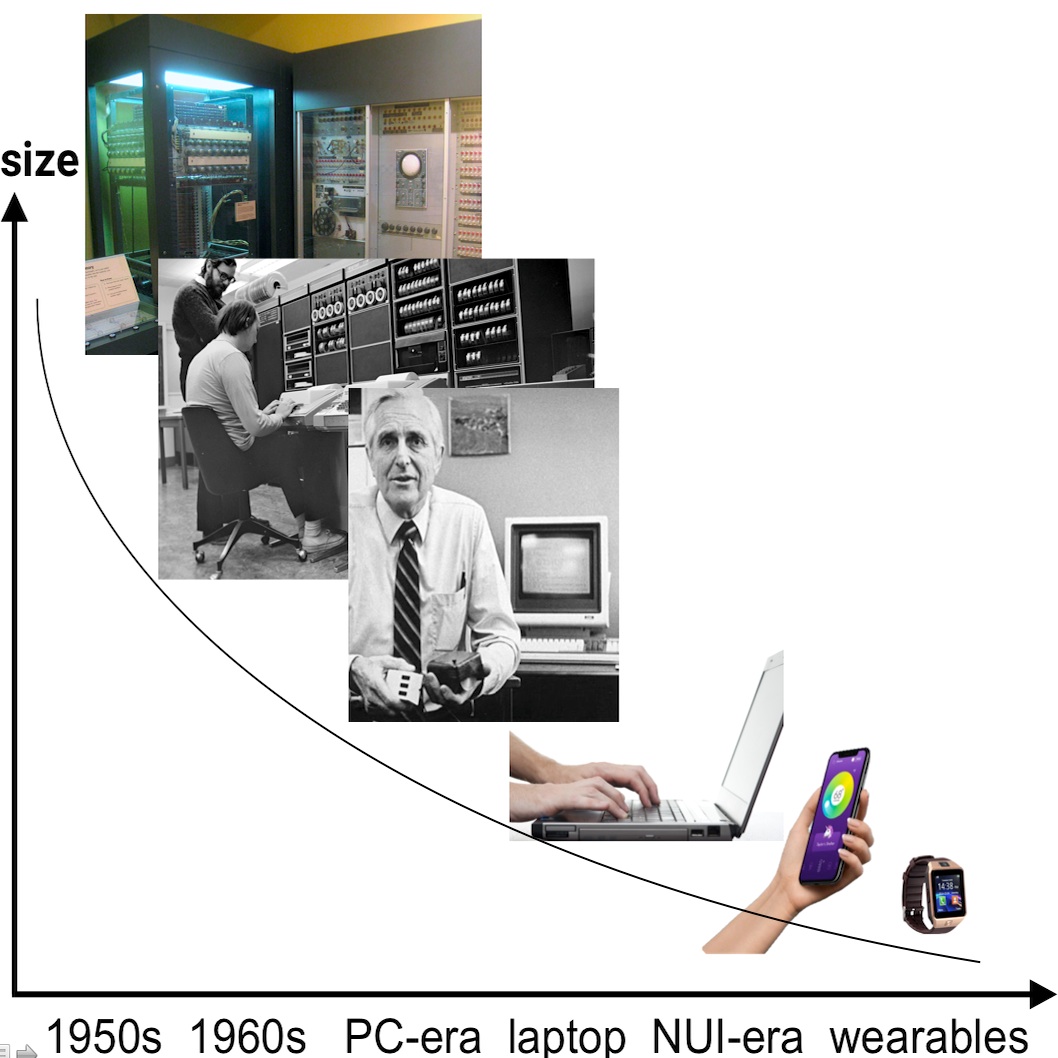

What if the “I” in HCI stands for Integration?

Pedro Lopes. In Proc. UIST'25 (Vision Talk)

In this ACM UIST "Vision Talk" (video), I explore the future of Human-Computer Interaction (HCI) by re-thinking the “I” in HCI as standing for “Integration” rather than “Interaction.” Analyzing the evolution of computing interfaces, from desktop computers to wearables, reveals a trend toward miniaturization and closer integration with the body. I posit a new generation of devices that integrate AI-interfaces with brain/muscle stimulation to provide cognitive/physical assistance in a way that does not feel disempowering, since the user’s body is deeply integrated. This provides a shift towards integrated interfaces that directly assist users’ bodies rather than replacing them with external robots. This challenges us to envision a new type of AI interfaces that integrate with humans, enabling new physical modes of reasoning and self-expression. To this end, I argue the main challenges are (1) preserving the user’s sense of agency (e.g., keeping users in control); (2) avoiding device-dependency (e.g., devices that teach rather than automate); and (3) mapping societal implications.

UIST vision keynote (live talk) UIST'25 Vision Talk Accompanying Paper

Primed Action: Preserving Agency while Accelerating Reaction Time via Subthreshold Brain Stimulation

Yudai Tanaka, Hunter Mathews, Pedro Lopes. In Proc. UIST'25 (paper)

We present Primed Action, a novel interface concept that leverages the faster reactions induced by transcranial magnetic stimulation (TMS). What sets Primed Action apart from prior work that uses muscle stimulation to “force” faster reactions is that our approach operates below the threshold of movement: it does not trigger involuntary motion, but instead “primes” neurons in the motor cortex by enhancing their neural excitability. In our study, Primed Action preserved participants’ sense of agency better than existing interactive approaches based on muscle stimulation (e.g., Preemptive Action). We believe this insight enables new forms of haptic assistance that do not sacrifice agency, which we demonstrate in a set of interactive experiences (e.g., VR sports training).

UIST'25 paper Appendix (deeper analysis) Data/code video UIST talk (live) Slides

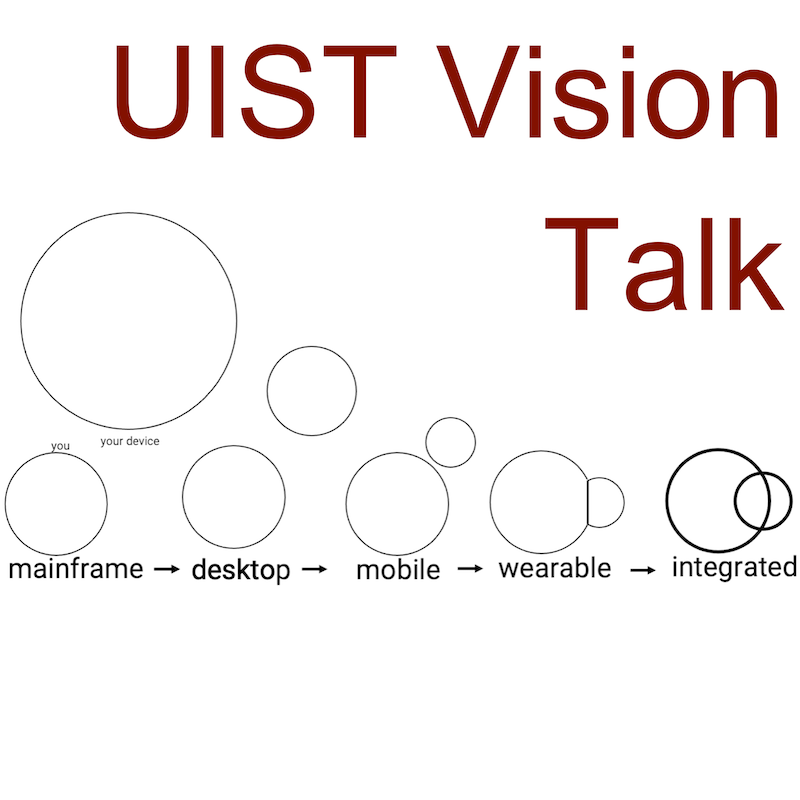

Vestibular Stimulation Enhances Hand Redirection

Kensuke Katori, Yudai Tanaka, Yoichi Ochiai, Pedro Lopes. In Proc. UIST'25 (paper)

We demonstrate how the vestibular system (i.e., the sense of balance) influences the perception of hand position in VR. By exploiting this via galvanic vestibular stimulation (GVS), we can increase how far the user’s hands can be redirected in VR without them noticing it. The trick is that a subtle GVS-induced body sway aligns with the user’s expected body balance during hand redirection, which reduces the sensory conflict between expected and actual body balance. Our user study validated that our approach raises the detection threshold of VR hand redirection by 45–55%. Our approach broadens the applicability of hand redirection (e.g., compressing a VR space into an even smaller physical area).

UIST'25 paper video UIST talk (live) Slides
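For readers new to hand redirection, the sketch below shows one common, generic body-warping formulation: the rendered hand is offset gradually as the real hand travels from a start point toward a physical target, so that it arrives at a virtual target elsewhere. This is an illustration under that assumption, not the GVS technique from the paper; the function name `redirected_hand` and all coordinates are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def redirected_hand(real_hand: Vec3, start: Vec3,
                    physical_target: Vec3, virtual_target: Vec3) -> Vec3:
    """Where to render the virtual hand (generic body-warping sketch)."""
    d_start = math.dist(real_hand, start)             # distance already travelled
    d_target = math.dist(real_hand, physical_target)  # distance still to go
    total = d_start + d_target
    # Blend factor: 0 at the start point, 1 when touching the physical target.
    alpha = 1.0 if total == 0 else d_start / total
    # Full offset that maps the physical target onto the virtual target.
    offset = [v - p for v, p in zip(virtual_target, physical_target)]
    return tuple(h + alpha * o for h, o in zip(real_hand, offset))
```

In this framing, the detection threshold mentioned above roughly bounds how large `offset` can grow before users notice the mismatch; the paper reports that GVS raises that bound.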

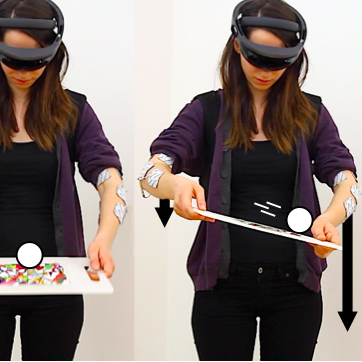

VR Side-Effects: Memory & Proprioceptive Discrepancies After Leaving Virtual Reality

Antonin Cheymol, Pedro Lopes. In Proc. UIST'25 (paper)

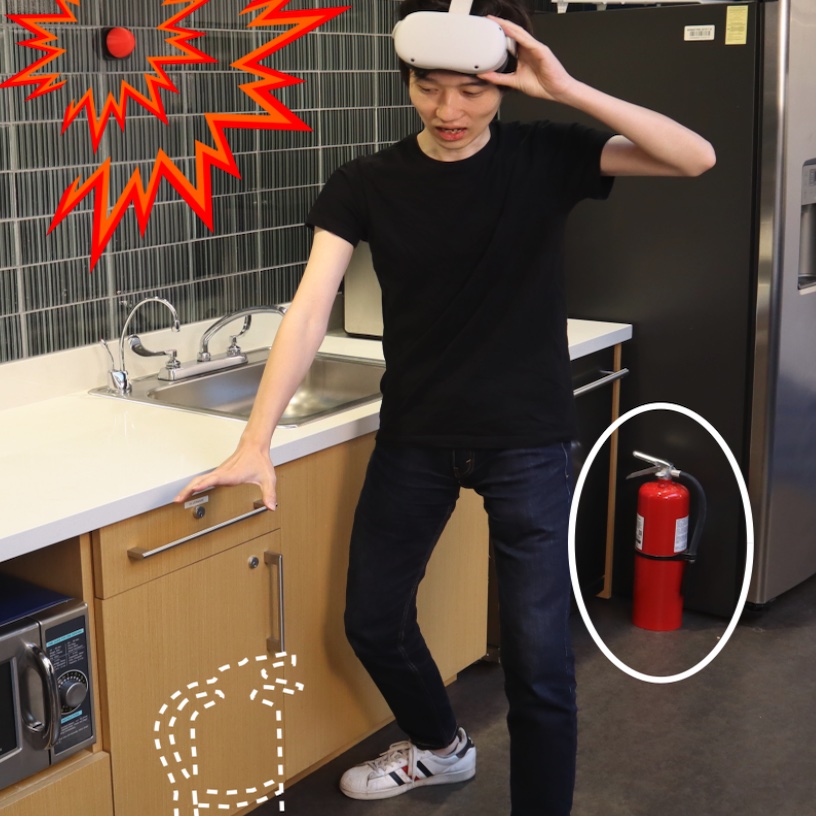

Our brain’s plasticity rapidly adapts our senses in VR, a phenomenon leveraged by techniques such as redirected walking, hand redirection, etc. However, while most of HCI is interested in how users adapt to VR, we turn our attention to how users need to adapt their senses when returning to the real world. We found that, after leaving VR, (1) participants’ hands remained redirected by up to 7 cm, indicating residual proprioceptive distortion; and (2) participants incorrectly recalled the virtual locations of objects rather than their actual real-world locations (e.g., remembering the location of a VR extinguisher even when trying to recall the real one). We discuss how these lingering VR side-effects may pose safety or usability risks.

UIST'25 paper video UIST talk (live) Slides code

Adaptive Electrical Muscle Stimulation Improves Muscle Memory

Siya Choudhary*, Romain Nith*, Yun Ho*, Jas Brooks, Mithil Guruvugari, Pedro Lopes. In Proc. CHI'25 (paper)

* authors contributed equally

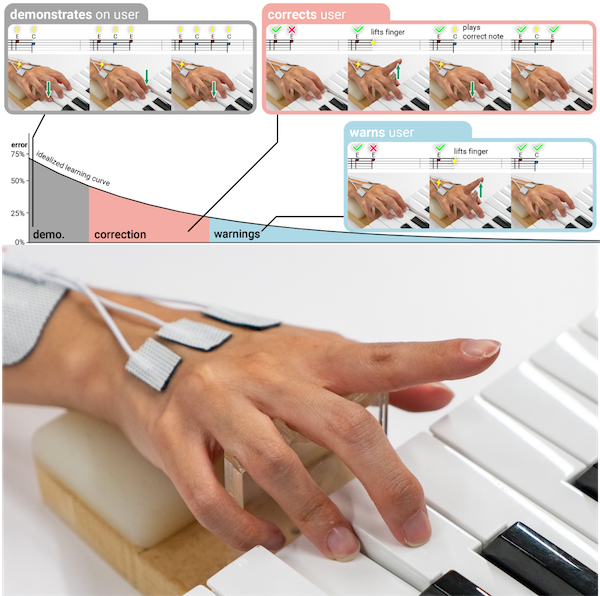

Electrical muscle stimulation (EMS) can assist in learning motor skills. However, existing EMS systems provide static demonstration—actuating the correct movements, regardless of the user’s learning progress. Instead, we propose a novel adaptive-EMS that changes its guidance strategy based on the participant’s performance. The adaptive-EMS dynamically adjusts its guidance: (1) demonstrate by playing the entire sequence when errors are frequent; (2) correct by lifting incorrect fingers and actuating the correct one when errors are moderate; and (3) warn by lifting incorrect fingers when errors are low. We found that adaptive-EMS improved learning outcomes (recall) compared to traditional EMS—leading to improved “muscle memory”.

CHI'25 paper video CHI talk (pre-recorded) CHI talk (live) Slides
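The three-tier adaptive strategy described above can be sketched as a mapping from a recent error rate to a guidance mode. This is a minimal sketch assuming a sliding window of recent key presses; the window size, the two thresholds (0.5 and 0.2), and the names `choose_guidance` and `AdaptiveEMS` are hypothetical placeholders, not values or code from the paper.

```python
from collections import deque


def choose_guidance(error_rate: float, high: float = 0.5, low: float = 0.2) -> str:
    """Pick an EMS guidance mode from an error rate in [0, 1].

    The thresholds are hypothetical placeholders, not values from the paper.
    """
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be in [0, 1]")
    if error_rate >= high:
        return "demonstrate"  # frequent errors: play the entire sequence via EMS
    if error_rate >= low:
        return "correct"      # moderate errors: lift wrong fingers, actuate the right one
    return "warn"             # few errors: only lift the wrong fingers


class AdaptiveEMS:
    """Tracks the last `window` key presses and picks a guidance mode."""

    def __init__(self, window: int = 10, high: float = 0.5, low: float = 0.2):
        self.history = deque(maxlen=window)  # True marks an error
        self.high, self.low = high, low

    def record(self, correct: bool) -> str:
        self.history.append(not correct)
        rate = sum(self.history) / len(self.history)
        return choose_guidance(rate, self.high, self.low)
```

As the learner's recent errors drop out of the window, guidance steps down from demonstrating to correcting to warning, which mirrors the progression described in the abstract.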

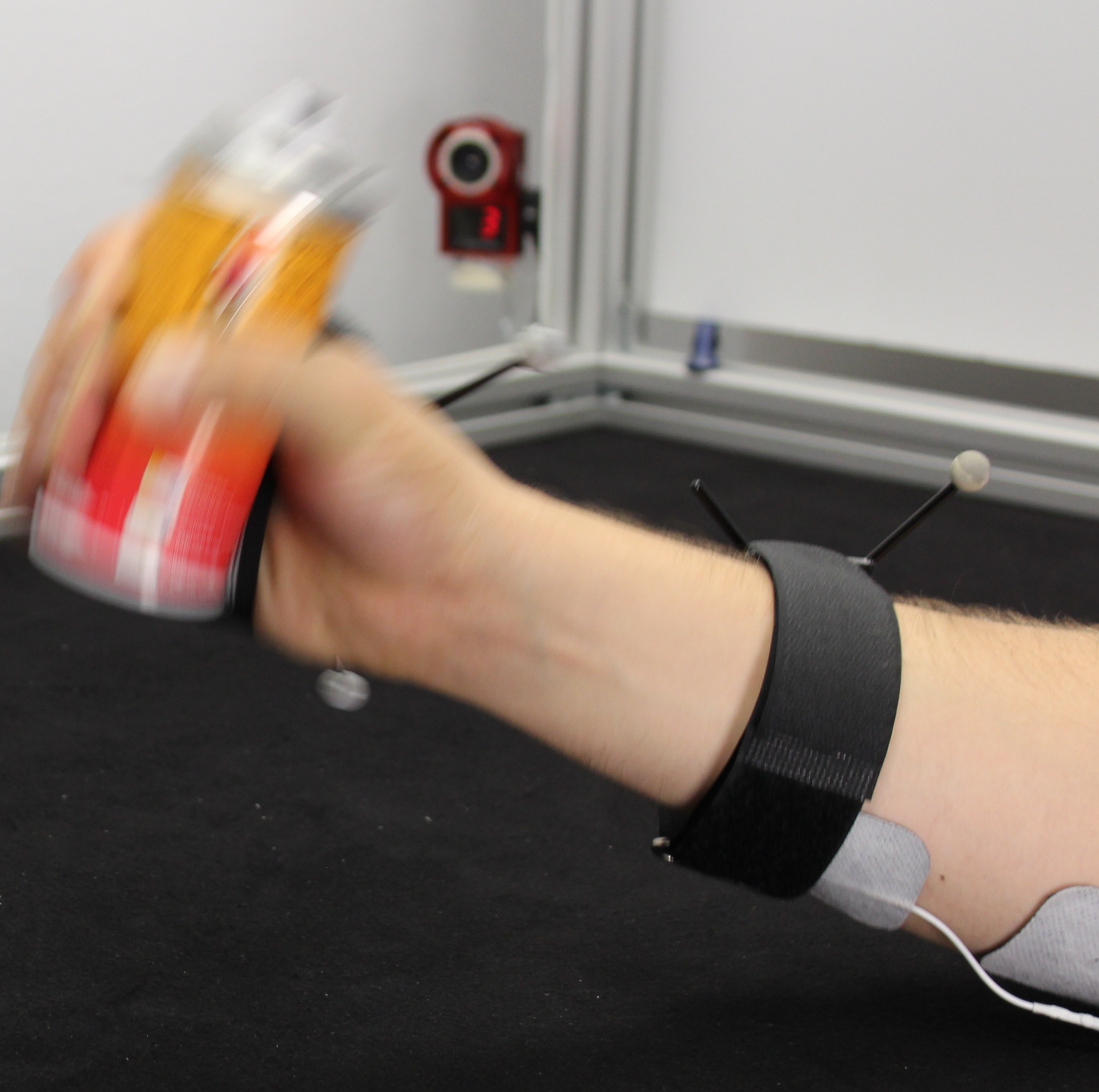

Seeing with the Hands: A Sensory Substitution That Supports Manual Interactions

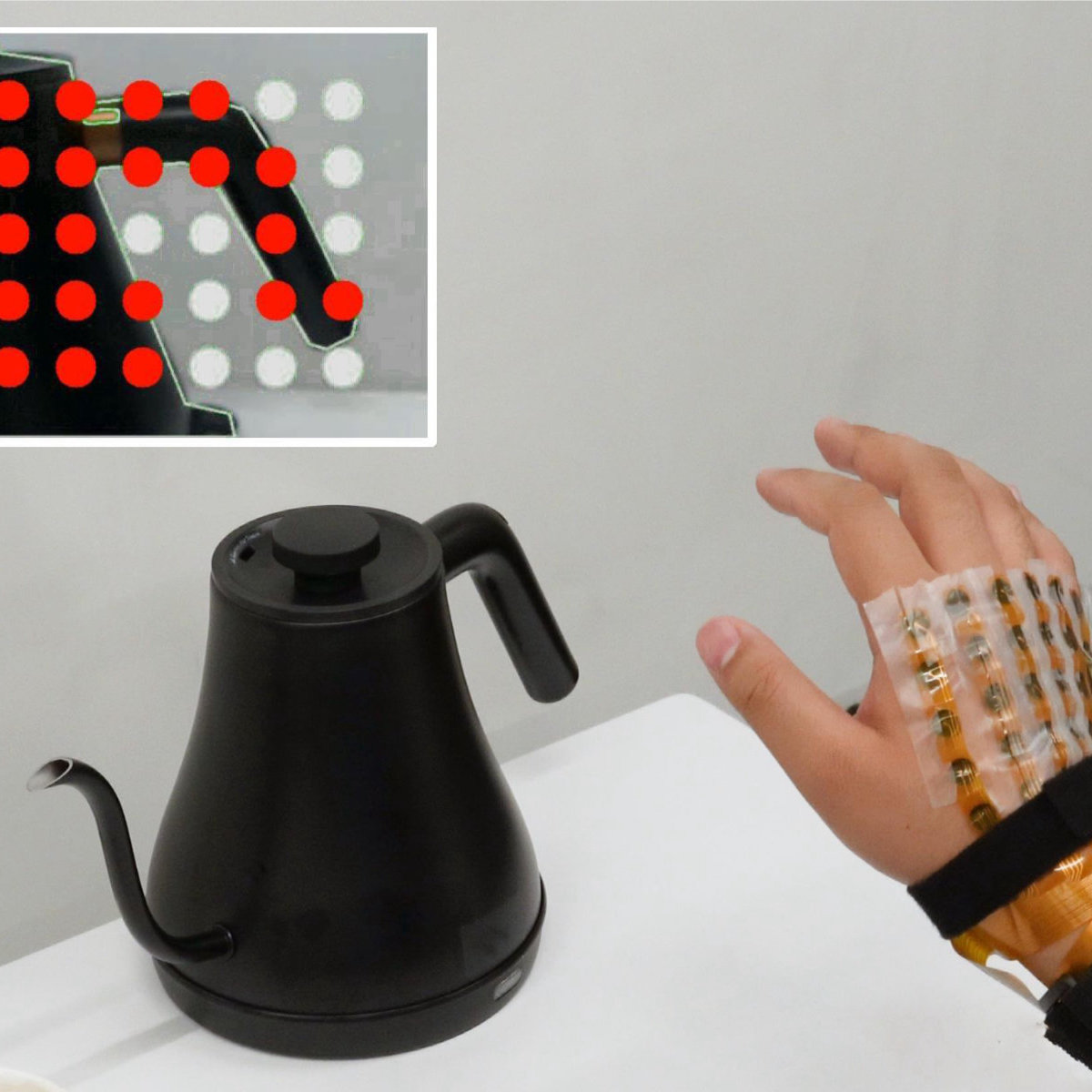

Shan-Yuan Teng*, Gene S-H Kim*, Xuanyou (Zed) Liu*, Pedro Lopes. In Proc. CHI'25 (paper)

* authors contributed equally

Sensory substitution enables perceiving objects by translating one modality (e.g., vision) into another (e.g., touch). Despite much exploration in neuroscience and HCI, the camera’s location remains largely unexplored: it is typically placed at the eyes’ perspective. Instead, we propose that seeing and feeling information from the hands’ perspective enhances the flexibility and expressivity of sensory substitution to support manual interactions with physical objects. We engineered a back-of-the-hand electrotactile display that renders tactile images from a wrist-mounted camera, allowing the user’s hand to feel objects while reaching or hovering. We conducted a study with sighted and Blind-or-Low-Vision participants, who used our eyes vs. hand tactile perspectives to manipulate bottles, soldering irons, and more. All participants found value in using the hands’ perspective, as it enables ergonomic object manipulation.

CHI'25 paper video CHI talk

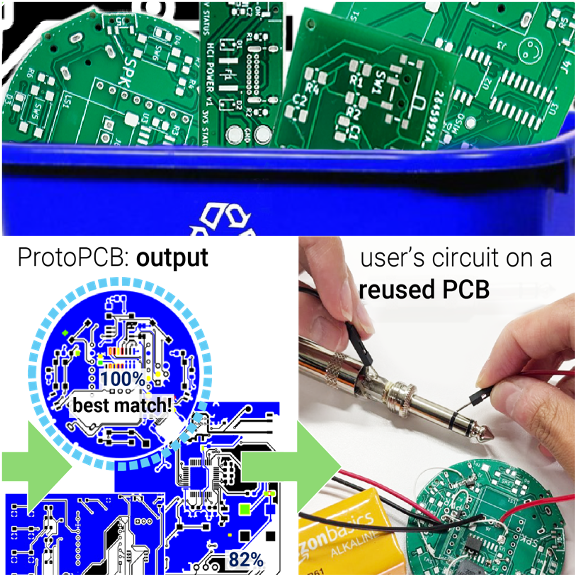

ProtoPCB: Reclaiming Printed Circuit Board E-waste as Prototyping Material

Jasmine Lu, Sai Rishitha Boddu, Pedro Lopes. In Proc. CHI'25 (paper)

We propose an interactive tool that enables reusing printed circuit boards (PCBs) as prototyping material to implement new circuits; this extends the utility of PCBs rather than discarding them as e-waste. Our tool takes a user’s desired circuit schematic and analyzes its components and connections to find ways of creating the user’s circuit on discarded PCBs (e.g., e-waste, old prototypes). In our evaluation, we used our tool across a diverse set of PCBs and input circuits to characterize how often circuits could be implemented on a different board, implemented with minor interventions (trace-cutting or bodge-wiring), or implemented on a combination of multiple boards. This demonstrates how our tool assists with exhaustive matching tasks that a user would be unlikely to perform manually.

CHI'25 paper video CHI talk (pre-recorded) CHI talk (live) Slides code

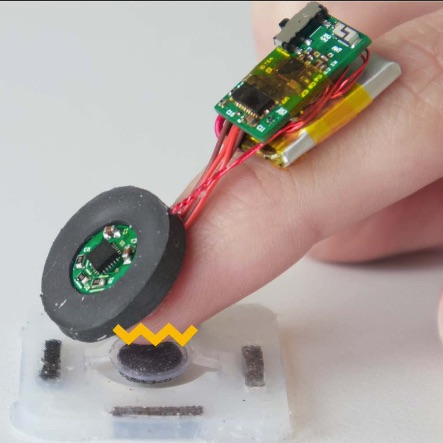

Power-on-Touch: Powering Actuators, Sensors, and Devices during Interaction

Alex Mazursky, Aryan Gupta, Andre de la Cruz, Pedro Lopes. In Proc. CHI'25 (paper)

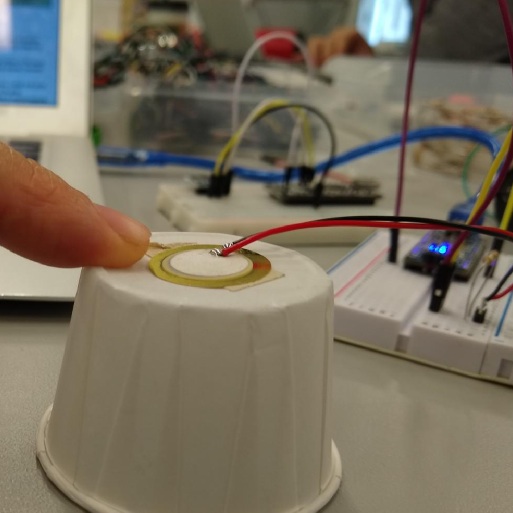

We introduce Power-on-Touch, a novel method for powering devices during interaction. It uses (1) a wearable transmitter attached to the user’s body (e.g., fingernail, back of the hand, feet) and (2) receiver tags embedded in interactive devices, making them battery-free. Many devices only require power during interaction (e.g., TV remotes, digital calipers). We leverage this interactive opportunity by inductively transferring energy from the user’s coil to the device’s coil when the two are in close proximity. We engineered receiver tags and coils, including thin pancake coils best suited for wearables and spherical coils that receive power omnidirectionally. We believe our technical approach can inspire ubiquitous computing with new ways to scale up the number and diversity of battery-free devices, not just sensors (μWatts) but also actuators (Watts).

CHI'25 paper video CHI talk
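Why close proximity matters for inductive powering can be illustrated with a textbook upper bound on two-coil link efficiency (with an optimally matched load), which depends only on the coupling coefficient k and the coils' quality factors Q1 and Q2. This is a generic figure of merit from wireless power transfer, not a formula taken from the Power-on-Touch paper, and the example values are made up. Since k falls off quickly as coils move apart, so does the bound.

```python
import math


def max_link_efficiency(k: float, q1: float, q2: float) -> float:
    """Upper bound on two-coil inductive efficiency with an optimal load.

    eta_max = x / (1 + sqrt(1 + x))**2, where x = k^2 * Q1 * Q2.
    k  : coupling coefficient between the coils (0..1)
    q1 : quality factor of the transmitter coil
    q2 : quality factor of the receiver coil
    """
    if not 0.0 <= k <= 1.0:
        raise ValueError("k must be in [0, 1]")
    x = k * k * q1 * q2  # figure of merit
    return x / (1.0 + math.sqrt(1.0 + x)) ** 2
```

For example, `max_link_efficiency(0.5, 3, 4)` gives x = 3 and an efficiency bound of 3 / 9, about 33%; raising k (bringing the coils closer) raises the bound.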

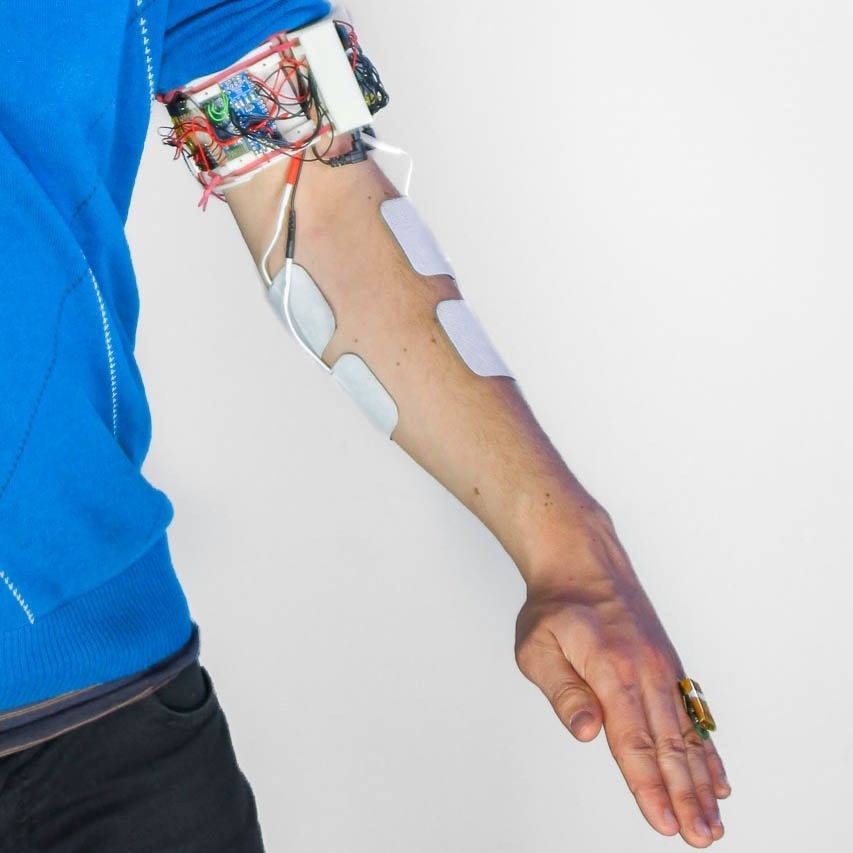

Can a Smartwatch Move Your Fingers? Compact and Practical Electrical Muscle Stimulation in a Smartwatch

Akifumi Takahashi, Yudai Tanaka, Archit Tamhane, Alan Shen, Shan-Yuan Teng, Pedro Lopes. In Proc. UIST'24 (full paper)

UIST honorable mention for best paper award

Smartwatches have gained mainstream popularity, making them today’s de facto wearables. Despite advancements in sensing, haptics on smartwatches are still restricted to simple vibrations, as most smartwatch-sized actuators cannot render strong force feedback. Electrical muscle stimulation (EMS), meanwhile, promises compact force feedback, but actuating the fingers requires users to wear many electrodes on their forearms, which keeps EMS from being a practical force-feedback interface. To address this, we propose moving the electrodes to the wrist, conveniently packing them into the backside of a smartwatch. We engineered a compact EMS that integrates directly into a smartwatch’s wristband (with a custom stimulator, electrodes, demultiplexers, and communication).

UIST'24 paper video UIST talk (live)

Augmented Breathing via Thermal Feedback in the Nose

Jas Brooks, Alex Mazursky, Janice Hixon, Pedro Lopes. In Proc. UIST'24 (full paper)

We propose a novel method to augment the feeling of breathing, enabling interactive applications to let users feel like they are inhaling more or less air (perceived nasal airflow). We achieve this effect by cooling or heating the nose in sync with the user’s inhalation. Our illusion builds on the physiology of breathing: we perceive our breath predominantly through the cooling of our nasal cavities during inhalation. This work closes the I/O loop on breathing, which was previously only an input modality but can now also be used for output (e.g., in VR, meditation, mask relief, etc.).

UIST'24 paper video UIST talk (live)

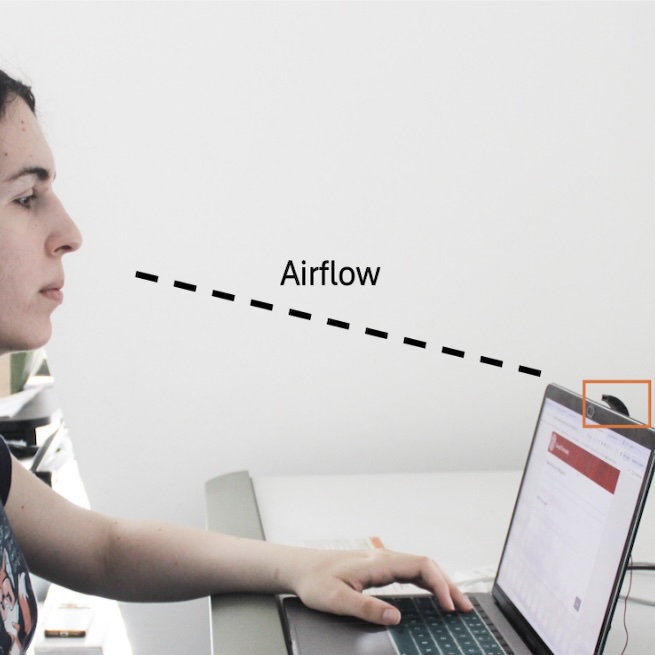

BreathePulse: Peripheral Guided Breathing via Implicit Airflow Cues for Information Work

Tan Gemicioglu, Thalia Viranda, Yiran Zhao, Olzhas Yessenbayev, Jatin Arora, Jane Wang, Pedro Lopes, Alexander T. Adams, Tanzeem Choudhury. In Proc. IMWUT'24 (Ubicomp) (full paper)

Guided slow breathing is a promising intervention for stress and anxiety, and peripheral breathing guides are being explored for use during concurrent tasks. However, their need for explicit user engagement underscores the need for more seamless, implicit interventions optimized for workplaces. We examined the feasibility and effects of BreathePulse, a laptop-mounted device that delivers pulsing airflow to the nostrils as an implicit cue, on stress, anxiety, affect, and workload. We found that participants' breathing rates aligned with BreathePulse's guidance across tasks, with the longest maintenance of slow breathing. This work was a collaboration led by Tanzeem Choudhury and her team at Cornell.

IMWUT'24 paper

Haptic Source-effector: Full-body Haptics via Non-invasive Brain Stimulation

Yudai Tanaka, Jacob Serfaty, Pedro Lopes. In Proc. CHI'24 (full paper) and UIST 2024 live demonstration

CHI honorable mention for best paper award (top 5%)

We propose a novel concept for haptics in which one centralized on-body actuator renders haptic effects on multiple body parts by stimulating the brain, i.e., the source of the nervous system—we call this a haptic source-effector, as opposed to the traditional wearables’ approach of attaching one actuator per body part (end-effectors). We implement our concept via transcranial-magnetic-stimulation. Our approach renders ∼15 touch/force-feedback sensations throughout the body (e.g., hands, arms, legs, feet, and jaw—which we found in our user study), all by stimulating the user’s sensorimotor cortex with a single magnetic coil moved mechanically across the scalp.

CHI'24 paper video CHI talk (pre-recorded) CHI talk (live) code

SplitBody: Reducing Mental Workload while Multitasking via Muscle Stimulation

Romain Nith, Yun Ho, Pedro Lopes. In Proc. CHI'24 (full paper)

CHI best paper award (top 1%)

Electrical muscle stimulation (EMS) offers promise in assisting physical tasks by automating movements (e.g., shaking a spray can that the user is using). However, these systems improve the performance of a task that users are already focusing on (e.g., users are already focused on the spray can). Instead, we investigate whether muscle stimulation offers benefits when it automates a task that happens in the background of the user’s focus. We found that automating a repetitive movement via EMS can reduce mental workload while users perform parallel tasks (e.g., focusing on writing an essay while EMS stirs a pot of soup).

CHI'24 paper video CHI talk (pre-recorded) CHI talk (live) code Slides

Haptic Permeability: Adding Holes to Tactile Devices Improves Dexterity

Shan-Yuan Teng, Aryan Gupta, Pedro Lopes. In Proc. CHI'24 (full paper)

To minimize the encumbrance of tactile devices on the fingerpads, researchers moved away from thick actuators (e.g., vibration motors) and instead focused on thin electrotactile stimulation. However, we argue that just making thin electrodes is not enough; one should also balance how much a tactile device impairs feeling the real world against how accurately it delivers virtual sensations. Thus, we propose adding holes to electrotactile devices, which results in (1) improved tactile perception and (2) improved force control in grasping. Our approach significantly improves users’ abilities in dexterous activities in mixed reality, including manipulating tools.

CHI'24 paper

Stick&Slip: Altering Fingerpad Friction via Liquid Coatings

Alex Mazursky, Jacob Serfaty, and Pedro Lopes. In Proc. CHI'24 (full paper)

We present Stick&Slip, a novel approach that alters friction between the fingerpad & surfaces by depositing liquid droplets that coat the fingerpad—this liquid modifies the finger’s coefficient of friction, ±60% more slippery or sticky. We selected fluids to rapidly evaporate so that the surface returns to its original friction. Unlike traditional friction-feedback, such as electroadhesion or vibration, our approach: (1) alters friction on a wide range of surfaces and geometries, making it possible to modulate nearly any non-absorbent surface; (2) scales to many objects without requiring instrumenting the target surfaces (e.g., with conductive electrode coatings or vibromotors); and (3) both in/decreases friction via a single device.

CHI'24 paper video CHI talk (pre-recorded) CHI talk (live)

Unmaking electronic waste

Jasmine Lu, Pedro Lopes. In Proc. TOCHI'24 (full paper)

E-waste is the fastest-growing consumer waste stream worldwide, yet it is an issue that is often ignored. In fact, HCI primarily focuses on designing and understanding interactions during only one segment of a device's lifecycle: while it is in use. Researchers overlook a significant space, namely when devices are no longer “useful” (e.g., due to breakdown or obsolescence). We argue that HCI can learn from experts who upcycle e-waste for electronics projects, art, and more. To acquire this knowledge, we interviewed experts who unmake e-waste. We explore their practices through the lens of unmaking, both when devices are physically unmade and when the perception of e-waste is unmade once waste becomes, once again, useful.

TOCHI'24 paper

Designing Plant-Driven Actuators for Robots to Grow, Age, and Decay

Yuhan Hu, Jasmine Lu, Nathan Scinto-Madonich, Miguel Alfonso Pineros, Pedro Lopes, Guy Hoffman. In Proc. DIS'24 (full paper)

By proposing plant-driven actuators (instead of traditional mechanical motors), we explore a new type of device that grows, ages, and decays. These actuators allow interactive devices, such as robots, to embody these organic qualities in their physical structure. Plant actuators that grow and decay incorporate the unpredictable and gradual transformations inherent in living organisms and stand as an alternative to the typical design principles of immediacy, responsiveness, control, accuracy, and durability commonly found in robotic design. This work was a collaboration led by Guy Hoffman and his team at Cornell.

DIS'24 paper DIS talk (live)

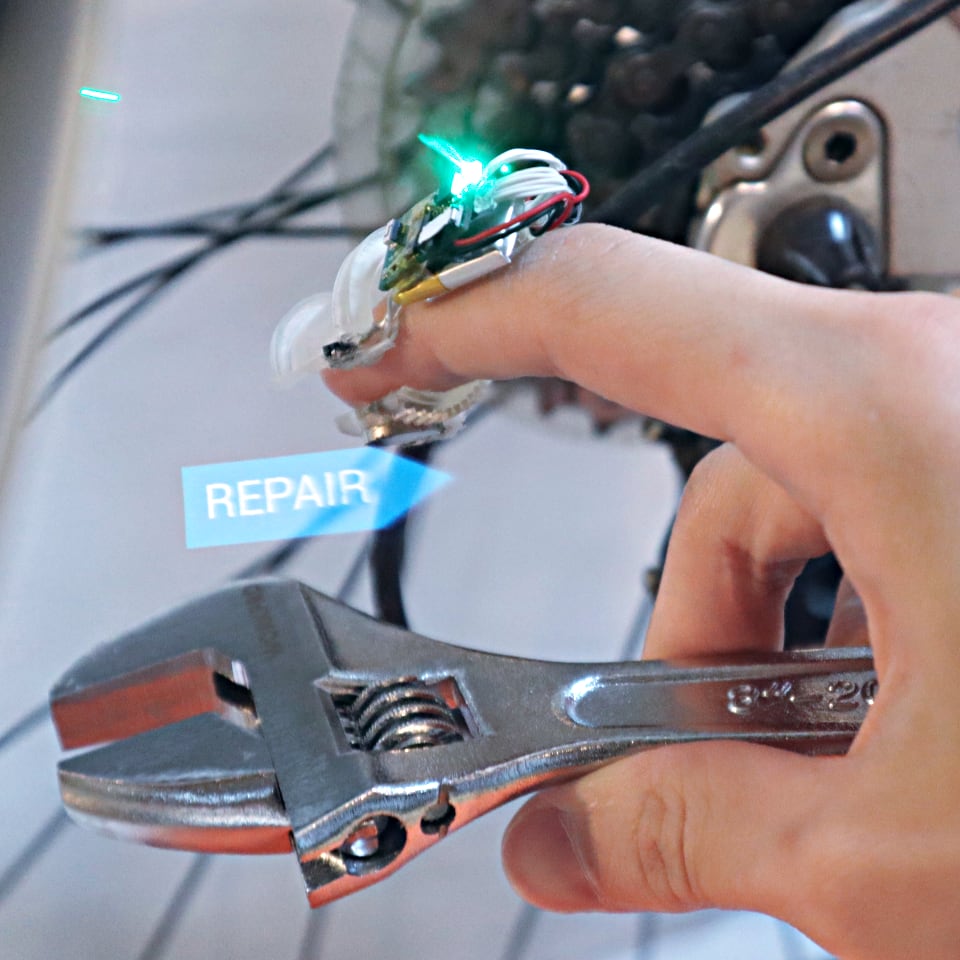

Re-Envisioning the Role of a User in Sustainable Computing

Jasmine Lu, Pedro Lopes. In Proc. IEEE Pervasive Computing'24 (full paper)

In this thought-piece we argue that there are other roles we can take with regards to our technology; essentially, we argue people can be more than just users. To us this is important because as computing becomes pervasive in our lives, more and more e-waste is generated. Exploring new roles for users is thus essential for transitioning toward a more sustainable future in computing. To this end, we argue that the envisioned roles we attribute to users during user-centered design should encompass much more—we can design for user roles such as maintainers, repairers, or even recyclers of interactive devices.

IEEE Pervasive Computing'24 paper

ThermalGrasp: Enabling Thermal Feedback even while Grasping and Walking

Alex Mazursky, Jas Brooks, Beza Desta, Pedro Lopes. In Proc. VR'24 (full paper)

Thermal interfaces typically attach Peltiers, heatsinks and fans directly to the palm or sole, preventing users from grasping or walking. Instead, we present ThermalGrasp, an engineering approach for wearable thermal interfaces that enables users to grab and walk on real objects with minimal obstruction. ThermalGrasp moves Peltiers/cooling to areas not used in grasping or walking (e.g., dorsal hand/foot). We then use thin, compliant materials to conduct heat to/from the palm or sole. Using this approach, a user can, for example, grasp a passive prop (e.g., a stick that acts as a torch in VR), yet feel its thermal state (e.g., hot due to its flame).

VR'24 paper video VR talk video

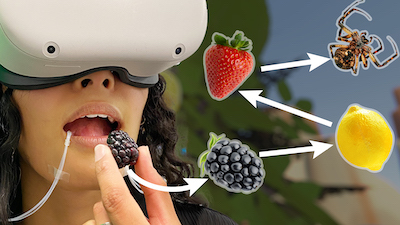

Taste Retargeting via Chemical Taste Modulators

Jas Brooks, Noor Amin, Pedro Lopes. In Proc. UIST'23 (full paper)

UIST Honorable mention award for Demo (Jury's choice)

Taste retargeting selectively changes taste perception using taste modulators: chemicals that temporarily alter the response of taste receptors to foods and beverages. As our technique can deliver droplets of modulators before eating or drinking, it is the first interactive method to selectively alter the basic tastes of real foods without obstructing eating or affecting the food’s consistency. It can be used, for instance, to enable a single food prop to stand in for many virtual foods. For example, it can transform a pickled blackberry into a lemon (by decreasing sweetness with lactisole), a strawberry (by turning sourness into sweetness with miraculin), and much more.

ecoEDA: Recycling E-waste During Electronics Design

Jasmine Lu, Beza Desta, K.D. Wu, Romain Nith, Joyce Passananti, Pedro Lopes. In Proc. UIST'23 (full paper)

UIST Honorable mention award

The amount of e-waste generated by discarding devices is enormous. However, inside a discarded device you can find dozens to thousands of reusable components (e.g., microcontrollers, sensors, etc). Despite this, existing electronic design tools assume users will buy all components anew. To tackle this, we propose ecoEDA, an interactive tool that enables electronics designers to explore recycling electronic components during the design process. It accomplishes this by giving suggestions of which components to recycle based on a library of the user's own devices (discarded, found, broken, etc).

UIST'23 paper video code (install it on your KiCAD) UIST talk video
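To make the idea of suggestions "based on a library of the user's own devices" concrete, here is a rough sketch of one possible lookup: match a needed schematic part against salvaged parts by type, value, and footprint, and rank donor devices by how many matching parts they hold. The data model (`Part`), the exact-match rule, the ranking, and `suggest_donors` are all hypothetical illustrations, not ecoEDA's actual code (which is a KiCad plugin).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Part:
    kind: str       # e.g. "resistor", "microcontroller"
    value: str      # e.g. "10k", "ATmega328P"
    footprint: str  # e.g. "0805", "TQFP-32"


def suggest_donors(needed: Part, library: dict[str, list[Part]]) -> list[str]:
    """Return devices holding an exact match, most matching parts first.

    `library` maps a device name (discarded, found, broken, ...) to the
    parts that could be salvaged from it. Ties are broken alphabetically.
    """
    counts = {device: parts.count(needed) for device, parts in library.items()}
    donors = [device for device, n in counts.items() if n > 0]
    return sorted(donors, key=lambda device: (-counts[device], device))
```

Exact matching is the simplest possible rule; a real tool would plausibly also consider compatible substitutes, which this sketch does not attempt.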

Interactive Benefits from Switching Electrical to Magnetic Muscle Stimulation

Yudai Tanaka, Akifumi Takahashi, Pedro Lopes. In Proc. UIST'23 (full paper)

After 10 years of working on electrical muscle stimulation, we wanted to take an introspective look and uncover any benefits gained by switching from electrical (EMS) to magnetic muscle stimulation (MMS). While much ink has been spilled about the advantages of EMS, little work has investigated circumventing its key limitations: its electrical impulses cause an uncomfortable "tingling" sensation, and it relies on pre-gelled electrodes, which require direct contact with the user’s skin and dry up quickly. To tackle these limitations, we study force feedback based on magnetic muscle stimulation, which we found reduces uncomfortable tingling and enables stimulation over clothing.

UIST'23 paper video code UIST talk video

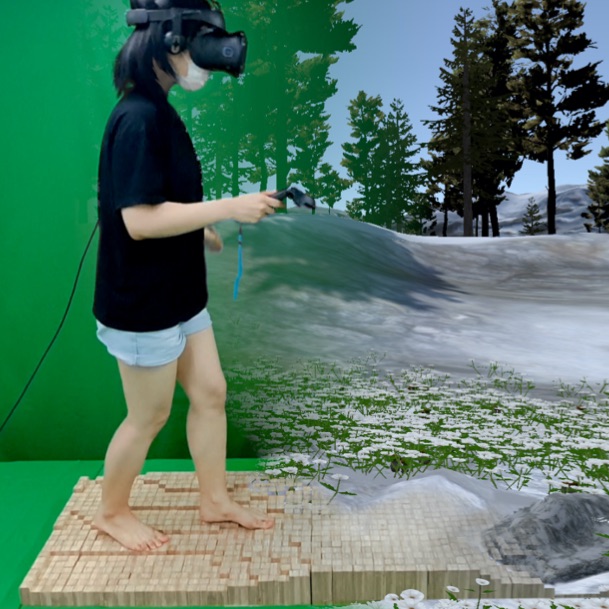

FeetThrough: Electrotactile Foot Interface that Preserves Real-World Sensations

Keigo Ushiyama, Pedro Lopes. In Proc. UIST'23 (full paper)

Most haptic interfaces for the feet use mechanical actuators (e.g., vibration motors), which we argue are not ideal for the job. Instead, we show that electrotactile stimulation is a powerful haptic interface for the feet: (1) users feel not only the stimulation but also the terrain under their feet, which is critical for safely balancing on uneven terrain; (2) while a single vibrotactile actuator also vibrates the surrounding skin, electrotactile stimulation feels more localized; and (3) it can be applied directly to soles, insoles, or socks, enabling new applications such as barefoot interactive experiences (e.g., yoga posture correction) or guidance without the need for special shoes (e.g., for low-vision users).

UIST'23 paper video code UIST talk video

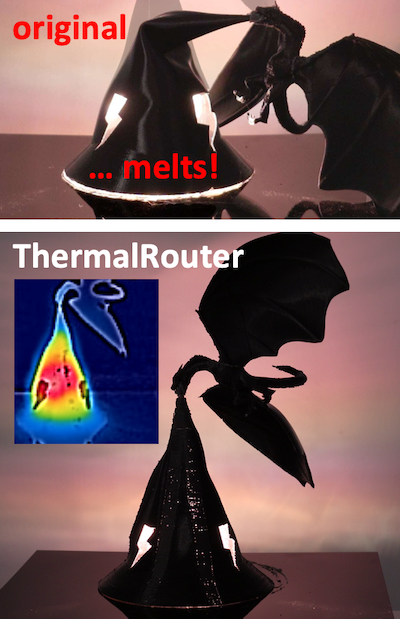

ThermalRouter: Enabling Users to Design Thermally-Sound Devices

Alex Mazursky, Borui Li, Shan-Yuan Teng, Daria Shifrina, Joyce E. Passananti, Svitlana Midianko, Pedro Lopes. In Proc. UIST'23 (full paper)

Users often 3D model enclosures that interact with heat sources (e.g., CPUs, motors, lamps). While parts made by novices might function well aesthetically or structurally, they are rarely thermally-sound, because heat transfer is non-intuitive. To tackle this, we developed ThermalRouter, a CAD plugin that assists users in improving the thermal performance of their models. ThermalRouter automatically converts regions of the model to be made from thermally-conductive materials (such as nylon or metallic-silicone). These regions act as heat channels, branching away from hotspots to dissipate heat. The key is that ThermalRouter automatically simulates the thermal performance of many possible heat-channel configurations and presents the user with the most thermally-sound design (e.g., the one with the lowest temperature).

UIST'23 paper video UIST talk video code
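The selection step described above (simulate many heat-channel configurations, present the coolest) amounts to an argmin over candidates. The sketch below deliberately swaps ThermalRouter's real thermal simulation for a crude lumped model in which the hotspot temperature is ambient plus power times thermal resistance, and each conductive channel adds a parallel path to ambient. The model, its parameters (5 W, 25 °C, 20 K/W), and the function names are hypothetical.

```python
from typing import Callable, Sequence

Config = tuple[float, ...]  # thermal resistance (K/W) of each heat channel


def lumped_hotspot_temp(channels: Config, power_w: float = 5.0,
                        ambient_c: float = 25.0, base_r: float = 20.0) -> float:
    """Crude steady-state estimate: each channel is a parallel path to ambient."""
    conductance = 1.0 / base_r + sum(1.0 / r for r in channels)  # W/K
    return ambient_c + power_w / conductance


def most_thermally_sound(
    candidates: Sequence[Config],
    simulate: Callable[[Config], float] = lumped_hotspot_temp,
) -> tuple[Config, float]:
    """Simulate every candidate; return the one with the lowest temperature."""
    scored = [(simulate(config), config) for config in candidates]
    best_temp, best = min(scored)
    return best, best_temp
```

With the defaults, no channels gives 125 °C, one 10 K/W channel about 58 °C, and two such channels 45 °C, so the two-channel design is returned. Passing a different `simulate` callable is where a real finite-element solver would plug in.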

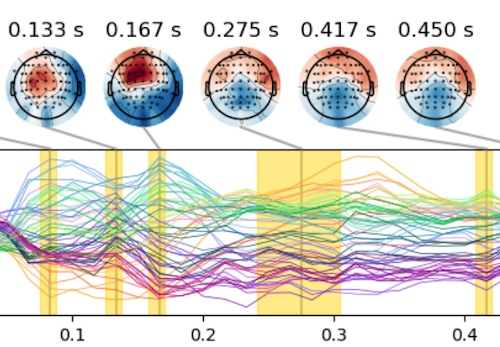

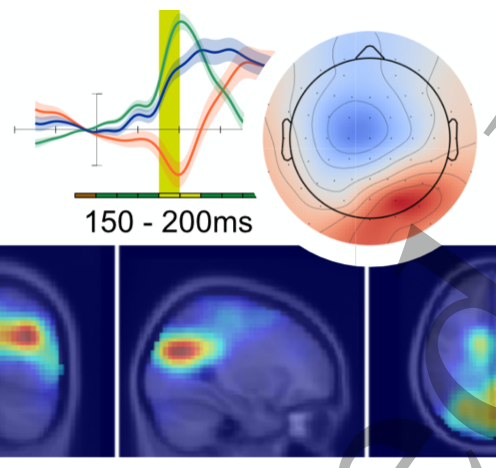

Temporal Dynamics of Brain Activity Predicting Sense of Agency over Muscle Movements

John P. Veillette, Pedro Lopes, Howard C. Nusbaum. In Proc. Journal of Neuroscience'23 (full paper)

We investigate the time course of neural activity that predicts the sense of agency over electrically actuated movements. We find evidence of two distinct neural processes: a transient sequence of patterns that begins in the early sensorineural response to muscle stimulation, and a later, sustained signature of agency. This work is part of our lab's exploration of how future interactive devices need to be designed to prioritize the user's sense of agency; read more on our project's page (we have six other papers on this topic).

Jneuro'23 paper code

Full-hand Electro-Tactile Feedback without Obstructing Palmar Side of Hand

Yudai Tanaka, Alan Shen, Andy Kong, Pedro Lopes. In Proc. CHI'23 (full paper)

CHI best paper award (top 1%)

This technique renders tactile feedback to the palmar side of the hand while keeping it unobstructed and, thus, preserving manual dexterity. We implement this by applying electro-tactile stimulation without any electrodes on the palmar side, yet that is where tactile sensations are felt. This creates tactile sensations on 11 different places of the palm and fingers while keeping the palms free for dexterous manipulations (e.g., VR with props, AR with tools and more).

CHI'23 paper video CHI talk video hardware files

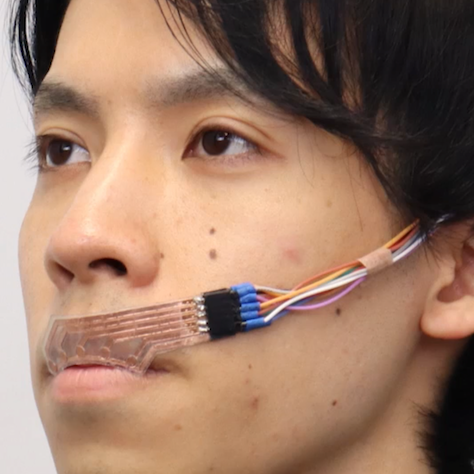

LipIO: Enabling Lips as both Input and Output Surface

Arata Jingu, Yudai Tanaka, Pedro Lopes. In Proc. CHI'23 (full paper)

LipIO enables the lips to be used simultaneously as an input and output surface. It is made from two overlapping flexible electrode arrays: an outward-facing array for capacitive touch and a lip-facing array for electrotactile stimulation. While wearing LipIO, users feel the interface's state via lip stimulation and respond by touching their lip with their tongue or opposing lip. More importantly, LipIO provides co-located tactile feedback that allows users to feel where in the lip they are touching—this is key to enabling eyes- and hands-free interactions. We tend to think of LipIO as an extreme user interface for situations in which the user's eyes, hands and even ears are already occupied with a primary task. We show, for instance, how a user could make use of LipIO to control an interface while biking or while playing guitar.

CHI'23 paper video CHI talk video hardware files

JumpMod: Haptic Backpack that Modifies Users' Perceived Jump

Romain Nith, Jacob Serfaty, Samuel Shatzkin, Alan Shen, Pedro Lopes. In Proc. CHI'23 (full paper)

To enable interactive experiences to feature jump-based haptics without sacrificing wearability, we propose JumpMod, an untethered backpack that modifies one's sense of jumping. JumpMod achieves this by moving a weight up/down along the user's back, which modifies perceived jump momentum—creating accelerated and decelerated jump sensations. In our second study, we empirically found that our device can render five effects: jump higher, land harder/softer, pulled higher/lower. Based on these, we designed four jumping experiences for VR and sports. Finally, in our third study, we found that participants preferred wearing our device in an interactive context, such as one of our jump-based VR applications.

CHI'23 paper video CHI talk (pre-recorded) CHI talk (live) SIGGRAPH (fun demo clip!) hardware

Smell & Paste: Low-Fidelity Prototyping for Olfactory Experiences

Jas Brooks, Pedro Lopes. In Proc. CHI'23 (full paper)

Low-fidelity prototyping is foundational to Human-Computer Interaction, appearing in most early design phases. So, how do experts prototype olfactory experiences? We interviewed eight experts and found that they do not, because no process supports this. Thus, we engineered Smell & Paste, a low-fidelity prototyping toolkit. Designers assemble olfactory proofs-of-concept by pasting scratch-and-sniff stickers onto a paper tape. Then, they test the interaction by advancing the tape in our 3D-printed (or cardboard) cassette, which releases the smells via scratching.

CHI'23 paper video CHI talk video Print your own kit!

Affective Touch as Immediate and Passive Wearable Intervention

Yiran Zhao, Yujie Tao, Grace Le, Rui Maki, Alexander Adams, Pedro Lopes, and Tanzeem Choudhury. In Proc. UbiComp'23 (IMWUT) (full paper)

We investigated affective touch as a new pathway to passively mitigate in-the-moment anxiety. While existing mobile interventions offer great promise for health and well-being, they typically focus on achieving long-term effects, such as shifting behaviors, and thus cannot provide immediate help, e.g., when a user experiences a high level of anxiety. To this end, we engineered a wearable device that renders a soft stroking sensation on the user's forearm. Our results showed that participants who received affective touch experienced lower state anxiety and the same physiological stress response level compared to the control group participants. This work was a collaboration led by Tanzeem Choudhury and her team at Cornell.

Ubicomp'23 (IMWUT) paper Ubicomp/IMWUT talk live

Integrating Living Organisms in Devices to Implement Care-based Interactions

Jasmine Lu, Pedro Lopes. In Proc. UIST'22 (full paper)

Finalist, Fast Company Innovation by Design Awards

We explore how embedding a living organism, as a functional component of a device, changes the user-device relationship. In our concept, the user is responsible for providing an environment that the organism can thrive in by caring for the organism. We instantiated this concept as a slime mold integrated smartwatch. The slime mold grows to form an electrical wire that enables a heart rate sensor. The availability of the sensing depends on the slime mold's growth, which the user encourages through care. If the user does not care for the slime mold, it enters a dormant stage and is not conductive. The users can resuscitate it by resuming care.

UIST'22 paper video UIST talk video hardware files Story at NPR's Points North Podcast

Prolonging VR Haptic Experiences by Harvesting Kinetic Energy from the User

Shan-Yuan Teng, K. D. Wu, Jacqueline Chen, Pedro Lopes. In Proc. UIST'22 (full paper)

UIST Honorable mention award

We propose a new technical approach to implement VR haptic devices that contain no battery, yet can render on-demand haptic feedback. The key is that the haptic device charges itself by harvesting the user's kinetic energy (i.e., movement), even without the user realizing it. This is achieved by integrating the energy harvesting into the virtual experience in a responsive manner. Whenever our batteryless haptic device is about to lose power, it switches to harvesting mode (by engaging its clutch to a generator) and, simultaneously, the VR headset renders an alternative version of the current experience that depicts resistive forces (e.g., rowing a boat in VR). As a result, the user feels realistic haptics that correspond to what they should be feeling in VR, while unknowingly charging the device via their movements. Once the supercapacitors are charged, they wake up the device's microcontroller, which then communicates with the VR headset. The VR experience can now use the harvested power for on-demand haptics, including vibration and electrical or mechanical force feedback; this process can be repeated indefinitely.

UIST'22 paper video UIST talk video hardware files
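The power management in the batteryless haptics project can be pictured as a two-state loop. The following is a minimal sketch under our own assumptions, not the paper's firmware: we assume the device monitors its supercapacitor voltage and switches modes using two hypothetical thresholds (`low_v`, `ready_v`), with hysteresis so that it does not flicker between modes.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    HARVESTING = auto()  # clutch engaged to the generator; VR renders a resistive scene
    HAPTICS = auto()     # stored energy is available for on-demand haptics


@dataclass
class BatterylessHaptics:
    # Hypothetical supercapacitor thresholds in volts (not values from the paper).
    low_v: float = 2.0    # below this, switch to harvesting
    ready_v: float = 4.5  # at or above this, wake the MCU and resume haptics
    mode: Mode = Mode.HAPTICS

    def step(self, cap_voltage: float) -> Mode:
        """Update the mode from one voltage reading (hysteresis between thresholds)."""
        if self.mode is Mode.HAPTICS and cap_voltage < self.low_v:
            self.mode = Mode.HARVESTING  # e.g., VR switches to a rowing scene
        elif self.mode is Mode.HARVESTING and cap_voltage >= self.ready_v:
            self.mode = Mode.HAPTICS
        return self.mode


device = BatterylessHaptics()
trace = [device.step(v) for v in [4.0, 1.9, 3.0, 4.6, 3.5]]
print([m.name for m in trace])
# ['HAPTICS', 'HARVESTING', 'HARVESTING', 'HAPTICS', 'HAPTICS']
```

The gap between the two thresholds is a design choice: a single threshold would toggle the VR scene back and forth whenever the voltage hovered near it.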

Integrating Real-World Distractions into Virtual Reality

Yujie Tao, Pedro Lopes. In Proc. UIST'22 (full paper)

We explore a new concept in which we directly integrate distracting stimuli from the user's physical surroundings into their virtual reality experience to enhance presence. Using our approach, an otherwise distracting wind gust can be mapped directly to the sway of trees in a VR experience that already contains trees. We demonstrate how to integrate a range of distracting stimuli into the VR experience, such as haptics (temperature, vibrations, touch), sounds, and smells. Informed by the results of three studies, we engineered a sensing module that detects a set of distracting signals during any VR experience (e.g., sounds, wind, and temperature shifts) and responds by triggering pre-made VR sequences that feel realistic to the user.

UIST'22 paper video UIST talk video open source code
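One way to picture the response step of the distraction-integration sensing module is a lookup from a detected stimulus to a pre-made sequence, gated on whether the current scene contains a plausible source (as in the wind-and-trees example). This is a hypothetical sketch: the stimulus labels, scene elements, and sequence names are illustrative, and the detection itself is not shown.

```python
from typing import Optional

# Hypothetical table: detected real-world stimulus -> (scene element it needs,
# pre-made VR sequence that makes the stimulus plausible inside the scene).
SEQUENCES = {
    "wind": ("trees", "trees_sway"),
    "temperature_drop": ("sky", "clouds_cover_sun"),
    "vibration": ("ground", "distant_rumble"),
}


def respond(stimulus: str, scene_elements: set) -> Optional[str]:
    """Return the VR sequence to trigger, or None if the scene cannot absorb it."""
    entry = SEQUENCES.get(stimulus)
    if entry is None:
        return None  # unknown stimulus: leave the experience unchanged
    required, sequence = entry
    return sequence if required in scene_elements else None


print(respond("wind", {"trees", "sky"}))  # trees_sway
print(respond("wind", {"sky"}))           # None
```

Gating on scene content reflects the example above: a wind gust is only mapped to swaying trees when the experience already contains trees.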

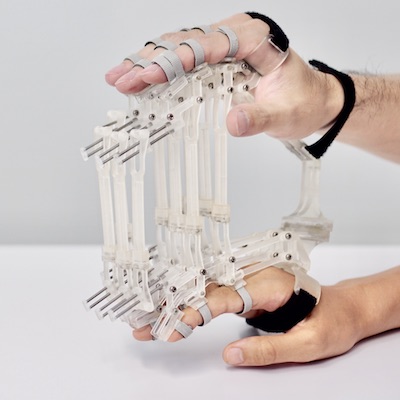

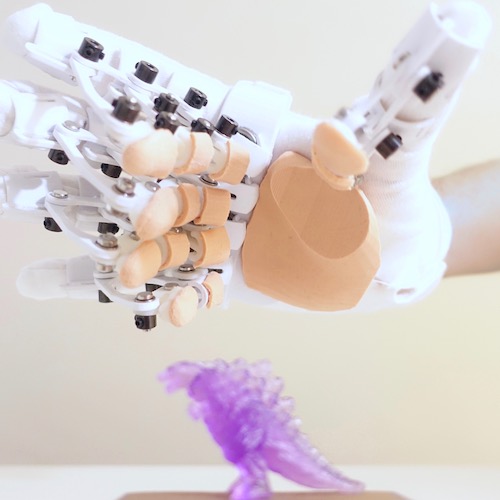

DigituSync: A Dual-User Passive Exoskeleton Glove That Adaptively Shares Hand Gestures

Jun Nishida, Yudai Tanaka, Romain Nith, Pedro Lopes. In Proc. UIST'22 (full paper)

We engineered DigituSync, a passive exoskeleton that physically links two hands together, enabling two users to adaptively transmit finger movements in real time. It uses multiple four-bar linkages to transfer both motion and force, while still preserving congruent haptic feedback. Moreover, we implemented a variable-length linkage that adjusts the force transmission ratio between the two users and thus regulates the amount of intervention, which enables users to customize their learning experience. DigituSync's benefits emerge from its passive design: unlike existing haptic devices (motor-based exoskeletons or electrical muscle stimulation), DigituSync has virtually no latency and requires no batteries or electronics to transmit or adjust movements, making it useful and safe to deploy in many settings, such as between students and teachers in a classroom.

UIST'22 paper video UIST talk video make your own exoskeleton (source code)

Electrical Head Actuation: Enabling Interactive Systems to Directly Manipulate Head Orientation

Yudai Tanaka, Jun Nishida, Pedro Lopes. In Proc. CHI'22 (full paper)

CHI best demo award (people's choice)

We propose a novel interface concept in which interactive systems directly manipulate the user's head orientation. We implement this using electrical muscle stimulation (EMS) of the neck muscles, which turns the head around its yaw (left/right) and pitch (up/down) axes. As the first exploration of EMS for head actuation, we characterized which muscles can be robustly actuated and demonstrated interactions that were not possible before by building a range of applications, such as (1) synchronizing the head orientations of two users, which enables a user to communicate head nods to another user while listening to music, and (2) directly changing the user's head orientation to locate objects in augmented reality.

CHI'22 paper video CHI talk video CHI demo video source code

Integrating Wearable Haptic Interfaces with Real-World Touch Interactions

Pedro Lopes and Yon Visell. A cross-cutting challenges workshop at Haptics Symposium'22

In this workshop, which is available entirely on YouTube, we (Pedro and Yon) invited Mar Gonzalez-Franco, David Parisi, and Allison M. Okamura to discuss how to integrate haptics into everyday life. This workshop was co-organized and assisted by Shan-Yuan Teng. Wearable tactile technologies for the hand have advanced tremendously in recent years. However, most designs are based on the canonical idea of a haptic glove. While this might be fine for VR, we argue that outside of VR the additional haptic feedback comes at a substantial cost, since the areas of the hand that we use to interact with physical objects are covered or obstructed. In this workshop, we discussed how to design a new generation of devices that can be translated for widespread use in the real world.

Complete workshop/talks on video

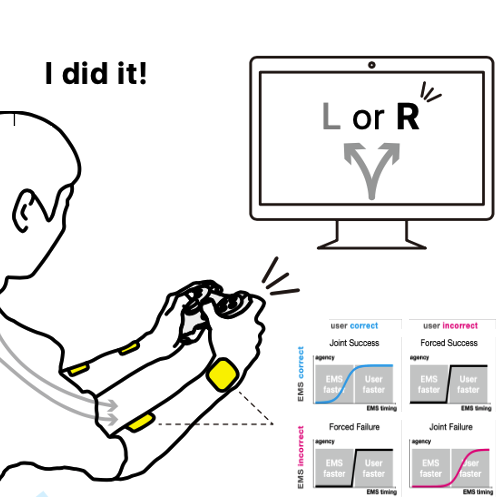

Whose touch is this?: Understanding the Agency Trade-off Between User-driven touch vs. Computer-driven Touch

Daisuke Tajima, Jun Nishida, Pedro Lopes, and Shunichi Kasahara. In ACM Transactions on Computer-Human Interaction (TOCHI'21) (full paper)

Force-feedback interfaces actuate the user's body to touch involuntarily (using exoskeletons or electrical muscle stimulation); we refer to this as computer-driven touch. Unfortunately, forcing users to touch causes a loss of their sense of agency. While our previous work found that delaying the timing of computer-driven touch preserves agency, it only considered the naive case in which user-driven touch is aligned with computer-driven touch. We argue this is unlikely, as it assumes we can perfectly predict user touches. But what about the remaining situations: when the haptics force the user into an outcome they did not intend, or assist the user in an outcome they would not achieve alone? We unveil, via an experiment, what happens in these novel situations. From our findings, we synthesize a framework that enables researchers of digital-touch systems to trade off haptic assistance against sense of agency. Read more at our project's page (we have six other papers on this topic).

TOCHI'21 paper video (presented at CHI'22)

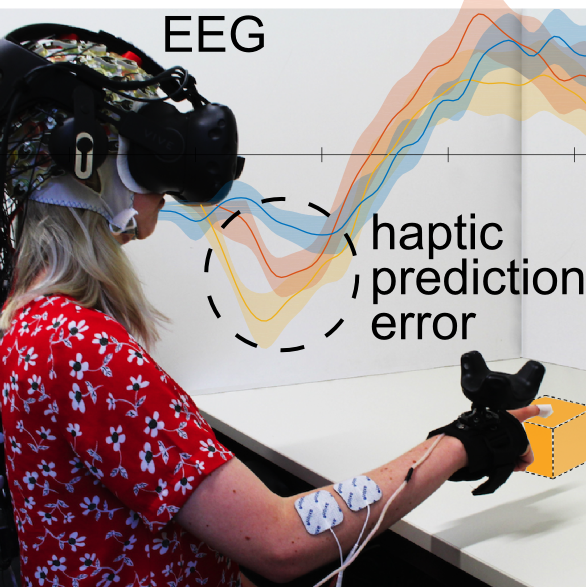

Neural Sources of Prediction Errors Detect Unrealistic VR Interactions

Lukas Gehrke, Pedro Lopes, Marius Klug, Sezen Akman and Klaus Gramann. In Journal of Neural Engineering (full paper)

In VR, designing immersion is a key challenge. Subjective questionnaires are the established metrics to assess the effectiveness of immersive VR simulations. However, administering questionnaires requires breaking the immersive experience they are supposed to assess. We present a complementary metric based on event-related potentials (ERPs). For the metric to be robust, the neural signal employed must be reliable. Hence, it is beneficial to target the neural signal's cortical origin directly, efficiently separating signal from noise. To test this new complementary metric, we designed a reach-to-tap paradigm in VR to probe EEG and movement adaptation to visuo-haptic glitches. Our working hypothesis was that these glitches, or violations of the predicted action outcome, may indicate a disrupted user experience. Using prediction error negativity features, we classified VR glitches with ~77% accuracy. This work was a collaboration led by Klaus Gramann and his team at the Neuroscience Department at TU Berlin.

Journal of Neural Engineering 2022

Chemical Haptics: Rendering Haptic Sensations via Topical Stimulants

Jasmine Lu, Ziwei Liu, Jas Brooks, Pedro Lopes, In Proc. UIST'21 (full paper)

We propose a new class of haptic devices that provide haptic sensations by delivering liquid-stimulants to the user's skin; we call this chemical haptics. Upon absorbing these stimulants, which contain safe and small doses of key active ingredients, receptors in the user's skin are chemically triggered, rendering distinct haptic sensations. We identified five chemicals that can render lasting haptic sensations: tingling (sanshool), numbing (lidocaine), stinging (cinnamaldehyde), warming (capsaicin), and cooling (menthol). To enable the application of our novel approach in a variety of settings (such as VR), we engineered a self-contained wearable that can be worn anywhere on the user's skin (e.g., face, arms, legs).

UIST'21 paper video UIST talk video hardware schematics

Altering Perceived Softness of Real Rigid Objects by Restricting Fingerpad Deformation

Yujie Tao, Shan-Yuan Teng, Pedro Lopes. In Proc. UIST'21 (full paper)

UIST best paper award

UIST best demo award (jury's choice)

We proposed a wearable haptic device that alters perceived softness of everyday objects without instrumenting the object itself. Simultaneously, our device achieves this softness illusion while leaving most of the user's fingerpad free, allowing users to feel the texture of the object they touch. We demonstrate the robustness of this haptic illusion alongside various interactive applications, such as making the same VR prop display different softness states or allowing a rigid 3D printed button to feel soft, like a real rubber button.

UIST'21 paper video UIST talk video hardware schematics

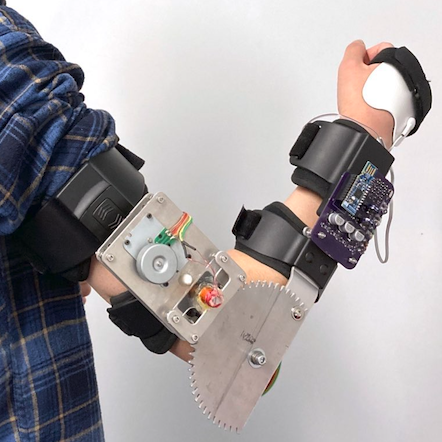

DextrEMS: Increasing Dexterity in Electrical Muscle Stimulation by Combining it with Brakes

Romain Nith, Shan-Yuan Teng, Pengyu Li, Yujie Tao, and Pedro Lopes, In Proc. UIST'21 (full paper)

UIST best demo award (people's choice)

DextrEMS is a haptic device designed to improve the dexterity of electrical muscle stimulation (EMS). It achieves this by combining EMS with a mechanical brake on all finger joints. These brakes allow us to solve two fundamental problems with current EMS devices: lack of independent actuation (i.e., when a target finger is actuated via EMS, it often also causes unwanted movements in other fingers) and unwanted oscillations (i.e., to stop a finger, EMS needs to continuously contract the opposing muscle). Using its brakes, DextrEMS achieves unprecedented dexterity in both EMS finger flexion and extension, enabling applications not possible with existing EMS-based interactive devices, such as actuating the user's fingers to pose simple letters in sign language, precise VR force feedback, or even playing the guitar.

UIST'21 paper video UIST talk video hardware schematics

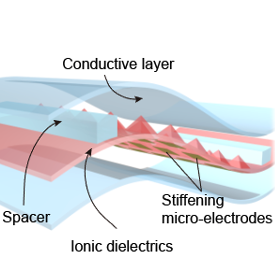

A stretchable and strain-unperturbed pressure sensor for motion-interference-free tactile monitoring on skins

Qi Su, Q. Zou, Yang Li, Yuzhen Chen, Shan-Yuan Teng, Jane Tunde Kelleher, Romain Nith, Ping Cheng, Nan Li, Wei Liu, Shilei Dai, Youdi Liu, Alex Mazursky, Jie Xu, Lihua Jin, Pedro Lopes, Sihong Wang. In Science Advances

Existing stretchable pressure sensors share an inherent limitation: stretching interferes with their pressure-sensing accuracy. We present a new design concept for a highly stretchable and highly sensitive pressure sensor whose sensing performance remains unaltered under stretching, which is realized by creating locally and biaxially stiffened micro-pyramids made from an ionic elastomer. This work was a collaboration led by Sihong Wang and his team at the Molecular Engineering Department at the University of Chicago.

ScienceAdvances'21 paper video

Increasing Electrical Muscle Stimulation's Dexterity by means of Back of the Hand Actuation

Akifumi Takahashi, Jas Brooks, Hiroyuki Kajimoto, and Pedro Lopes, In Proc. CHI'21 (full paper)

CHI best paper award (top 1%)

CHI best demo award (people's choice)

We improved the dexterity of the finger flexion produced by interactive devices based on electrical muscle stimulation (EMS). The key is a new electrode layout that we discovered on the back of the hand. Instead of the existing EMS electrode placement, which flexes the fingers via the flexor muscles in the forearm, we stimulate the interossei/lumbrical muscles in the palm. Our technique allows EMS to achieve greater dexterity around the metacarpophalangeal (MCP) joints, which we demonstrate in a series of applications, such as playing individual piano notes, performing a two-stroke drum roll, or barring guitar frets. These examples were previously impossible with existing EMS electrode layouts.

CHI'21 paper video CHI talk video

Touch&Fold: A Foldable Haptic Actuator for Rendering Touch in Mixed Reality

Shan-Yuan Teng, Pengyu Li, Romain Nith, Joshua Fonseca, and Pedro Lopes, In Proc. CHI'21 (full paper)

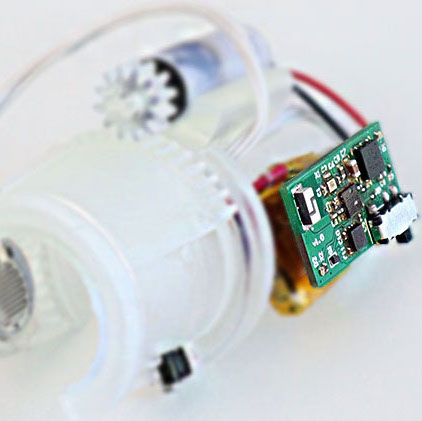

CHI honorable mention for best paper award (top 5%)

We propose a nail-mounted foldable haptic device that provides tactile feedback to mixed reality (MR) environments by pressing against the user's fingerpad when a user touches a virtual object. What is novel in our device is that it quickly tucks away when the user interacts with real-world objects. Its design allows it to fold back on top of the user's nail when not in use, keeping the user's fingerpad free to, for instance, manipulate handheld tools and other objects while in MR. To achieve this, we engineered a wireless and self-contained haptic device, which measures 24×24×41 mm and weighs 9.5 g. Furthermore, our foldable end-effector also features a linear resonant actuator, allowing it to render not only touch contacts (i.e., pressure) but also textures (i.e., vibrations).

CHI'21 paper video CHI talk video hardware schematics

MagnetIO: Passive yet Interactive Soft Haptic Patches Anywhere

Alex Mazursky, Shan-Yuan Teng, Romain Nith, and Pedro Lopes, In Proc. CHI'21 (full paper)

We propose a new type of haptic actuator, which we call MagnetIO, that comprises two parts: any number of soft interactive patches that can be applied anywhere, and one battery-powered voice coil worn on the user's fingernail. When the fingernail-worn device contacts any of the interactive patches, it detects the patch's magnetic signature and makes the patch vibrate. To allow these otherwise passive patches to vibrate, we make them from silicone with regions doped with neodymium powder, resulting in soft and stretchable magnets. This novel decoupling of traditional vibration motors allows users to add interactive patches to their surroundings by attaching them to walls, objects, or even other devices or appliances without instrumenting the object with electronics.

CHI'21 paper video CHI talk video hardware schematics

Stereo-Smell via Electrical Trigeminal Stimulation

Jas Brooks, Shan-Yuan Teng, Jingxuan Wen, Romain Nith, Jun Nishida, and Pedro Lopes, In Proc. CHI'21 (full paper)

Honorable Mention, Fast Company Innovation by Design Awards

We engineered a device that creates a stereo-smell experience, i.e., directional information about the location of an odor, by rendering the readings of external odor sensors as trigeminal sensations using electrical stimulation of the user's nasal septum. The key is that the sensations from the trigeminal nerve, which arise from nerve endings in the nose, are perceptually fused with those of the olfactory bulb (the brain region that senses smells). We then use this sensation to allow participants to track a virtual smell source in a room without any prior training.

CHI'21 paper video CHI talk video hardware schematics

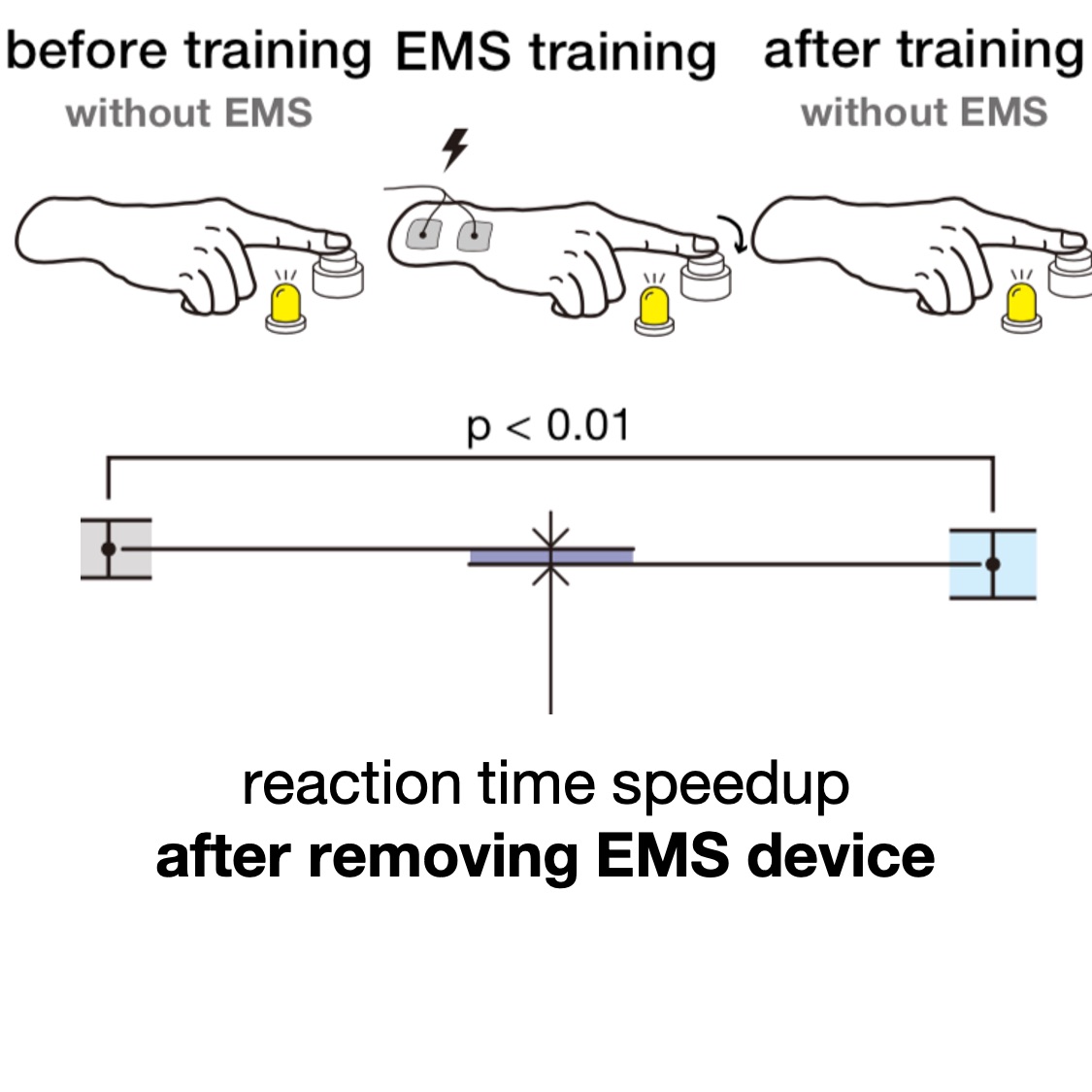

Preserving Agency During Electrical Muscle Stimulation Training Speeds up Reaction Time Directly After Removing EMS

Shunichi Kasahara, Kazuma Takada, Jun Nishida, Kazuhisa Shibata, Shinsuke Shimojo and Pedro Lopes, In Proc. CHI'21 (full paper)

We found that after training for reaction-time tasks with electrical muscle stimulation (EMS), participants' reaction times remain faster even after the EMS device is removed. Remarkably, the key to the optimal speedup is not applying EMS as soon as possible (the traditional view on EMS stimulus timing), but delaying the EMS stimulus closer to the user's own reaction time while still delivering it faster than humanly possible; this preserves some of the user's sense of agency while still accelerating them to superhuman speeds (we call this Preemptive Action). This was done in cooperation with our colleagues from Sony CSL, RIKEN Center for Brain Science, and Caltech. Read more at our project's page.

CHI'21 paper video CHI talk video source code
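The Preemptive Action timing trade-off can be expressed as a small scheduling rule. In this sketch, which is our simplification rather than the study's protocol, the stimulus is scheduled a hypothetical safety margin before the user's measured reaction time, but never earlier than the earliest moment the system can fire; the function name and all values (in milliseconds) are illustrative.

```python
def preemptive_onset_ms(user_rt_ms: float, earliest_ms: float, margin_ms: float) -> float:
    """Schedule EMS as late as possible while still preceding the user's own reaction.

    user_rt_ms:  the user's measured reaction time (hypothetical input)
    earliest_ms: the earliest time the system could deliver EMS ("as soon as possible")
    margin_ms:   how far ahead of the user the stimulus must stay (> 0)
    """
    if margin_ms <= 0:
        raise ValueError("margin_ms must be positive so EMS still precedes the user")
    return max(earliest_ms, user_rt_ms - margin_ms)


print(preemptive_onset_ms(250, 40, 60))  # 190: delayed toward the user's own timing
print(preemptive_onset_ms(80, 40, 60))   # 40: cannot fire earlier than the system allows
```

Setting `margin_ms` very large recovers the traditional "as soon as possible" policy, since the result then collapses to `earliest_ms`.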

User Authentication via Electrical Muscle Stimulation

Yuxin Chen, Zhuolin Yang, Ruben Abbou, Pedro Lopes, Ben Y. Zhao and Haitao Zheng, In Proc. CHI'21 (full paper)

We propose a novel modality for active biometric authentication: electrical muscle stimulation (EMS). To explore this, we engineered ElectricAuth, a wearable that stimulates the user's forearm muscles with a sequence of electrical impulses (i.e., the EMS challenge) and measures the user's involuntary finger movements (i.e., the response to the challenge). ElectricAuth leverages EMS's intersubject variability: the same electrical stimulation results in different movements in different users because everybody's physiology is unique (e.g., differences in bone and muscular structure, skin resistance and composition, etc.). As such, ElectricAuth allows users to log in without memorizing passwords or PINs. This work was a collaboration led by Heather Zheng (who runs the SAND Lab) at UChicago.

CHI'21 paper video CHI talk video code
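The ElectricAuth challenge-response idea can be sketched as follows, assuming each enrolled challenge is stored as a vector of finger-movement features and a response is accepted when it falls within a distance threshold. The class, feature vectors, and threshold are hypothetical, and a nearest-template distance test is a simplification we chose for illustration; the paper's actual classifier may differ.

```python
import math
import random


class EMSChallengeAuth:
    """Toy challenge-response matcher (hypothetical; not ElectricAuth's classifier)."""

    def __init__(self, templates: dict, threshold: float):
        # templates: challenge id -> this user's enrolled finger-movement vector
        self.templates = templates
        self.threshold = threshold

    def issue_challenge(self, rng: random.Random) -> str:
        # A fresh, randomly chosen stimulation sequence makes replaying old responses harder.
        return rng.choice(sorted(self.templates))

    def verify(self, challenge: str, response: list) -> bool:
        # Accept if the measured movement is close to the enrolled response.
        return math.dist(self.templates[challenge], response) <= self.threshold


auth = EMSChallengeAuth({"c1": [1.0, 0.0, 0.5], "c2": [0.2, 0.9, 0.1]}, threshold=0.3)
challenge = auth.issue_challenge(random.Random(0))
print(challenge in {"c1", "c2"})            # True
print(auth.verify("c1", [1.1, 0.0, 0.45]))  # True: close to the enrolled response
print(auth.verify("c1", [0.2, 0.9, 0.1]))   # False: a different movement pattern
```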

Elevate: A Walkable Pin-Array for Large Shape-Changing Terrains

Seungwoo Je, Hyunseung Lim, Kongpyung Moon, Shan-Yuan Teng, Jas Brooks, Pedro Lopes, and Andrea Bianchi, In Proc. CHI'21 (full paper)

Existing shape-changing floors are limited by their tabletop scale or the coarse resolution of the terrains they can display, due to the limited number of actuators and low vertical resolution. To tackle this, we engineered Elevate, a dynamic and walkable pin-array floor on which users can experience not only large variations in shape but also the details of the underlying terrain. Our system achieves this by packing 1200 pins arranged on a 1.80 × 0.60 m platform, in which each pin can be actuated to one of ten height levels (resolution: 15 mm/level). This work was a collaboration led by Andrea Bianchi, who runs the MAKinteract group at KAIST.

CHI'21 paper video CHI talk video
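Elevate's stated resolution (ten levels at 15 mm per level) implies a simple quantization step whenever a target terrain is sent to the floor. The sketch below assumes, as a hypothetical, that level 0 corresponds to a fully retracted pin at 0 mm and that the terrain arrives as a grid with one desired height per pin; the function names are illustrative and not taken from the Elevate system.

```python
LEVELS = 10   # ten height levels per pin (from the description above)
STEP_MM = 15  # 15 mm per level (from the description above)


def quantize_height(height_mm: float) -> int:
    """Map a desired terrain height to the nearest pin level, clamped to 0..9.

    Assumes level 0 sits at 0 mm, so the top level is (LEVELS - 1) * STEP_MM = 135 mm.
    """
    level = round(height_mm / STEP_MM)
    return max(0, min(LEVELS - 1, level))


def quantize_terrain(heightmap_mm: list) -> list:
    """Quantize a 2D grid of desired heights (one value per pin)."""
    return [[quantize_height(h) for h in row] for row in heightmap_mm]


print(quantize_terrain([[0, 40, 500], [-10, 22, 135]]))
# [[0, 3, 9], [0, 1, 9]]
print(round(1200 / (1.80 * 0.60)))  # 1111 pins per square metre on average
```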

HandMorph: a Passive Exoskeleton that Miniaturizes Grasp

Jun Nishida, Soichiro Matsuda, Hiroshi Matsui, Shan-Yuan Teng, Ziwei Liu, Kenji Suzuki, Pedro Lopes, In Proc. UIST'20 (full paper)

UIST best paper award

We engineered HandMorph, an exoskeleton that approximates the experience of having a smaller grasping range. It uses mechanical links to transmit motion from the wearer's fingers to a smaller hand with five anatomically correct fingers. The result is that HandMorph miniaturizes a wearer's grasping range while transmitting haptic feedback. Unlike other size-illusions based on virtual reality, HandMorph achieves this in the user's real environment, preserving the user's physical and social contexts. As such, our device can be integrated into the user's workflow, e.g., to allow product designers to momentarily change their grasping range into that of a child while evaluating a toy prototype.

UIST'20 paper video UIST talk video 3D files (print your exoskeleton)

Trigeminal-based Temperature Illusions

Jas Brooks, Steven Nagels, Pedro Lopes, In Proc. CHI'20 (full paper) CHI best paper award (top 1%)

We explore a temperature illusion that uses low-powered electronics and enables the miniaturization of simple warm and cool sensations. Our illusion relies on the properties of certain scents, such as the coolness of mint or hotness of peppers. These odors trigger not only the olfactory bulb, but also the nose's trigeminal nerve, which has receptors that respond to both temperature and chemicals. To exploit this, we engineered a wearable device that emits up to three custom-made “thermal” scents directly to the user's nose. Breathing in these scents causes the user to feel warmer or cooler.

CHI'20 paper video CHI talk video hardware schematics

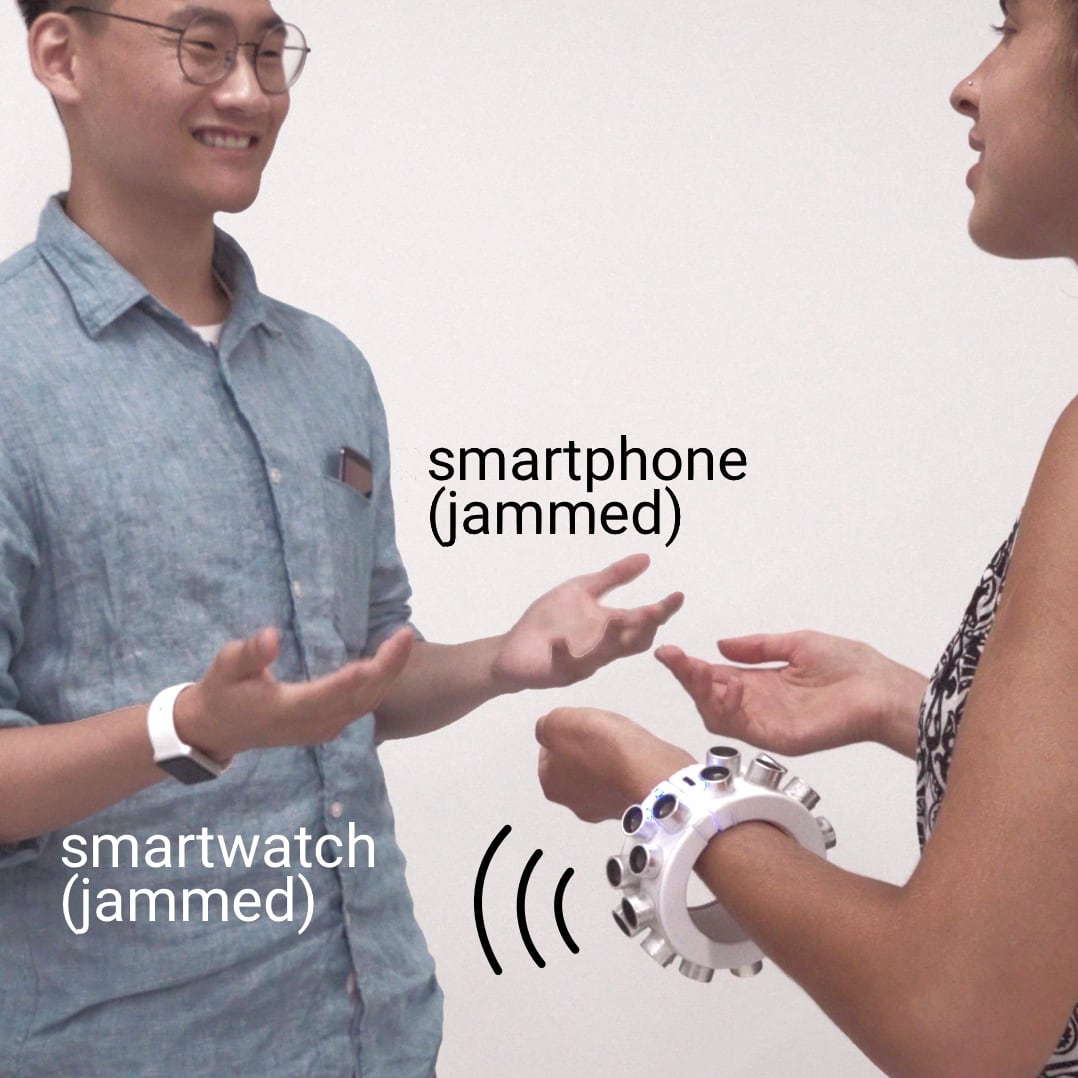

Wearable Microphone Jamming

Yuxin Chen*, Huiying Li*, Shan-Yuan Teng*, Steven Nagels, Pedro Lopes, Ben Y. Zhao and Heather Zheng, In Proc. CHI'20 (full paper)

* authors contributed equally CHI honorable mention for best paper award (top 5%)

We engineered a wearable microphone jammer that is capable of disabling microphones in its user's surroundings, including hidden microphones. Our device is based on a recent exploit that leverages the fact that, when exposed to ultrasonic noise, commodity microphones leak the noise into the audible range. Our jammer is more efficient than stationary jammers. This work was a collaboration led by Heather Zheng (who runs the SAND Lab) at UChicago.

CHI'20 paper video CHI talk video code/hardware

Next Steps in Human Computer Integration

Floyd Mueller*, Pedro Lopes*, Paul Strohmeier, Wendy Ju, Caitlyn Seim, Martin Weigel, Suranga Nanayakkara, Marianna Obrist, Zhuying Li, Joseph Delfa, Jun Nishida, Elizabeth Gerber, Dag Svanaes, Jonathan Grudin, Stefan Greuter, Kai Kunze, Thomas Erickson, Steven Greenspan, Masahiko Inami, Joe Marshall, Harald Reiterer, Katrin Wolf, Jochen Meyer, Thecla Schiphorst, Dakuo Wang, Pattie Maes. In Proc. CHI'20 (full paper) * authors contributed equally

Human-computer integration (HInt) is an emerging paradigm in which computational and human systems are closely interwoven; with rapid technological advancements and growing implications, it is critical to identify an agenda for future research in HInt.

CHI'20 paper CHI talk video

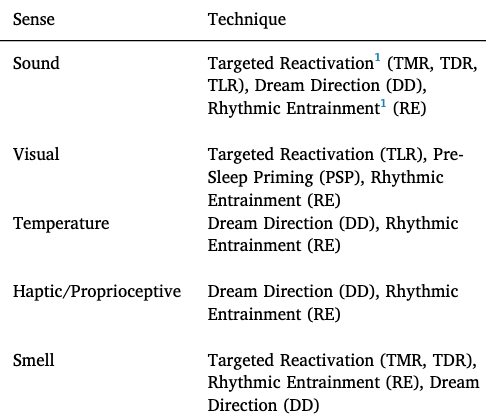

Dream engineering: Simulating worlds through sensory stimulation

Michelle Carr, Adam Haar*, Judith Amores*, Pedro Lopes*, Guillermo Bernal, Tomás Vega, Oscar Rosello, Abhinandan Jain, Pattie Maes. In Proc. Consciousness and Cognition (Vol. 83, 2020) (journal paper) * authors contributed equally

We draw a parallel between recent VR haptic and sensory devices, which aim to stimulate more senses during virtual interactions, and the work of sleep and dream researchers, who are exploring how the senses are integrated into, and influence, the sleeping mind. We survey recent developments in HCI technologies and analyze which might provide a useful hardware platform to manipulate dream content through sensory manipulation, i.e., to engineer dreams. This work was led by Michelle Carr (University of Rochester) in collaboration with the Fluid Interfaces group (MIT Media Lab).

Consciousness and Cognition'20 paper

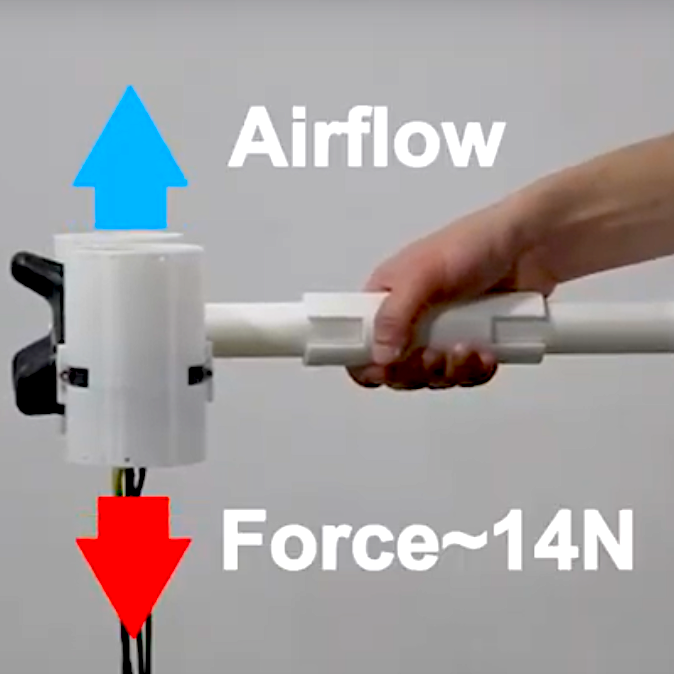

Aero-plane: a Handheld Force-Feedback Device that Renders Weight Motion Illusion

Seungwoo Je, Myung Jin Kim, Woojin Lee, Byungjoo Lee, Xing-Dong Yang, Pedro Lopes, Andrea Bianchi. In Proc. UIST'19 (full paper)

We engineered Aero-plane, a force-feedback handheld controller based on two miniature jet propellers that can render shifting weights of up to 14 N within 0.3 seconds. Unlike other ungrounded haptic devices, our prototype realistically simulates weight changes over 2D surfaces. This work was a collaboration led by Andrea Bianchi, who runs the MAKinteract group at KAIST.

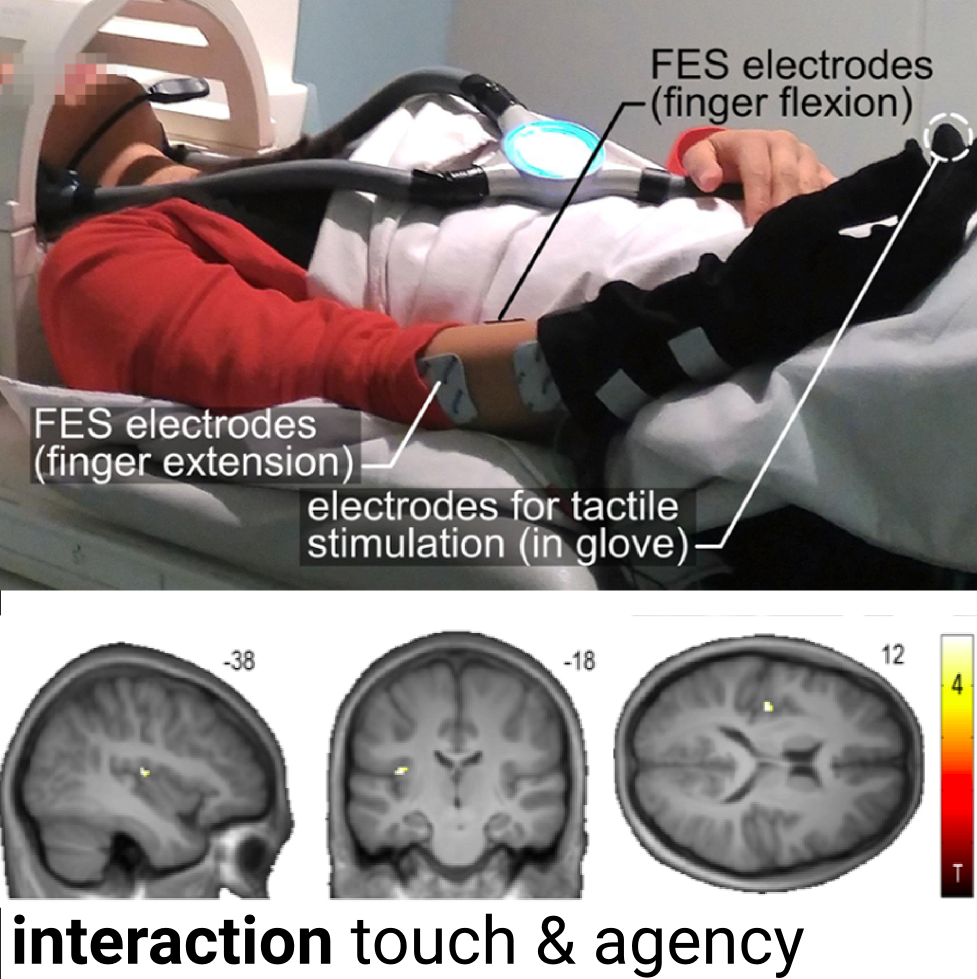

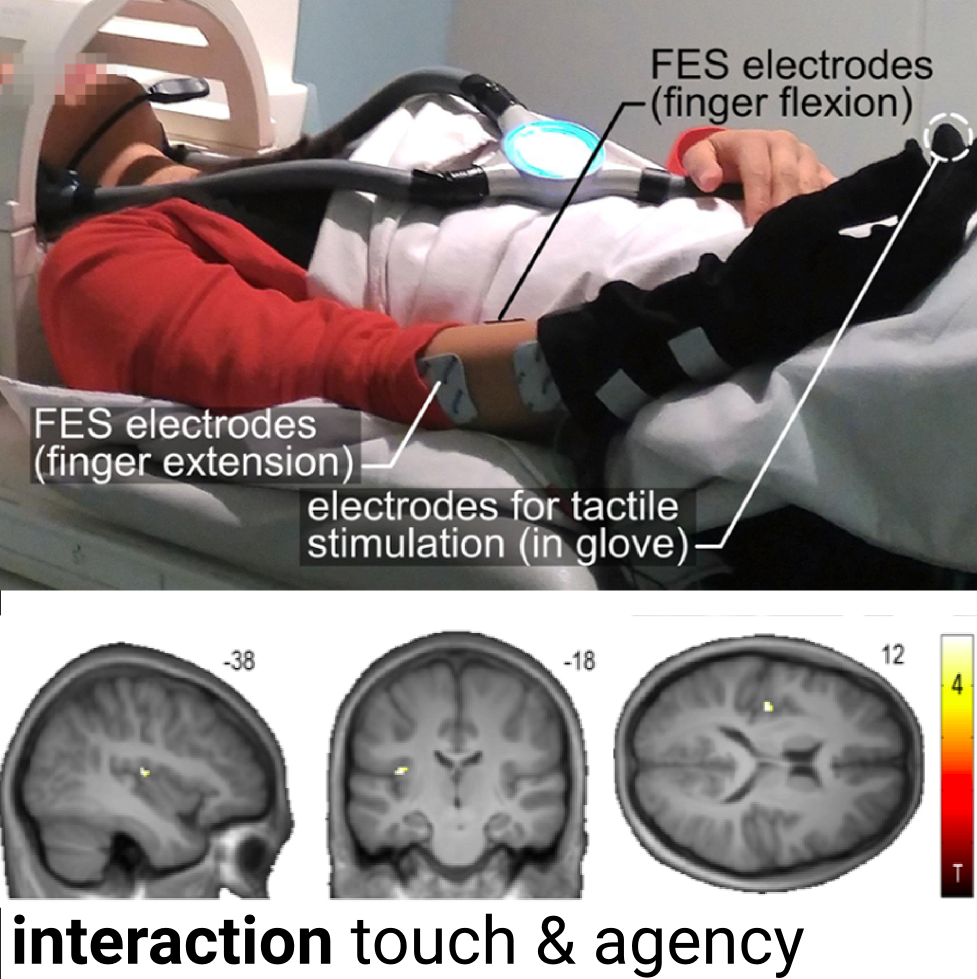

Action-dependent processing of touch in the human parietal operculum

Jakub Limanowski, Pedro Lopes, Janis Keck, Patrick Baudisch, Karl Friston, and Felix Blankenburg. In Cerebral Cortex (journal)

Tactile input generated by one's own agency is generally attenuated. Conversely, externally caused tactile input is enhanced; e.g., during haptic exploration. We used functional magnetic resonance imaging (fMRI) to understand how the brain accomplishes this weighting. Our results suggest an agency-dependent somatosensory processing in the parietal operculum. Read more at our project's page.

Preemptive Action: Accelerating Human Reaction using Electrical Muscle Stimulation Without Compromising Agency

Shunichi Kasahara, Jun Nishida and Pedro Lopes. In Proc. CHI'19, Paper 643 (full paper) and demonstration at SIGGRAPH'19 eTech.

Grand Prize, awarded by Laval Virtual in partnership with SIGGRAPH'19 eTech

We found that it is possible to optimize the timing of haptic systems to accelerate human reaction time without fully compromising the user's sense of agency. This work was done in cooperation with Shunichi Kasahara from Sony CSL. Read more at our project's page.

CHI'19 paper video SIGGRAPH'19 etech CHI'19 talk (slides) CHI talk video

Detecting Visuo-Haptic Mismatches in Virtual Reality using the Prediction Error Negativity of Event-Related Brain Potentials

Lukas Gehrke, Sezen Akman, Pedro Lopes, Albert Chen, ..., Klaus Gramann. In Proc. CHI'19, Paper 427 (full paper)

We detect visuo-haptic mismatches in VR by analyzing the user's event-related potentials (ERP). In our EEG study, participants touched VR objects and received either no haptics, vibration, or vibration and EMS. We found that the negativity component (prediction error) was more pronounced in unrealistic VR situations, indicating visuo-haptic mismatches. Read more at our project's page.

Adding Force Feedback to Mixed Reality Experiences and Games using Electrical Muscle Stimulation

Pedro Lopes, Sijing You, Alexandra Ion, and Patrick Baudisch. In Proc. CHI'18. (full paper)

We present a mobile system that enhances mixed reality experiences, displayed on a Microsoft HoloLens, with force feedback by means of electrical muscle stimulation (EMS). The benefit of our approach is that it adds physical forces while keeping the users' hands free to interact unencumbered, not only with virtual objects but also with physical objects, such as props and appliances that are an integral part of both virtual and real worlds.

Providing Haptics to Walls and Other Heavy Objects in Virtual Reality by Means of Electrical Muscle Stimulation

Pedro Lopes, Sijing You, Alexandra Ion, and Patrick Baudisch. In Proc. CHI'17 (full paper) and demonstration at SIGGRAPH'17 studios

We explored how to add haptics to walls and other heavy objects in virtual reality. Our contribution is that we prevent the user's hands from penetrating virtual objects by means of electrical muscle stimulation (EMS). As the user lifts a virtual cube, our system lets the user feel the weight and resistance of the cube. The heavier the cube and the harder the user presses against it, the stronger the counterforce the system generates.
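The monotonic relationship described for walls and heavy objects (heavier cube and harder press, stronger counterforce) can be sketched as a clamped mapping to stimulation intensity. The linear form, the gains `k_mass` and `k_press`, and the normalized 0 to 1 scale are our assumptions for illustration; the paper's actual controller is not reproduced here.

```python
def counterforce_intensity(virtual_mass_kg: float, press_force_n: float,
                           k_mass: float = 0.05, k_press: float = 0.02,
                           max_intensity: float = 1.0) -> float:
    """Map object heaviness and user effort to a normalized EMS intensity (0..max).

    The linear gains and normalized scale are hypothetical placeholders, not
    calibrated values; real EMS intensity must be calibrated per user.
    """
    raw = k_mass * virtual_mass_kg + k_press * max(0.0, press_force_n)
    return max(0.0, min(max_intensity, raw))


print(counterforce_intensity(2, 10))     # ~0.3
print(counterforce_intensity(2, 20))     # ~0.5: pressing harder -> stronger counterforce
print(counterforce_intensity(100, 100))  # 1.0: clamped at the ceiling
```

Clamping to `max_intensity` keeps the output bounded regardless of how heavy the virtual object is.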

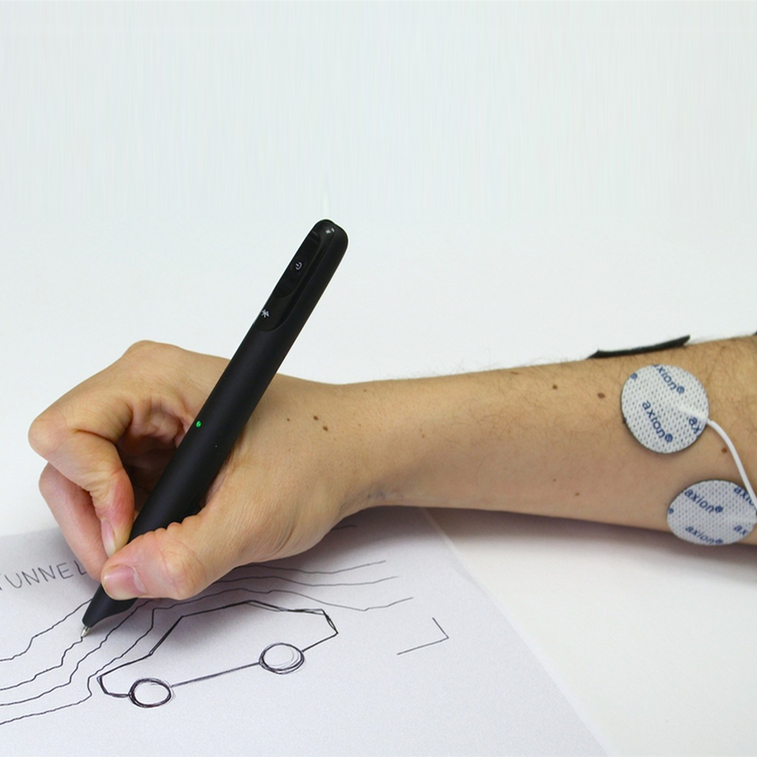

Muscle-plotter: An Interactive System based on Electrical Muscle Stimulation that Produces Spatial Output

Pedro Lopes, Doga Yueksel, François Guimbretière, and Patrick Baudisch. In Proc. UIST'16 (full paper).

We explore how to create more expressive EMS-based systems. Muscle-plotter achieves this by persisting EMS output, allowing the system to build up a larger whole. More specifically, it spreads out the 1D signal produced by EMS over a 2D surface by steering the user's wrist. Rather than repeatedly updating a single value, this renders many values into curves.
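The key step in Muscle-plotter, turning a 1D EMS output into a 2D curve, can be sketched as a per-sample decision: while the user sweeps the pen along one axis, the system compares the pen's position on the other axis with the target curve and stimulates the wrist toward it. The function names, direction labels, and the bang-bang rule with a deadband are hypothetical simplifications, not Muscle-plotter's actual control loop.

```python
from typing import Callable


def steer_wrist(f: Callable[[float], float], x: float, y_pen: float,
                deadband: float = 0.5) -> str:
    """Decide which wrist muscle to stimulate so the pen tracks y = f(x).

    The user moves the pen along x; the system only controls the y direction.
    """
    error = f(x) - y_pen
    if error > deadband:
        return "up"    # stimulate the muscle that deflects the wrist upward
    if error < -deadband:
        return "down"  # stimulate the opposing muscle
    return "none"      # close enough: no stimulation


def curve(x: float) -> float:
    return 2 * x


print(steer_wrist(curve, 3.0, 4.0))  # up   (target 6.0 is above the pen)
print(steer_wrist(curve, 3.0, 6.2))  # none (within the deadband)
print(steer_wrist(curve, 3.0, 8.0))  # down
```

The deadband avoids constantly alternating between opposing muscles when the pen is already near the curve.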

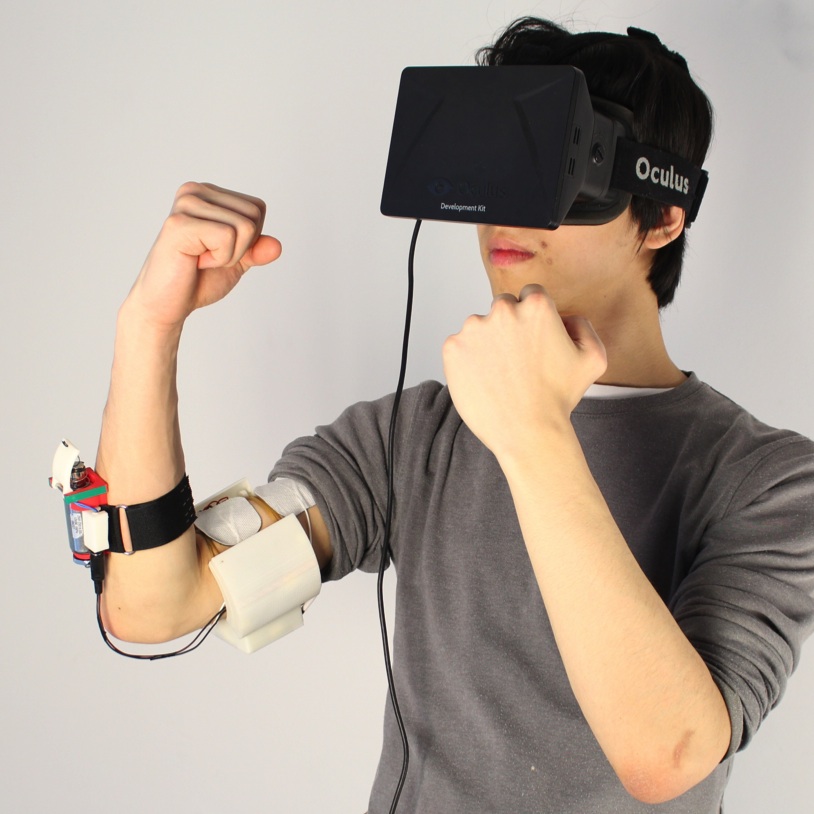

Impacto: Simulating Physical Impact by Combining Tactile Stimulation with Electrical Muscle Stimulation

Pedro Lopes, Alexandra Ion, and Patrick Baudisch. In Proc. UIST'15 (full paper). UIST best demo nomination

We present Impacto, a device designed to render the haptic sensation of hitting and being hit in virtual reality. The key idea that allows the small and light Impacto device to simulate a strong hit is that it decomposes the stimulus: it renders the tactile aspect of being hit by tapping the skin using a solenoid, and it adds impulse to the hit by thrusting the user's arm backwards using electrical muscle stimulation. The device is self-contained, wireless, and small enough for wearable use.

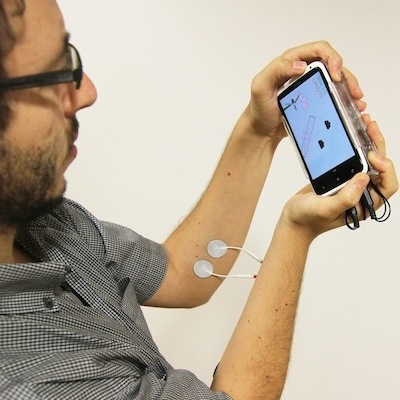

Affordance++: Allowing Objects to Communicate Dynamic Use

Pedro Lopes, Patrik Jonell, and Patrick Baudisch. In Proc. CHI'15 (full paper). CHI best paper award (top 1%)

We propose extending the affordance of objects by allowing them to communicate dynamic use, such as (1) motion (e.g., spray can shakes when touched), (2) multi-step processes (e.g., spray can sprays only after shaking), and (3) behaviors that change over time (e.g., empty spray can does not allow spraying anymore). Rather than enhancing objects directly, however, we implement this concept by enhancing the user with electrical muscle stimulation. We call this affordance++.

Proprioceptive Interaction

Pedro Lopes, Alexandra Ion, Willi Mueller, Daniel Hoffmann, Patrik Jonell, and Patrick Baudisch. In Proc. CHI'15 (full paper). CHI best talk award

We propose a new way of eyes-free interaction for wearables. It is based on the user's proprioceptive sense, i.e., users feel the pose of their own body. We have implemented a wearable device, Pose-IO, that offers input and output based on proprioception. Users communicate with Pose-IO through the pose of their wrists. Users enter information by performing an input gesture by flexing their wrist, which the device senses using an accelerometer. Users receive output from Pose-IO by finding their wrist posed in an output gesture, which Pose-IO actuates using electrical muscle stimulation.
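On the input side of Proprioceptive Interaction, a wrist pose can be read from an accelerometer by estimating the tilt of the gravity vector. This is a standard tilt-estimation technique shown as a hypothetical sketch, not Pose-IO's implementation: the axis convention, the 30 degree threshold, and the pose labels are assumptions, and the estimate is only valid while the wrist is roughly still.

```python
import math


def wrist_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Estimate tilt about the sensor's y-axis from the gravity vector (static pose only)."""
    return math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))


def classify_pose(ax: float, ay: float, az: float, threshold_deg: float = 30.0) -> str:
    """Label the wrist pose; which sign means 'flexed' depends on sensor mounting."""
    pitch = wrist_pitch_deg(ax, ay, az)
    if pitch > threshold_deg:
        return "flexed"
    if pitch < -threshold_deg:
        return "extended"
    return "neutral"


print(classify_pose(0.0, 0.0, 1.0))   # neutral  (pitch 0 degrees)
print(classify_pose(1.0, 0.0, 0.0))   # flexed   (pitch 90 degrees)
print(classify_pose(-1.0, 0.0, 0.2))  # extended (pitch about -79 degrees)
```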

Muscle-propelled force feedback: bringing force feedback to mobile devices

Pedro Lopes and Patrick Baudisch. In Proc. CHI'13 (short paper). IEEE World Haptics, People's Choice Nomination for Best Demo

Force feedback devices resist miniaturization, because they require physical motors and mechanics. We propose mobile force feedback by eliminating motors and instead actuating the user's muscles using electrical stimulation. Without the motors, we obtain substantially smaller and more energy-efficient devices. Our prototype fits on the back of a mobile phone. It actuates users' forearm muscles via four electrodes, which causes users' muscles to contract involuntarily, so that they tilt the device sideways. As users resist this motion using their other arm, they perceive force feedback.

The publications above are core to our lab's mission. If you are interested in more of Pedro's publications on other topics, see here.

Teaching by HCI lab (active classes)

These three classes run in a sequence (Fall, Winter, Spring) and allow you to earn our Undergraduate Specialization in HCI with expertise in hands-on hardware (e.g., Arduino, electronics, DIY, sensors, actuators, wearables, etc.). They are best taken one after the other, since the final class, CMSC 23230, requires you to have taken a hardware or physics class before. Students graduating from these classes in the past have used their expertise for professional opportunities at Microsoft (AR/VR), Apple (e.g., hardware security), the video-game industry (e.g., EA, Riot Games, etc.), user-facing software companies (e.g., Amazon, Google), and competitive CS/HCI graduate schools (e.g., CMU, Princeton, UW, UChicago).

1. Introduction to Human-Computer Interaction (CMSC 20300)

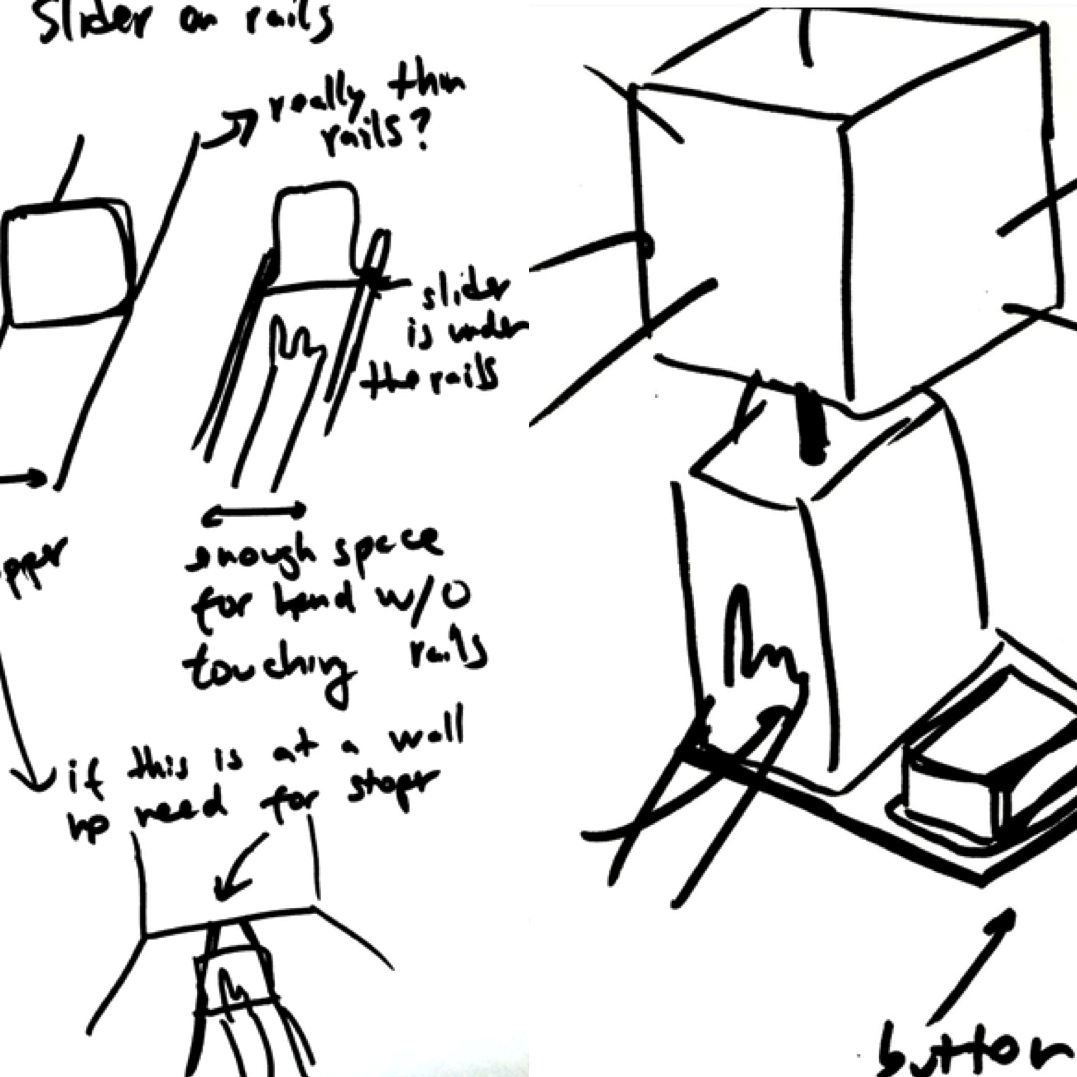

Synopsis: An introduction to the field of Human-Computer Interaction (HCI), with an emphasis on understanding, designing, and programming user-facing software and hardware systems. This class covers the core concepts of HCI: affordances, mental models, selection techniques (pointing, touch, menus, text entry, widgets, etc.), conducting user studies (psychophysics, basic statistics, etc.), rapid prototyping (3D printing, etc.), and the fundamentals of 3D interfaces (optics for VR, AR, etc.). We complement the lectures with weekly programming assignments and two larger projects, in which we build, program, and test user-facing interactive systems. See here for the class website. (This class is required for our Undergraduate Specialization in HCI; see here for details.)

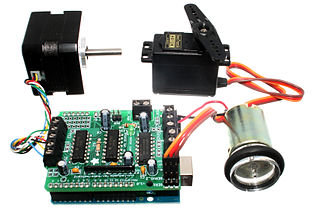

2. Inventing, Engineering and Understanding Interactive Devices (CMSC 23220; )

Synopsis: In this class, we build I/O devices, typically wearable or haptic devices: user-facing hardware engineered to enable new ways to interact with computers. To help you succeed in building your own I/O device, we will: (1) study and program 8-bit microcontrollers, (2) explore different analog and digital sensors and actuators, (3) write control loops and filters, and (4) learn how to design simple bit-level protocols for Bluetooth communication. We complement the lectures with labs, weekly programming/circuit-building assignments, and one larger project, in which we build a complete circuit for a standalone wearable device. See here for the class website. (This class is part of our Undergraduate Specialization in HCI; see here for details.)

3. Engineering Interactive Electronics onto Printed Circuit Boards (CMSC 23230 and CMSC 33230; )

Synopsis: In our "PCB class," we will engineer electronics from scratch onto Printed Circuit Boards (PCBs). We focus on designing and laying out the circuit and PCB for our own custom-made I/O devices, such as wearable or haptic devices. To help you succeed in engineering a functional PCB, we will: (1) review digital circuits and three microcontrollers (ATMEGA, NRF, SAMD), (2) use KiCad to build circuit schematics, (3) learn how to wire analog/digital sensors and actuators to our microcontroller, including via the SPI and I2C protocols, (4) use KiCad to lay out our PCBs, (5) manufacture our designs in a real factory, (6) receive the manufactured PCBs back from the factory, and (7) finally, learn how to debug our custom-made PCBs. This class is the advanced version of CMSC 23220; while it is possible to take it without having taken 23220, we do not recommend doing so unless you already have some experience with microcontroller programming, breadboarding, and simple circuit design. We complement the lectures with weekly labs, weekly circuit design assignments, and one larger project, in which we design a complete PCB from scratch that is then manufactured in a factory. See here for the class website. (This class is part of our Undergraduate Specialization in HCI; see here for details. Finally, this is a systems class that covers a lot of ground in bit-based protocol design, low-level hardware, bootloaders, and more.)

While we do our best to increase our classes' capacity, our HCI classes fill up quickly. If that happens and you still want to register, please use the CS waiting list.

Classes offered in special quarters

4. Emerging Interface Technologies (CMSC 33240 and CMSC 23240; next: stay tuned!)

Synopsis: In this class, we examine emerging technologies that might impact future generations of computing interfaces; these include: physiological I/O (e.g., brain and muscle computer interfaces), tangible computing (giving shape and form to interfaces), wearable computing (I/O devices closer to the user's body), rendering new realities (e.g., virtual and augmented reality), and haptics (giving computers the ability to generate touch and forces). (Note that this class supersedes our former "HCI Topics" graduate seminar; it is a hands-on class with more projects and assignments, not a typical graduate seminar.) See here for the class website. (This class is part of our Undergraduate Specialization in HCI; see here for details.)

5. Human-Computer Interaction and Neuroscience (CMSC 33231-1; next: stay tuned!)

Synopsis: In this class, we examine the field of HCI using neuroscience as a lens to generate ideas. This is an advanced graduate-level seminar that assumes expertise in HCI (especially in haptics and human actuation) and in basic neuroscience (e.g., sensory systems). The class is based on mini-challenges, paper discussions, interactions with neuroscience experts on campus, and paper writing/experiment design.

Co-designed classes and past courses

6. Creative Machines (PHYS 21400; CMSC 21400; ASTR 31400, PSMS 31400, CHEM 21400, ASTR 21400)

Note: This class is taught by Stephan Meyer (Astrophysics), Scott Wakely (Physics), and Erik Shirokoff (Astrophysics). While this class is not taught by Pedro Lopes, it was co-created by Pedro together with Scott Wakely (Physics), Stephan Meyer (Astrophysics), Aaron Dinner (Chemistry), Benjamin Stillwell, and Zack Siegel. We highly recommend this class to students interested in HCI or in working with us.

Synopsis: Techniques for building creative machines are essential for a range of fields, from the physical sciences to the arts. In this hands-on course, you will engineer and build functional devices, covering, e.g., mechanical design and machining, CAD, rapid prototyping, and circuitry; no previous experience is expected. Open to undergraduates in all majors, as well as Master's and Ph.D. students.

Synopsis: In this class, we review developments in HCI technologies that might impact future generations of computing interfaces; these include: physiological I/O (e.g., brain and muscle computer interfaces), tangible computing (giving shape and form to interfaces), wearable computing (I/O devices closer to the user's body), rendering new realities (e.g., virtual and augmented reality), and haptics (giving computers the ability to generate touch and forces). This is a graduate seminar with an emphasis on paper reading, discussion, and paper writing. (This class is paused; we recommend taking the more hands-on Emerging Interface Technologies class instead.)

Selected alumni

Prof. Jun Nishida

Postdoc 2019-22

After: Prof. UMaryland

Prof. Jas Brooks

PhD 2019-2025

After: Prof. MIT

Prof. Shan-Yuan Teng

PhD 2019-2025

After: Prof. NTU

Prof. Akifumi Takahashi

Postdoc 2022-24

After: Prof. Tohoku University

Dr. Alex Mazursky

PhD 2020-2025

After: Apple (Haptics)

Yujie Tao

Master 2021-22

After: Stanford PhD

Joyce Passananti

Ugrad 2022

After: UW PhD

Beza Desta

Ugrad 2023

After: Princeton MSE

Arata Jingu

Intern 2022

After: Saarland PhD

Noor Amin

Ugrad 2023

After: Riot Games

Archit Tamhane

Highschool 2024

After: Harvard Ugrad

Zoe Liu

Master 2021

After: Apple

Dr. Keigo Ushiyama

Visiting PhD 2023

After: UTokyo Postdoc

Jacob Serfaty

Ugrad 2022-24

After: UChicago

Gene Kim

Ugrad Intern 2024

After: MIT PhD

Haley Breslin

Ugrad 2025

Siya Choudhary

Highschool 2024

After: UIUC Ugrad

Aryan Gupta

Highschool 2024

After: UIUC Ugrad

Please click here to see even more of our lovely alumni!

Apply

We are always looking for exceptional students in Computer Science / Human-Computer Interaction, but also in Electrical Engineering, Neuroscience, Physics, Materials Science, and Mechanical Engineering. You do not need prior publications to apply to our lab, but you need to enjoy doing research and science (which you can demonstrate not only via publications but also through projects you did, tools you built, circuits you made, etc.). If you are interested in applying, please carefully read all the information on our applications page.

We have undergraduate/intern programs with many universities (e.g., Fudan, Keio, NTU, Peking, University of Hong Kong (ShenZhen), Electro-Communications Tokyo, University of Science and Technology of China, Tsukuba, Tsinghua, and Zhejiang); please check this page for information and deadlines.

Supported by

Our lab is supported by the following sponsor organizations:

NSF

2021 NSF CAREER Award; Jas and Jasmine (GRFP); our work with SANDLab.

Sloan Foundation

2022 Sloan Fellowship supports our work

Sony

Faculty research award supports EMS/AR work

UCWB

Supports our work on EMS learning

Supports our sensory substitution work

JSPS

Jun and Akifumi were supported by JSPS

CDAC

Supports our projects with Prof. Sihong Wang (PME) and Prof. Dr. Andrey Rzhetsky (BSD)

Big Ideas Generator

Supports our research on wearable haptics

More links

Trying to find info about something else? Here's a list of links for you:

- XR meetup (informal meetup about XR at CHI, UIST, SIGGRAPH)

- Ask-me-anything on "Being a new faculty/starting a new lab" by Prof. Pedro Lopes at UIST 2022 (also at Stanford HCI Retreat)

- Complete list of press articles about our work

- Like our YouTube videos showing multiple demos in one take? They are all here.

Contact us / Visit us

- Coming for a visit? Here's a step-by-step guide to find the lab.

- John Crerar Library, 292

- Computer Science Department

- 5730 S. Ellis Avenue

- Chicago, IL 60637, USA.