Human Computer Integration Lab

Computer Science Department, University of Chicago

Essays (long-form writings from our lab)

Essay #3: What happens at the intersection of Human-Computer Interaction and Neuroscience? A panel at CHI and a meeting of the minds in Japan, by Prof. Pedro Lopes

21st June, 2025

TLDR: I argue that to advance the next generation of computer interfaces, one where devices integrate directly with our body, we must dive into neuroscience!

HCI x Neuroscience?

My lab has become known for bridging parts of interface design & hardware engineering with methods & ideas from neuroscience. At first, this felt purely stylistic; maybe it was just my own approach to computer interfaces. Still, it is certainly far from mainstream in Human-Computer Interaction (if anything, the field seems to operate primarily from a powerful design perspective).

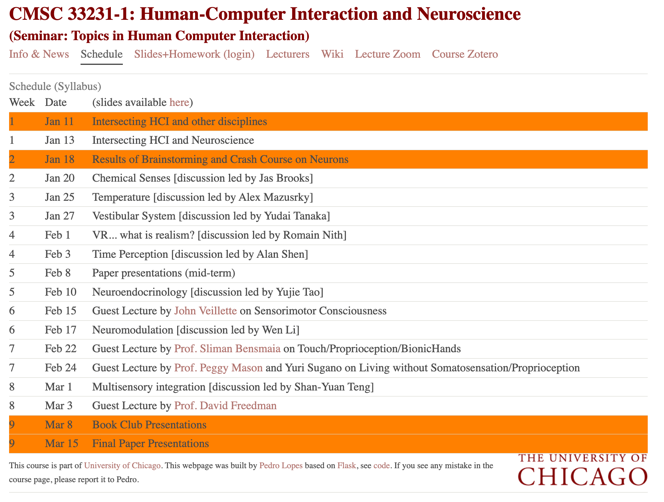

A few years ago, I decided to see how students from Human-Computer Interaction might resonate with the idea that much knowledge exists at the intersection of Neuroscience and HCI. To explore this, I taught a seminar class at the University of Chicago entitled “Human-Computer Interaction x Neuroscience” (CMSC 33231-1), where the “x” stood for “meets” rather than a combative “versus”. In it, I provoked students (from Computer Science, Cognitive Science, Chemistry, and Media Arts) to find insights in Neuroscience that would enable them to rethink computer interfaces.

The syllabus, shown in the image, started with my own “crash course” on neurons. Then, the class rapidly turned into deep dives into specific subdomains: chemical senses, vestibular system, thermal regulation, time perception, neuroendocrinology, consciousness, neuromodulation, touch & proprioception, visual perception, and much more. I had the immense pleasure of having both the students themselves lecture to each other and distinguished guests such as Prof. Peggy Mason, (newly minted) Dr. John Veillette, Prof. David Freedman, and the late Prof. Sliman Bensmaia.

At first glance, the tower of abstractions allows an HCI researcher like me to forget about what’s happening deep down at the neuron level. However, this class, my lab’s work, and my initial work in neuroscience (e.g., see our fMRI paper on agency during electrically-induced movements in Cerebral Cortex) led me to believe there was much to gain by embracing precisely the complexity and wealth of knowledge in neuroscience.

In fact, after having these stellar neuroscientists speak in my class, it became evident: We are trying to answer many of the same questions but with different lenses & words!

NeuroCHI: a panel at ACM CHI 2024

One way I decided to explore this idea was to bring this intersection of fields to the forefront of our largest conference: ACM CHI (the flagship publication venue & conference in the field of Human-Computer Interaction). Thus, together with my PhD student Yudai Tanaka, I organized a panel at ACM CHI. You can read about this panel at my lab’s website or in this extended abstract for CHI. For this panel, we invited Prof. Pattie Maes (MIT Media Lab), Prof. Olaf Blanke (EPFL), Prof. Rob Jacob (Tufts), and Dr. Sho Nakagome (Meta Reality Labs). The panel served to surface examples of what we might gain by exploring a neuroscience-inspired path towards interface design. Particularly outstanding was Olaf’s input, which confirmed my firm belief that there was much to gain from the interaction of these two fields (his work at this intersection includes insights that have provided much understanding and inspiration to, for instance, the field of virtual reality). While, to my surprise, the panel gathered a large audience at CHI, the mission was not just to tell my fellow HCI researchers about neuroscience, but to bridge the two fields.

A meeting of the (HCI & Neuro) minds

To create a bridge between these two fields, I organized a meeting (a so-called NII Shonan Meeting) in Japan to catalyze interactions between Human-Computer Interaction and Neuroscience.

The meeting, titled (of course) HCI x Neuroscience, aims to provide a venue for people from many subdisciplines of HCI and Neuroscience to hold discussions in a long format (~5 days).

The meeting starts tomorrow, and we have a stellar set of participants who will share in this first exploration of HCI x Neuro, namely my fellow co-organizers: Dr. Shunichi Kasahara, Prof. Takefumi Hiraki, Dr. Shuntaro Sasai; our student organizer Yudai Tanaka; as well as our guests: Prof. Misha Sra, Dr. Artur Pilacinski, Prof. Wilma A. Bainbridge, Prof. Olaf Blanke, Prof. Tom Froese, Prof. Hideki Koike, Dr. Yuichi Hiroi, Prof. Takashi Amesaka, Prof. Alison Okamura, Prof. Yoichi Miyawaki, Dr. Lukas Gehrke, Dr. Tamar Makin, Prof. Howard Nusbaum, Prof. Momona Yamagami, Prof. Norihisa Miki, Prof. Peggy Mason, Dr. Takeru Hashimoto, Prof. Rob Lindeman, Prof. Greg Welch, Prof. Eduardo Veas, Prof. Tiara Feuchtner, and Prof. Nobuhiro Hagura. I will save all the exciting outcomes for a future essay on this meeting alone, since the goal of this essay is to provide some framing to motivate the intersection of HCI and Neuroscience.

Why intersect HCI x Neuro… now?

One aspect that deserves a pause is why I believe that the intersection of HCI with Neuroscience has become important now. Why not 50 years ago, when computing interfaces were taking the world by storm with the invention of the desktop interface?

I’d argue the time is ripe now, and understanding why will take us back into the history of HCI. Today, computers are seen as everyday tools. No other modern tool has experienced this level of widespread adoption: computers are used nearly anytime & anywhere and by nearly everyone. But this did not happen overnight, and to achieve massive adoption, it was not only the hardware and software that needed to improve, but especially the user interface.

Evolution of the interface. In the early days of computing, computers took up entire rooms, and interacting with them was slow and sparse: users entered commands as text (via punch cards and later via terminals) and received the results minutes or hours later (as printed text). As a result, computers remained specialized tools that required tremendous expertise to operate. To enter an era of wider adoption, many advances were needed (e.g., miniaturization of electronic components), especially the invention of a new type of human-computer interface that allowed for a more expressive interaction: the graphical user interface (with its interface elements, many of which we still use today, e.g., icons, folders, desktop, mouse pointer) [1].

This revolution led to a proliferation of computers as office-support tools. Because interactions were much faster and the interface feedback was immediate, users were now spending eight hours a day with a computer, rather than just a few minutes while entering/reading text. Still, computers were stationary, and users never carried desktop computers around. More recently, not only did the steady miniaturization of components boost portability, but a new interface revolutionized the way we interact: the touchscreen. By letting users touch a dynamic screen rather than fixed keys, it enables an extreme miniaturization of the interface component (no keyboard needed), leading to the most widespread device in history: the smartphone. With smartphones, users no longer interact with computers only at their workplace desk, but carry them around all the waking hours of the day and use them anytime and anywhere.

What drives interface revolutions? I posit that three factors are driving interfaces: (1) hardware miniaturization, a corollary of Moore’s law; (2) maximizing the user interface: almost every single inch of a computer is now used for user interactions, e.g., virtually the entire surface of a smartphone is touch-sensitive or enables special interactions (remember that the back of the phone is now prime space for cameras, tap-to-pay, or wireless charging hardware); and (3) interfaces moving closer to the user’s body, which is best demonstrated by the fact that early users of mainframe computers interacted with machines only for a brief period of the day, while modern users interact with computers for 10+ hours a day, including work and leisure.

Devices integrating with the user’s body. The revolution of the interface did not end here. Recently, we can observe these three trends being extrapolated again as a new interface paradigm enters the mainstream: wearable devices (e.g., smartwatches, VR/AR, etc.). To arrive at this type of interactive device, (1) the hardware was miniaturized (especially MEMS); (2) the interface was maximized: motion sensors, 3D cameras, and haptic actuators unlocked new interaction modalities such as gestures and rich haptic feedback (e.g., vibration); and (3) the new interface was designed to be even closer to users, physically touching their skin and allowing them to wear a computer 24 hours a day. In fact, wearables excel at tasks that smartphones cannot handle, such as determining a user’s physiological state (e.g., heartbeat, O2 levels, etc.) or extremely fast interactions (e.g., sending a message to a loved one while jogging).

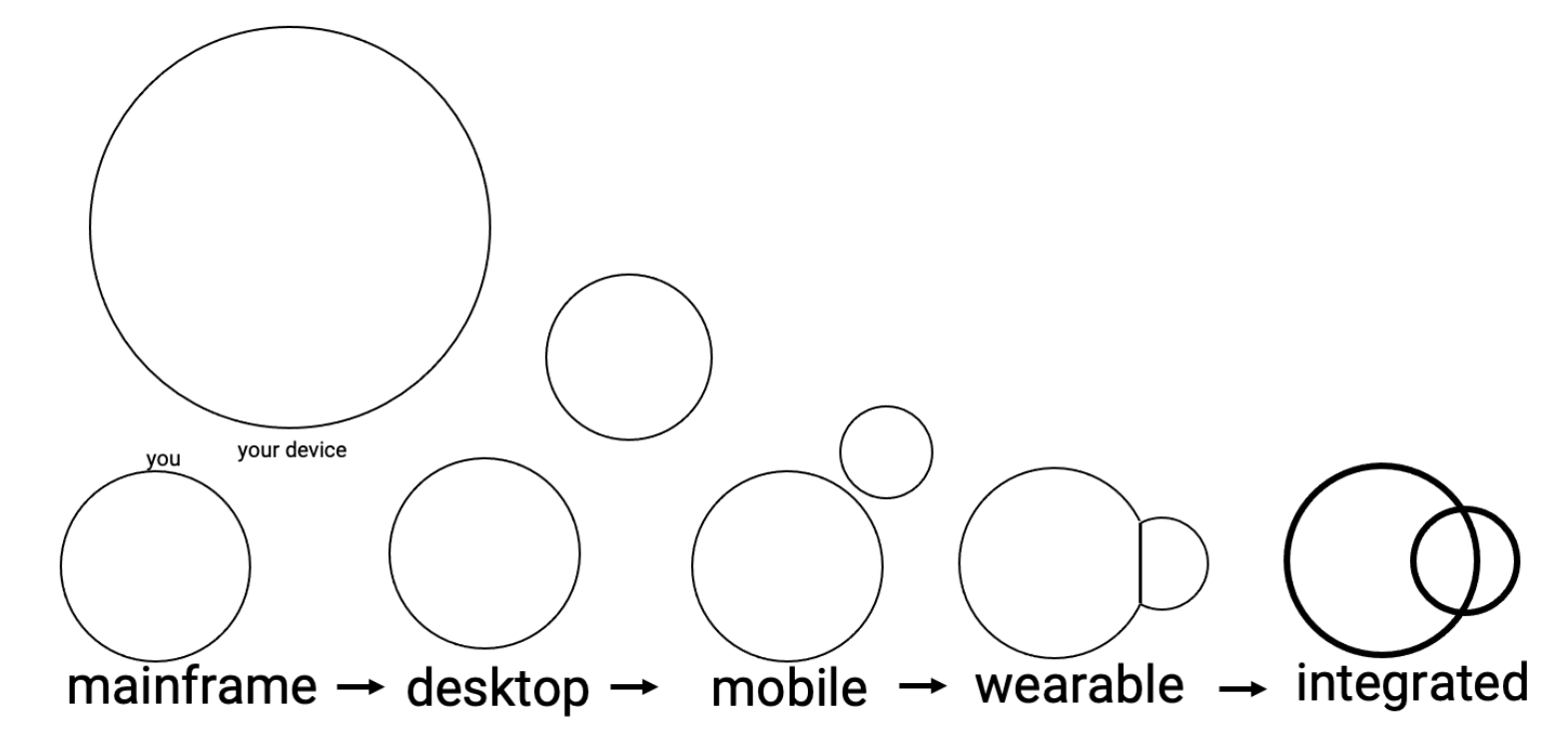

The next evolutionary step for interfaces. For years, I’ve laid out this evolution of computers in diagrammatic form, depicted in the figure (taken from my work [2]). This diagram asks a pressing question for the field of computing: What shape will the next type of interface take? If we extrapolate our three factors (i.e., smaller hardware, maximized I/O, and closer to the user’s body), then we can see on the horizon that the computer’s interface will integrate directly with the user’s biological body.

So, why does HCI need Neuroscience now? While the previous interface revolutions (desktop, mobile, wearable) could be solved by computer engineers & human-computer interaction researchers, this next revolution requires a new expertise: it requires neuroscience. I argue we are closing in on a hard barrier, the complexity of the human body & biology, which requires a turn to Neuroscience to form a deeper understanding of how to design these neural interfaces (e.g., any system designed to interface with brain signals, muscle signals, pain signals, etc.).

References

[1] Umer Farooq and Jonathan Grudin. 2016. Human-computer integration. interactions 23, 6 (November-December 2016), 26–32. https://doi.org/10.1145/3001896

[2] Florian Floyd Mueller, Pedro Lopes, et al. 2020. Next Steps for Human-Computer Integration. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20). Association for Computing Machinery, New York, NY, USA, 1–15. https://doi.org/10.1145/3313831.3376242