Human Computer Integration Lab

Computer Science Department, University of Chicago

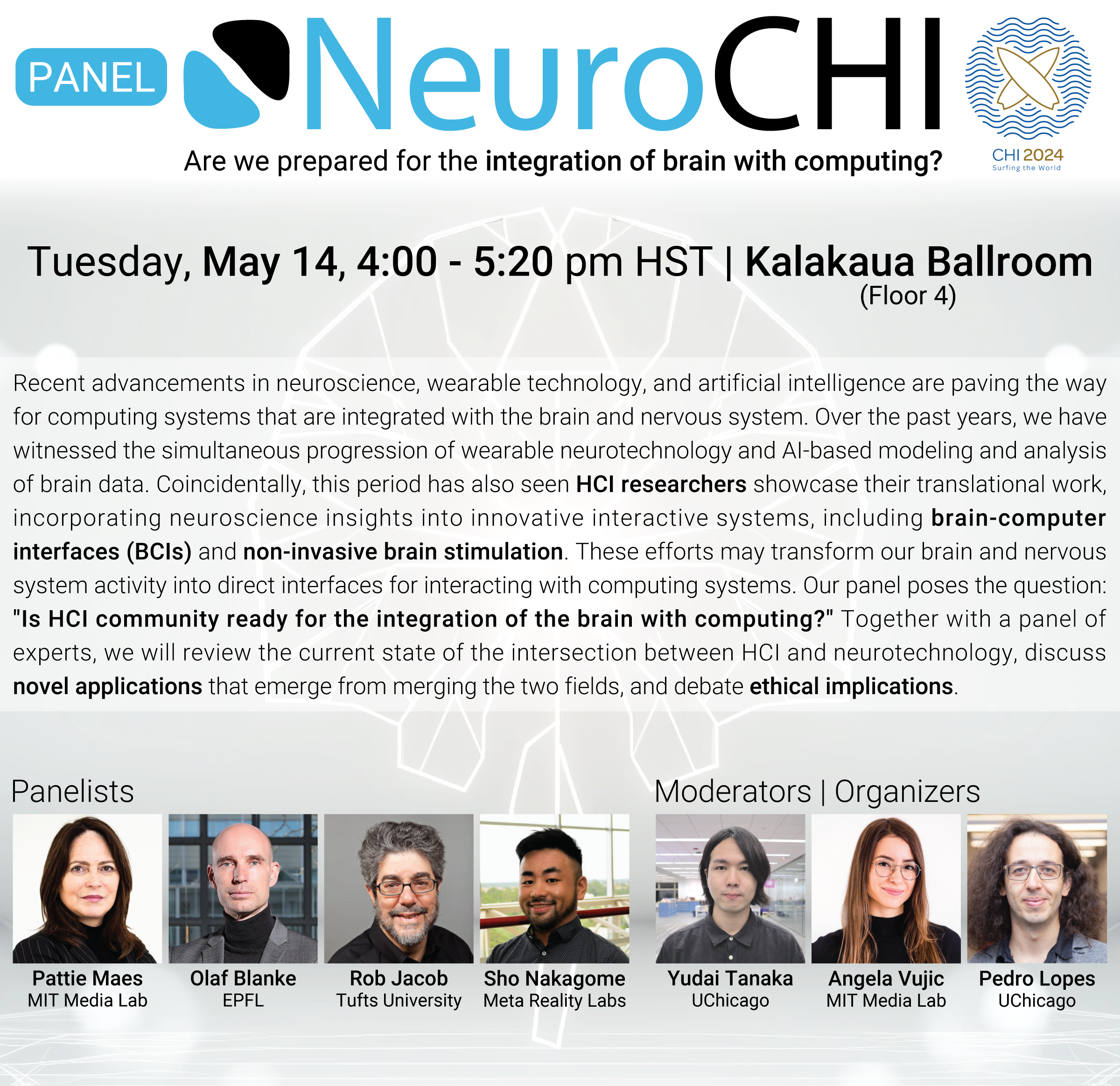

NeuroCHI: the Intersection of HCI and Neuroscience

Our group, with the help of our collaborators, organized the first NeuroCHI, where we asked the question: what happens at the intersection of Human-Computer Interaction and Neuroscience? Our first edition of NeuroCHI is a panel at ACM CHI 2024, which is shown below and here in the SIGCHI program.

Our CHI 2024 Panel co-institutions

Examples of our lab's approach to intersecting neuroscience and HCI

Publications

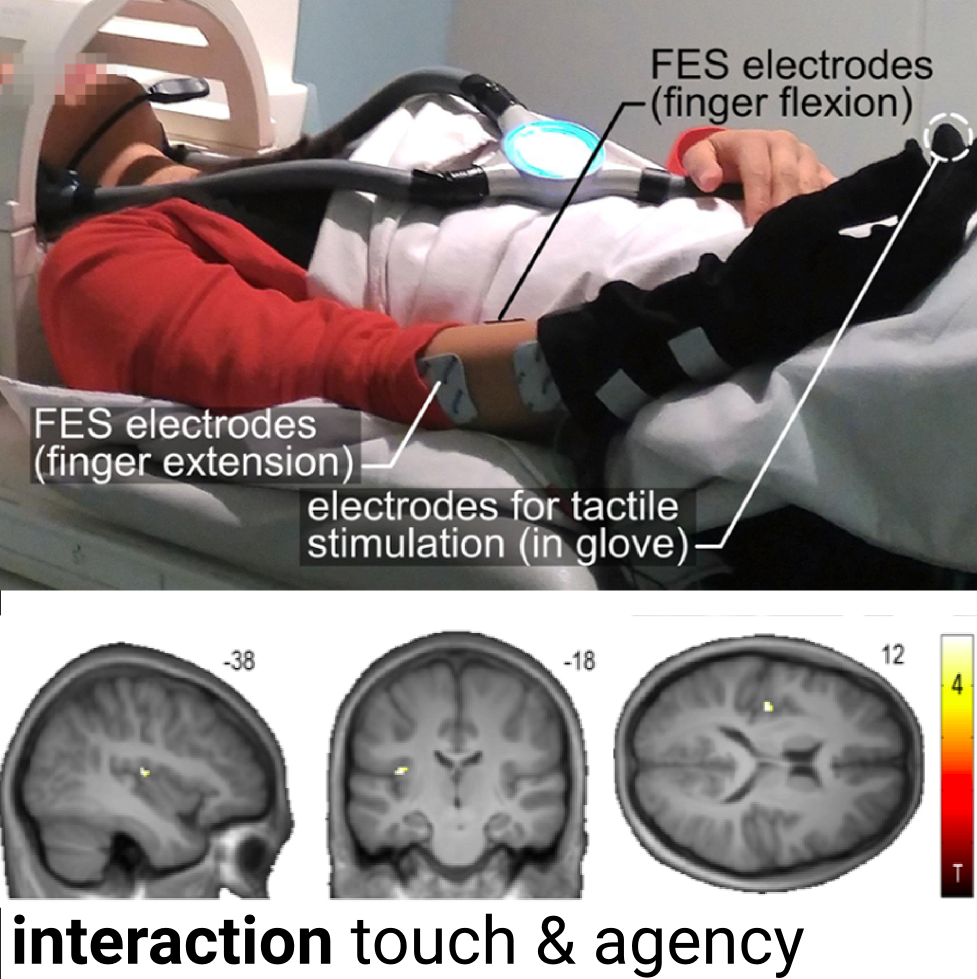

Action-dependent processing of touch in the human parietal operculum

Jakub Limanowski, Pedro Lopes, Janis Keck, Patrick Baudisch, Karl Friston, and Felix Blankenburg. In Cerebral Cortex (journal), to appear.

Tactile input generated by one’s own agency is generally attenuated. Conversely, externally caused tactile input is enhanced; e.g., during haptic exploration. We used functional magnetic resonance imaging (fMRI) to understand how the brain accomplishes this weighting. Our results suggest an agency-dependent somatosensory processing in the parietal operculum.

Preemptive Action: Accelerating Human Reaction using Electrical Muscle Stimulation Without Compromising Agency

Shunichi Kasahara, Jun Nishida and Pedro Lopes. In Proc. CHI’19, Paper 643 (full paper) and demonstration at SIGGRAPH'19 eTech.

Grand Prize, awarded by Laval Virtual in partnership with SIGGRAPH'19 eTech.

We found that it is possible to optimize the timing of haptic systems to accelerate human reaction time without fully compromising the user's sense of agency. This work was done in cooperation with Shunichi Kasahara from Sony CSL. Read more.

CHI'19 paper video SIGGRAPH'19 etech (soon) CHI'19 talk (slides) CHI talk video

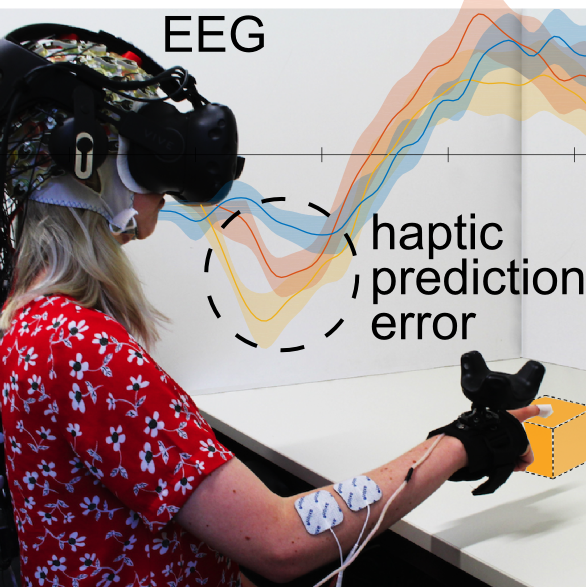

Detecting Visuo-Haptic Mismatches in Virtual Reality using the Prediction Error Negativity of Event-Related Brain Potentials

Lukas Gehrke, Sezen Akman, Pedro Lopes, Albert Chen, ..., Klaus Gramann. In Proc. CHI’19, Paper 427. (full paper)

We detect visuo-haptic mismatches in VR by analyzing the user's event-related potentials (ERP). In our EEG study, participants touched VR objects and received either no haptics, vibration, or vibration and EMS. We found that the negativity component (prediction error) was more pronounced in unrealistic VR situations, indicating visuo-haptic mismatches.

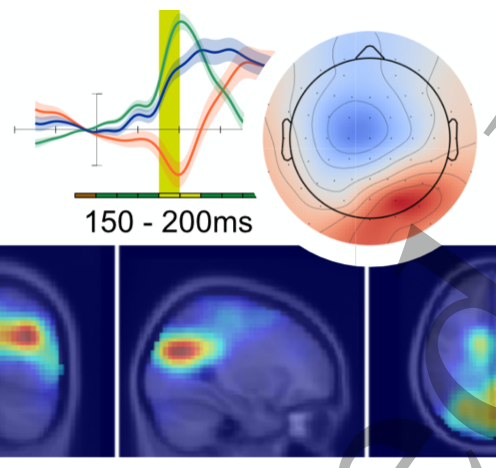

Neural Sources of Prediction Errors Detect Unrealistic VR Interactions

Lukas Gehrke, Pedro Lopes, Marius Klug, Sezen Akman and Klaus Gramann. In Journal of Neural Engineering (full paper)

In VR, designing immersion is one key challenge. Subjective questionnaires are the established metrics to assess the effectiveness of immersive VR simulations. However, administering questionnaires requires breaking the immersive experience they are supposed to assess. We present a complementary metric based on ERPs. For the metric to be robust, the neural signal employed must be reliable. Hence, it is beneficial to target the neural signal's cortical origin directly, efficiently separating signal from noise. To test this new complementary metric, we designed a reach-to-tap paradigm in VR to probe EEG and movement adaptation to visuo-haptic glitches. Our working hypothesis was that these glitches, or violations of the predicted action outcome, may indicate a disrupted user experience. Using prediction error negativity features, we classified VR glitches with ~77% accuracy. This work was a collaboration led by Klaus Gramann and his team at the Neuroscience Department at the TU Berlin.

Journal of Neural Engineering 2022

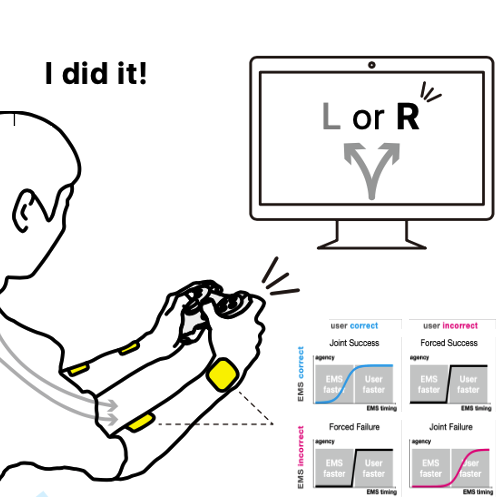

Whose touch is this?: Understanding the Agency Trade-off Between User-driven touch vs. Computer-driven Touch

Daisuke Tajima, Jun Nishida, Pedro Lopes, and Shunichi Kasahara. In Transactions of CHI'21 (full paper)

Force-feedback interfaces actuate the user's body to touch involuntarily (using exoskeletons or electrical muscle stimulation); we refer to this as computer-driven touch. Unfortunately, forcing users to touch causes a loss of their sense of agency. While we found that delaying the timing of computer-driven touch preserves agency, that work only considered the naive case in which user-driven touch is aligned with computer-driven touch. We argue this is unlikely, as it assumes we can perfectly predict user touches. But what about all the remaining situations: when the haptics forces the user into an outcome they did not intend, or assists the user in an outcome they would not achieve alone? We unveil, via an experiment, what happens in these novel situations. From our findings, we synthesize a framework that enables researchers of digital-touch systems to trade off between haptic assistance and sense of agency. Read more at our project's page (we have six other papers on this topic).

TOCHI'21 paper video (presented at CHI'22)

Affective Touch as Immediate and Passive Wearable Intervention

Yiran Zhao, Yujie Tao, Grace Le, Rui Maki, Alexander Adams, Pedro Lopes, and Tanzeem Choudhury. In Proc. UbiComp'23 (IMWUT) (full paper)

We investigated affective touch as a new pathway to passively mitigate in-the-moment anxiety. While existing mobile interventions offer great promise for health and well-being, they typically focus on achieving long-term effects such as shifting behaviors, and are thus not suited to giving immediate help, e.g., when a user experiences a high anxiety level. To this end, we engineered a wearable device that renders a soft stroking sensation on the user's forearm. Our results showed that participants who received affective touch experienced lower state anxiety and the same physiological stress response level compared to the control group participants. This work was a collaboration led by Tanzeem Choudhury and her team at Cornell.

Ubicomp'23 (IMWUT) paper

Full-hand Electro-Tactile Feedback without Obstructing Palmar Side of Hand

Yudai Tanaka, Alan Shen, Andy Kong, Pedro Lopes. In Proc. CHI'23 (full paper)

CHI best paper award (top 1%)

This technique renders tactile feedback to the palmar side of the hand while keeping it unobstructed and, thus, preserving manual dexterity. We implement this by applying electro-tactile stimulation without any electrodes on the palmar side, yet that is where tactile sensations are felt. This creates tactile sensations on 11 different places of the palm and fingers while keeping the palms free for dexterous manipulations (e.g., VR with props, AR with tools and more).

CHI'23 paper video CHI talk video hardware files

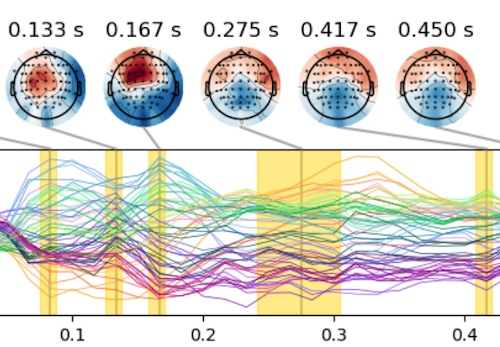

Temporal Dynamics of Brain Activity Predicting Sense of Agency over Muscle Movements

John P. Veillette, Pedro Lopes, Howard C. Nusbaum. In Journal of Neuroscience'23 (full paper)

We investigate the time course of neural activity that predicts the sense of agency over electrically actuated movements. We find evidence of two distinct neural processes: a transient sequence of patterns that begins in the early sensorineural response to muscle stimulation, and a later, sustained signature of agency. This work is part of our lab's exploration of how future interactive devices need to be designed to prioritize the user's sense of agency. Read more at our project's page (we have six other papers on this topic).

Jneuro'23 paper code

Interactive Benefits from Switching Electrical to Magnetic Muscle Stimulation

Yudai Tanaka, Akifumi Takahashi, Pedro Lopes. In Proc. UIST'23 (full paper)

After 10 years of working on electrical muscle stimulation, we wanted to take an introspective look and attempt to uncover any benefits gained by switching from electrical (EMS) to magnetic muscle stimulation (MMS). While much ink has been spilled about the advantages of EMS, not much work has investigated circumventing its key limitations: electrical impulses also cause an uncomfortable "tingling" sensation, and EMS relies on pre-gelled electrodes, which require direct contact with the user’s skin and dry up quickly. To tackle these limitations, we study force-feedback based on magnetic muscle stimulation, which we found to reduce uncomfortable tingling and enable stimulation over the clothes.

UIST'23 paper video code UIST talk video

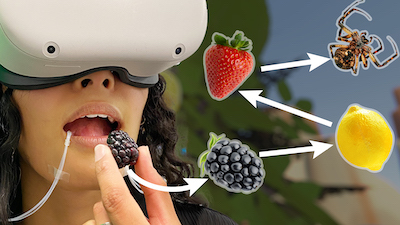

Taste Retargeting via Chemical Taste Modulators

Jas Brooks, Noor Amin, Pedro Lopes. In Proc. UIST'23 (full paper)

UIST Honorable mention award for Demo (Jury's choice)

Taste retargeting selectively changes taste perception using taste modulators: chemicals that temporarily alter the response of taste receptors to foods and beverages. As our technique can deliver droplets of modulators before eating or drinking, it is the first interactive method to selectively alter the basic tastes of real foods without obstructing eating or impacting the food’s consistency. It can be used, for instance, to enable a single food prop to stand in for many virtual foods: it can transform a pickled blackberry into a lemon (by decreasing sweetness with lactisole), a strawberry (by transforming sourness into sweetness with miraculin), and much more.

Haptic Source-effector: Full-body Haptics via Non-invasive Brain Stimulation

Yudai Tanaka, Jacob Serfaty, Pedro Lopes. In Proc. CHI'24 (full paper)

CHI honorable mention for best paper award (top 5%)

We propose a novel concept for haptics in which one centralized on-body actuator renders haptic effects on multiple body parts by stimulating the brain, i.e., the source of the nervous system—we call this a haptic source-effector, as opposed to the traditional wearables’ approach of attaching one actuator per body part (end-effectors). We implement our concept via transcranial-magnetic-stimulation. Our approach renders ∼15 touch/force-feedback sensations throughout the body (e.g., hands, arms, legs, feet, and jaw—which we found in our user study), all by stimulating the user’s sensorimotor cortex with a single magnetic coil moved mechanically across the scalp.

CHI'24 paper video CHI talk video (includes demo)

SplitBody: Reducing Mental Workload while Multitasking via Muscle Stimulation

Romain Nith, Yun Ho, Pedro Lopes. In Proc. CHI'24 (full paper)

CHI best paper award (top 1%)

Electrical muscle stimulation (EMS) offers promise in assisting physical tasks by automating movements (e.g., shaking a spray can that the user is using). However, these systems improve the performance of a task that users are already focusing on (e.g., users are already focused on the spray can). Instead, we investigate whether muscle stimulation systems offer benefits when they automate a task that happens in the background of the user’s focus. We found that automating a repetitive movement via EMS can reduce mental workload while users perform parallel tasks (e.g., focusing on writing an essay while EMS stirs a pot of soup).

CHI'24 paper video CHI talk video (includes demo)

A more exhaustive press coverage list of our lab's projects can be found here.