Human Computer Integration Lab

Computer Science Department, University of Chicago

Understanding Agency in Haptic Devices

Our group (with the help of our collaborators) attempts to understand the loss of agency when using haptic devices capable of actuating the user's body involuntarily. We asked ourselves: how does a user feel when they are moved by an external force, such as when using an exoskeleton or electrical muscle stimulation (EMS)? We try to answer the following questions: (1) can haptic systems actuate us to provide a significantly faster reaction time without entirely compromising our sense of agency? (CHI'19); (2) how does our brain integrate haptic feedback when we are moved by an external force such as EMS? (Cortex'19 and CHI'19); (3) do these systems speed us up permanently or only while we are wearing the device? (CHI'21); (4) are there optimal ways to learn from this speedup so as to preserve it even after we remove the device? (CHI'21); (5) what happens to agency when choice is involved? (TOCHI'21); and (6) how does the brain process movements that are not initiated by ourselves? (JNeuro'23). You can jump directly to our publications on agency.

The impact of haptic devices (especially EMS) on the sense of agency

Motivation: More than ten years have passed since researchers started using electrical muscle stimulation (EMS) in interactive systems. Kruijff et al. (VRST'06) explored how these medically inspired devices could change gaming on a desktop by allowing the user's muscles to contract in response to game events. This fueled researchers such as Emi Tamaki & Jun Rekimoto to open the doors of EMS to the CHI community (CHI'11). Ten years later, the HCI community has found remarkable new applications for EMS: Max Pfeiffer & Michael Rohs explored how to steer participants, Jun Nishida & Kenji Suzuki enabled communicating gestures from person to person, and I, together with Patrick Baudisch, explored how to turn the user's body into input and output devices (to cite just a few; for a more detailed timeline of the many contributing faces of EMS in HCI, see Pedro's PhD thesis). Furthermore, while EMS is certainly a more recent approach to force feedback, exoskeletons and other motor-based haptic devices have a long history.

Our goal: ten years after EMS' appearance in the HCI scene, and after decades of exoskeletons, it's about time we talk about agency. EMS-based haptic systems offer a compact and wearable form factor (compared to their mechanical counterparts, such as exoskeletons), but being moved by an external force feels weird. If you were ever moved by a haptic actuated device (be it EMS, an exoskeleton, or a robotic arm), you probably felt how strange it is to see and feel your body being moved by an external cause. In other words: you feel no sense of agency.

To answer why this happens, we turned to two core questions: (1) can haptic systems actuate us to provide a significantly faster reaction time without entirely compromising our sense of agency? (2) How does our brain integrate haptic feedback when we are moved by an external force such as EMS?

1. Preemptive Agency (CHI'19)

We explore how delaying the onset of the haptic actuation dramatically improves the sense of agency! Although this is only a first step toward understanding the relationship between agency and preemptive actuation, we think these results are really exciting: they allow us to build a model for choosing how much agency to sacrifice to gain reaction time speedups, and vice versa! We demonstrated that by delaying the onset of haptic actuation systems (such as EMS) we can accelerate human reaction time without compromising the user's agency! (video here)
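To make the agency versus speedup trade-off concrete, here is a minimal sketch of how one might pick an EMS onset from a set of measurements. It is not the model from the paper: the `TimingSample` fields, the `pick_onset` helper, the 0..1 agency scale, and all numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class TimingSample:
    """One hypothetical measurement taken at a given EMS onset."""
    ems_onset_ms: float      # delay between the visual stimulus and the EMS pulse
    reaction_time_ms: float  # resulting (EMS-accelerated) reaction time
    agency: float            # self-reported agency, normalized to 0..1 (assumed scale)


def pick_onset(samples: Sequence[TimingSample], min_agency: float) -> Optional[TimingSample]:
    """Return the fastest sample whose agency is at least min_agency, or None."""
    acceptable = [s for s in samples if s.agency >= min_agency]
    if not acceptable:
        return None
    return min(acceptable, key=lambda s: s.reaction_time_ms)


# Made-up numbers for illustration only; these are NOT results from the paper.
samples = [
    TimingSample(ems_onset_ms=50, reaction_time_ms=130, agency=0.10),
    TimingSample(ems_onset_ms=100, reaction_time_ms=170, agency=0.35),
    TimingSample(ems_onset_ms=150, reaction_time_ms=210, agency=0.70),
    TimingSample(ems_onset_ms=200, reaction_time_ms=250, agency=0.90),
]

best = pick_onset(samples, min_agency=0.6)
```

With these illustrative numbers, requiring an agency of at least 0.6 selects the 150 ms onset: an earlier onset would be faster but falls below the agency threshold, while a later onset preserves more agency at the cost of speed.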

Having established that it is possible to preserve a partial sense of agency while still allowing a user to react faster, we explored a series of applications that benefit from this. For instance, we demonstrated how a user can use our finding to take a photograph of a high-speed object in mid-air (we demonstrated this at SIGGRAPH eTech'19 and received the Laval Virtual Award for it).

2. Understanding Haptic Mismatches via Event-Related Brain Potentials (CHI'19)

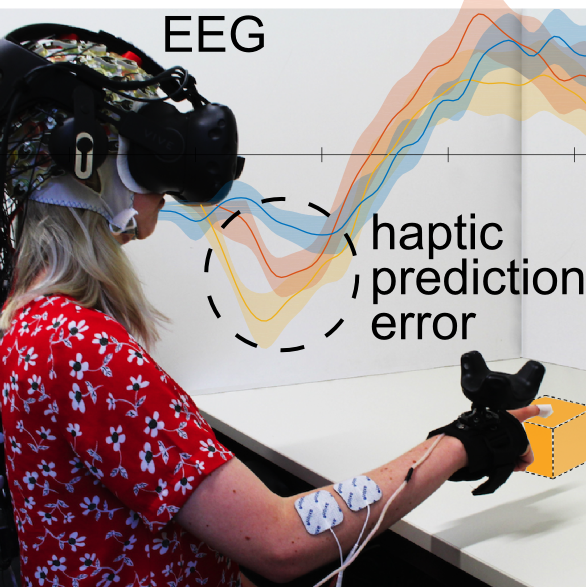

Going deeper into the question of agency in haptic interfaces, one might ask: how does our brain integrate and process these haptic signals? We uncovered another small piece of the haptic agency puzzle while trying to understand how to detect mismatches in virtual reality (VR) without having to ask users about their subjective experience; i.e., can we evaluate the coherence of a visuo-haptic VR experience without having to show you a presence questionnaire?

In this project, instead of asking the user, we measured their brain's responses, using EEG, as they interacted with VR objects that also provided haptic feedback (vibration and/or EMS). We found that when there is a mismatch between visuals and haptics (e.g., they are out of sync), our brain's event-related potential (ERP) looks very different from when things feel right. In fact, there is a pronounced negative valley in the user's ERP when things deviate from our expectations. This means we can use EEG to detect mismatches between visuals and haptics while a user is interacting with a virtual environment. This is a very different way to understand realism in VR, one which does not rely on asking users questions.
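As a rough illustration of the idea (not our analysis pipeline), an ERP is commonly obtained by averaging many EEG epochs time-locked to the same event, after which conditions can be compared by how negative the waveform gets in a window of interest. The sketch below assumes epochs are already cut, aligned, and expressed in microvolts; the function names, the window, the margin, and the toy values are all hypothetical. A real analysis would also involve filtering, baseline correction, artifact rejection, and statistical testing.

```python
def average_erp(epochs):
    """Average equally long, time-locked epochs sample by sample."""
    if not epochs:
        raise ValueError("need at least one epoch")
    n_samples = len(epochs[0])
    if any(len(e) != n_samples for e in epochs):
        raise ValueError("all epochs must have the same length")
    return [sum(e[i] for e in epochs) / len(epochs) for i in range(n_samples)]


def peak_negativity(erp, start, stop):
    """Most negative amplitude in the sample window [start, stop)."""
    return min(erp[start:stop])


def is_more_negative(reference_erp, test_erp, start, stop, margin_uv):
    """True if test_erp dips at least margin_uv below reference_erp in the window."""
    return peak_negativity(test_erp, start, stop) <= (
        peak_negativity(reference_erp, start, stop) - margin_uv
    )


# Toy epochs (microvolts), invented for illustration; not recorded data.
match_epochs = [[0.0, -1.0, 1.0], [0.0, -3.0, 1.0]]
mismatch_epochs = [[0.0, -5.0, 1.0], [0.0, -7.0, 1.0]]

match_erp = average_erp(match_epochs)
mismatch_erp = average_erp(mismatch_epochs)
flagged = is_more_negative(match_erp, mismatch_erp, start=0, stop=3, margin_uv=2.0)
```

In this toy example the mismatch condition dips lower than the matching condition by more than the chosen margin, so it is flagged; the margin itself is an arbitrary placeholder rather than a validated threshold.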

It is precisely this mismatch in expectations, which we observed even when users did not consciously realize things were off, that might help us understand the puzzle of agency and EMS. These unrealistic situations might be very similar to when we are moved by an inexplicable external force, such as EMS.

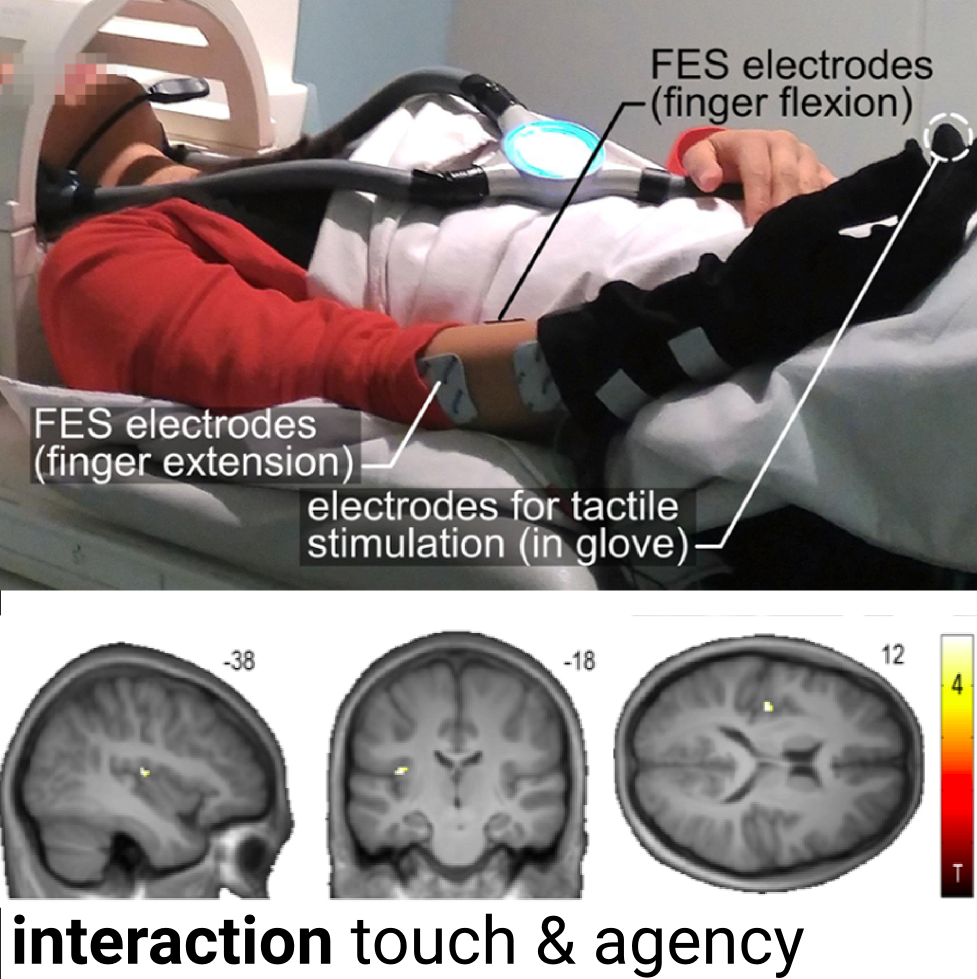

3. Agency-dependent processing of touch (Cortex'19)

Going even deeper into the neural processes, with the assistance of functional magnetic resonance imaging (fMRI) we can examine our brain at work when we are moved by external forces like EMS. We used fMRI to examine how agency (i.e., whether you move yourself consciously or EMS moves you) impacts how our brain interprets and integrates sensory information such as touch sensations!

Our findings shed some light on an old sensory puzzle: tactile input generated by one’s own agency is generally attenuated; conversely, externally caused tactile input is enhanced; e.g., during haptic exploration. Our results suggest an agency-dependent somatosensory processing in the parietal operculum; in other words: agency drives tactile perception too.

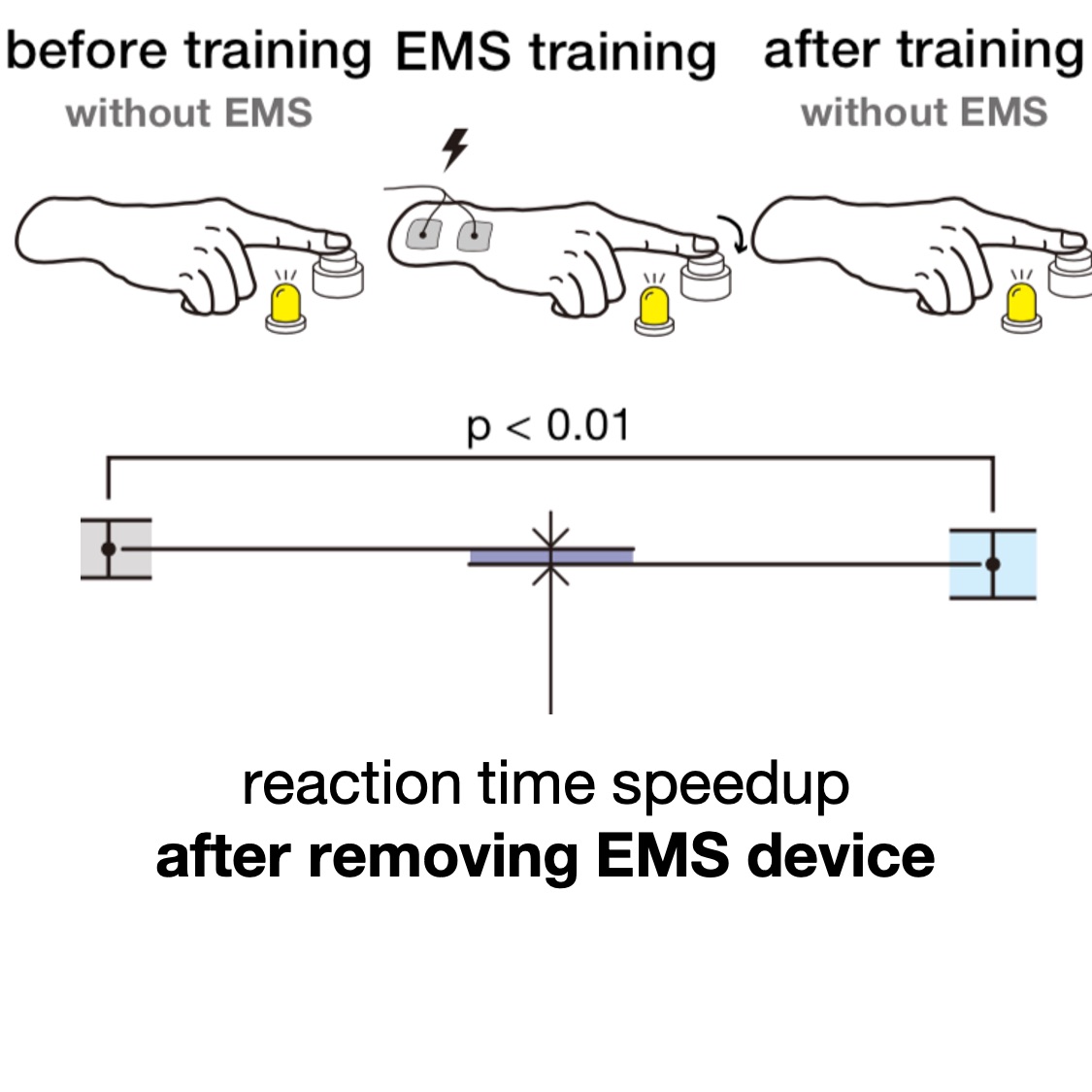

4. Agency is key to speedups after removing the EMS device! (CHI'21)

Now that we have established that it is possible to speed up someone's reaction time while they wear EMS, we turn to what happens when they remove the device. We found that after training on reaction time tasks with EMS, one's reaction time remains accelerated even after removing the EMS device. What is remarkable is that the key to the optimal speedup is not applying EMS as soon as possible (the traditional view on EMS stimulus timing) but delaying the EMS stimulus closer to the user's own reaction time, while still delivering it faster than humanly possible; in short, it turns out that Preemptive Action is also better for retaining the effects of EMS training! (video here)

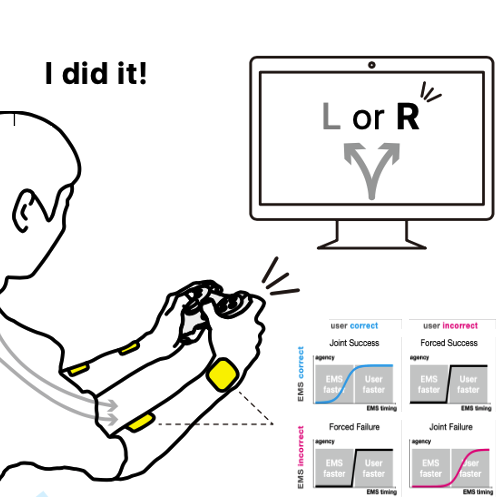

5. When choice is involved: agency is modulated by the outcome of the task (TOCHI'21)

So far, we only considered the naive case in which user-driven touch is aligned with computer-driven touch. We argue this is unlikely, as it assumes we can perfectly predict the user's touches. But what about all the remaining situations: when the haptics force the user into an outcome they did not intend, or assist the user in an outcome they would not achieve alone? We unveil, via an experiment, what happens in these novel situations. From our findings, we synthesize a framework that enables researchers of digital-touch systems to trade off haptic assistance against sense of agency.

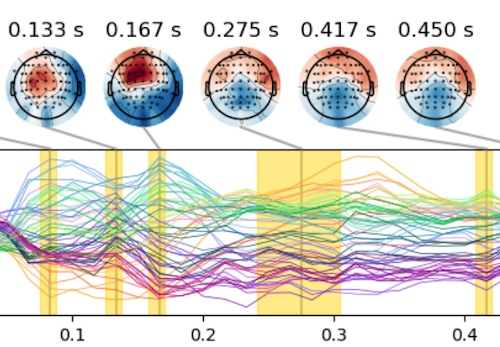

6. How does the brain's processing of movements with agency differ from electrically actuated movements? (JNeuro'23)

We investigate the time course of neural activity that predicts the sense of agency over electrically actuated movements. We find evidence of two distinct neural processes: a transient sequence of patterns that begins in the early sensorineural response to muscle stimulation and a later, sustained signature of agency. These results shed light on the neural mechanisms by which we experience our movements as volitional.

Publications

Preemptive Action: Accelerating Human Reaction using Electrical Muscle Stimulation Without Compromising Agency

Shunichi Kasahara, Jun Nishida and Pedro Lopes. In Proc. CHI’19, Paper 643 (full paper) and demonstration at SIGGRAPH'19 eTech.

Grand Prize, awarded by Laval Virtual in partnership with SIGGRAPH'19 eTech. We found that it is possible to optimize the timing of haptic systems to accelerate human reaction time without fully compromising the user's sense of agency. This work was done in cooperation with Shunichi Kasahara from Sony CSL. Read more.

CHI'19 paper video SIGGRAPH'19 eTech (soon) CHI'19 talk (slides) CHI talk video

Preserving Agency During Electrical Muscle Stimulation Training Speeds up Reaction Time Directly After Removing EMS

Shunichi Kasahara, Kazuma Takada, Jun Nishida, Kazuhisa Shibata, Shinsuke Shimojo and Pedro Lopes. In Proc. CHI’21 (full paper)

We found that after training on reaction time tasks with electrical muscle stimulation (EMS), one's reaction time remains accelerated even after removing the EMS device. What is remarkable is that the key to the optimal speedup is not applying EMS as soon as possible (the traditional view on EMS stimulus timing) but delaying the EMS stimulus closer to the user's own reaction time, while still delivering it faster than humanly possible. In cooperation with our colleagues from Sony CSL, RIKEN Center for Brain Science, and Caltech.

CHI'21 paper video CHI talk video source code

Whose touch is this?: Understanding the Agency Trade-off Between User-driven touch vs. Computer-driven Touch

Daisuke Tajima, Jun Nishida, Pedro Lopes, and Shunichi Kasahara. In TOCHI’21 (full paper)

Force-feedback interfaces actuate the user to touch involuntarily (using exoskeletons or electrical muscle stimulation); we refer to this as computer-driven touch. Unfortunately, forcing users to touch causes a loss of their sense of agency. While we previously found that delaying the timing of computer-driven touch preserves agency, that work only considered the naive case in which user-driven touch is aligned with computer-driven touch. We argue this is unlikely, as it assumes we can perfectly predict the user's touches. But what about all the remaining situations: when the haptics force the user into an outcome they did not intend, or assist the user in an outcome they would not achieve alone? We unveil, via an experiment, what happens in these novel situations. From our findings, we synthesize a framework that enables researchers of digital-touch systems to trade off haptic assistance against sense of agency.

TOCHI'21 paper (preprint)

Action-dependent processing of touch in the human parietal operculum

Jakub Limanowski, Pedro Lopes, Janis Keck, Patrick Baudisch, Karl Friston, and Felix Blankenburg. In Cerebral Cortex (journal), to appear.

Tactile input generated by one’s own agency is generally attenuated. Conversely, externally caused tactile input is enhanced; e.g., during haptic exploration. We used functional magnetic resonance imaging (fMRI) to understand how the brain accomplishes this weighting. Our results suggest an agency-dependent somatosensory processing in the parietal operculum.

Detecting Visuo-Haptic Mismatches in Virtual Reality using the Prediction Error Negativity of Event-Related Brain Potentials

Lukas Gehrke, Sezen Akman, Pedro Lopes, Albert Chen, ..., Klaus Gramann. In Proc. CHI’19, Paper 427. (full paper)

We detect visuo-haptic mismatches in VR by analyzing the user's event-related potentials (ERP). In our EEG study, participants touched VR objects and received either no haptics, vibration, or vibration and EMS. We found that the negativity component (prediction error) was more pronounced in unrealistic VR situations, indicating visuo-haptic mismatches.

Temporal Dynamics of Brain Activity Predicting Sense of Agency over Muscle Movements

John P. Veillette, Pedro Lopes, Howard C. Nusbaum. In Journal of Neuroscience'23 (full paper)

We investigate the time course of neural activity that predicts the sense of agency over electrically actuated movements. We find evidence of two distinct neural processes: a transient sequence of patterns that begins in the early sensorineural response to muscle stimulation and a later, sustained signature of agency. This work is part of our lab's exploration of how future interactive devices need to be designed to prioritize the user's sense of agency; read more at our project's page (we have six other papers on this topic).

JNeuro'23 paper code

Project's team

(some of) our collaborators:

Shunichi Kasahara (Sony CSL)

John P. Veillette (UChicago)

Howard Nusbaum (UChicago)

Jakub Limanowski (UCL)

Karl Friston (UCL)

Felix Blankenburg (FU Berlin)

Klaus Gramann (TU Berlin)

(see complete list of collaborators for this project in each paper's author list).

Press on our agency in haptics work

- CNBC full feature on our work

- Gizmodo (full press article about Preemptive Action)

- IEEE Spectrum (full press article about Preemptive Action)

- Boing Boing (article about Preemptive Action)

- Tech Xplore (full press article about Preemptive Action)

- Business Today article about our EMS work with agency

- Spektrum (German) article about our agency work

- SIGCHI Medium (article by Pedro Lopes about the research questions behind our two 2019 CHI papers)

- HiTecher (press article about Preemptive Action)

- Medical Gadget (press article about Preemptive Action)

- My24 online newspaper (article about Preemptive Action)

- MobiGeek (article about Preemptive Action)

- UChicago News (full article about agency in EMS, including our Cerebral Cortex and two 2019 CHI papers)

- UChicago CS (full article about agency in EMS, including our Cerebral Cortex and two 2019 CHI papers)

- Reddit Thread (no research can be complete without people on reddit discussing it!)

A more exhaustive press coverage list of our lab's projects can be found here.